Meeting the Moment: Addressing Barriers and Facilitating Clinical Adoption of Artificial Intelligence in Medical Diagnosis

Introduction

Clinical diagnosis is essentially a data curation and analysis activity through which clinicians seek to gather and synthesize enough pieces of information about a patient to determine their condition. The art and science of clinical diagnosis dates to ancient times, with the earliest diagnostic practices relying primarily on clinical observations of a patient’s state, coupled with methods of palpation and auscultation (Mandl and Bourgeois, 2017; Berger, 1999). Following a period of stagnation in clinical diagnostic practices, the 17th through 19th centuries marked a period of discovery that transformed modern clinical diagnostics, with the advent of the microscope, laboratory analytic techniques, and more precise physical examination and imaging tools (e.g., the stethoscope, ophthalmoscope, X-ray, and electrocardiogram) (Walker, 1990). These foundational achievements, among many others, laid the groundwork for modern clinical diagnostics. However, the volume and breadth of data for which clinicians are responsible has exponentially grown, generating challenges for human cognitive capacity to assimilate.

Computerized diagnostic decision support (DDS) tools emerged to alleviate the burden of data overload, enhance clinicians’ decision-making capabilities, and standardize care delivery processes. DDS tools are a subcategory of clinical decision support (CDS) tools, with the distinction that DDS tools focus on diagnostic functions, whereas CDS tools more broadly can offer diagnostic, treatment, and/or prognostic recommendations. Debuting in the 1970s and 1980s, expert-based DDS tools such as MYCIN, Iliad, and Quick Medical Reference operated by encoding then-current knowledge about diseases through a series of codified rules, which rendered a diagnostic recommendation (Miller and Geissbuhler, 2007). While these early DDS tools initially achieved pockets of success, the promise of many of these tools diminished as several shortcomings became evident. Most prominently, the capacity of data collection and the complexity of knowledge representation prevented accurate representation of the pathophysiological relationships between a disease and treatments. Programmed with a limited set of information and decision rules, several expert-based DDS tools could not generalize to all settings and cases. Some suffered from performance issues as well, often struggling to generate a result or yielding an errant diagnosis. Moreover, users were frustrated. Since these tools existed outside of the main clinical information systems, clinicians had to reenter a long list of information to use them, which created significant friction in their workflows. Similarly, updating the knowledge base of a DDS system often required cumbersome manual entry. Finally, there was a lack of incentives to drive adoption. Thus, provider acceptance remained low, and expert-based DDS tools faded from use (Miller, 1994).

The revitalization of the field of artificial intelligence (AI), the ability of computer algorithms to perform tasks that typically require human intelligence, offers an opportunity to augment human diagnostic capabilities and address the limitations of expert-based DDS tools (Yu, Beam, and Kohane, 2018). Current AI techniques possess not only remarkable processing power, speed, and ability to link and organize large volumes of multimodal data, but also the ability to learn and adjust based on novel inputs, building upon previous knowledge to generate new insights. For this reason, AI approaches, specifically machine learning (ML), are especially well suited to the problems of clinical diagnosis: shortening the time to disease detection, improving diagnostic accuracy, and reducing medical errors. By doing so, AI diagnostic decision support (AI-DDS) tools could reduce the cognitive burden on providers, mitigate burnout, and further enhance care quality.

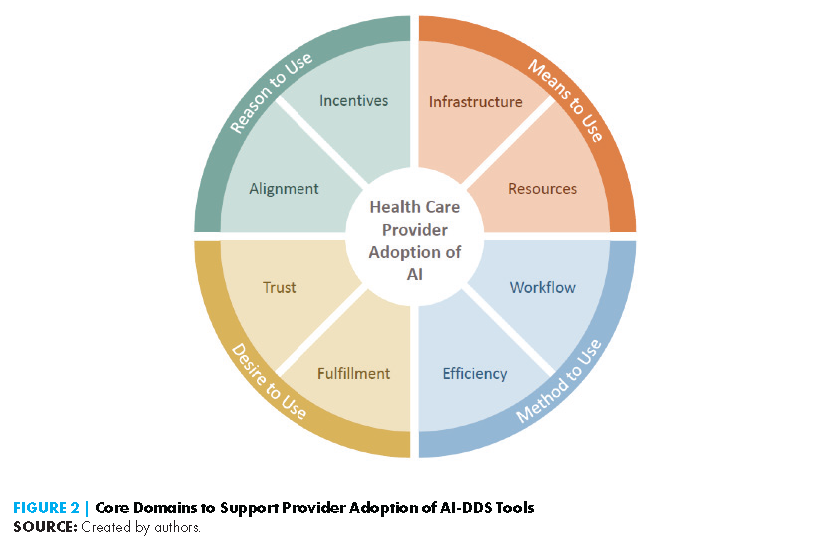

While contemporary AI-DDS tools are more sophisticated than their expert-based predecessors, concerns about their development, interoperability, workflow integration, maintenance, sustainability, and workforce requirements remain, hampering the adoption of AI-DDS tools. Additionally, the “black box” nature of some AI systems poses liability and reimbursement challenges that can affect provider trust and adoption. This paper examines the key factors related to the successful adoption of AI-DDS tools, organized into four domains: reason to use, means to use, method to use, and desire to use. Additionally, the paper discusses the crosscutting issues of bias and equity as they relate to provider trust and adoption of these tools. Addressing biases and inequities perpetuated by AI tools is paramount to preventing the widening of disparities experienced by certain populations and to engendering confidence and trust among clinicians who are responsible for providing care to these populations. To conclude, the authors discuss the policy implications around the adoption of AI-DDS systems and propose action priorities for providers, health systems leaders, legislators, and policy makers to consider as they engage in collaborative efforts to advance the longevity and success of these tools in supporting safe, effective, efficient, and equitable diagnosis.

A Primer on AI-Diagnostic Decision Support Tools

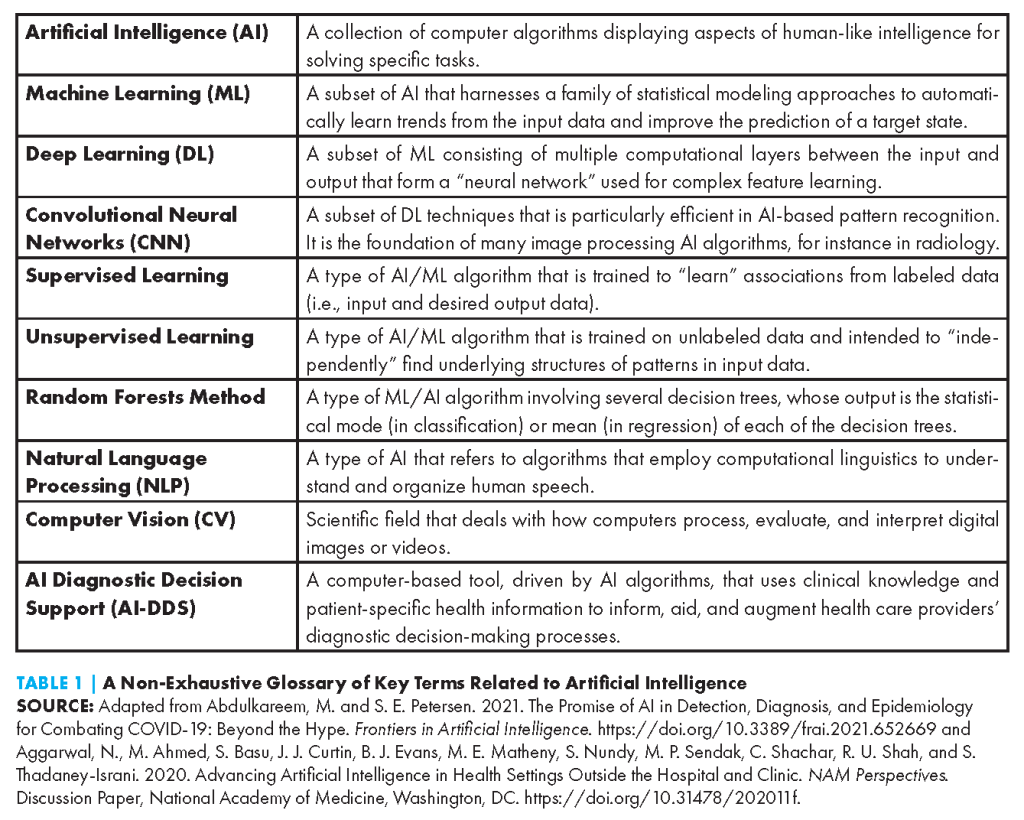

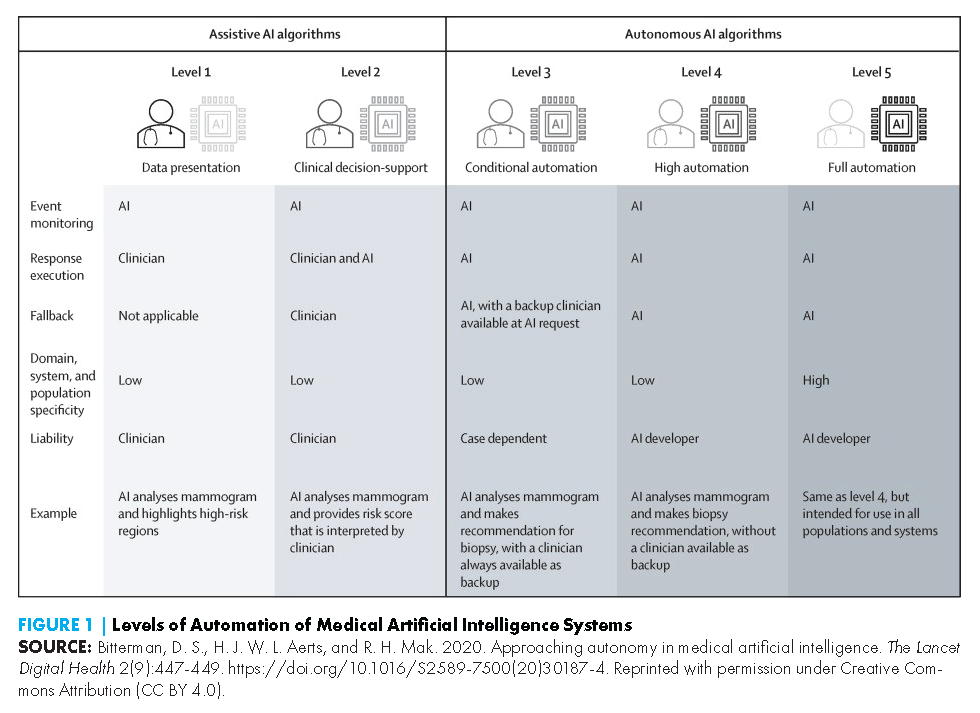

AI-DDS tools come in various forms, use myriad AI techniques (see Table 1), and can be applied to a growing number of conditions and clinical disciplines. In this paper, the authors focus on adoption factors as they relate to assistive AI-DDS tools. Unlike autonomous AI tools, which operate independently from a human, assistive AI tools involve a human to some degree in the analysis and decision-making process (see Figure 1) (Bitterman, Aerts, and Mak, 2020). The authors in this paper focus on AI-DDS tools designed to support health care professionals in decision-making processes, rather than consumer-facing tools in which a layperson interacts with an AI-DDS system.

Current AI-DDS tools reflect artificial narrow intelligence (ANI), i.e., the application of high-level processing capabilities on a single, predetermined task, as opposed to artificial general intelligence (AGI), which refers to human-level reasoning and problem-solving skills across a broad range of domains. AI-aided diagnostic tools are designed to address specific clinical issues related to a prescribed range of clinical data. They do not (and are not intended to) comprise omniscient, science-fiction-like algorithmic interfaces that can span all disease contexts. Ultimately, the purpose of AI-DDS tools is to augment provider expertise and patient care rather than dictate it.

Generally, assistive AI-DDS tools currently use a combination of computer vision and ML techniques such as deep learning, working to identify complex non-linear relationships between features of image, video, audio, in vitro, and/or other data types, and anatomical correlates or disease labels. The authors highlight a few representative examples below.

Most prominently, assistive AI-DDS tools can be found in the field of diagnostic imaging, given the highly digital and increasingly computational nature of the field. In fact, radiology boasts more Food and Drug Administration (FDA)-authorized (that is, cleared or approved) AI tools than any other medical specialty (Benjamens et al., 2020). A well-studied algorithm within the cardiac imaging space is HeartFlow FFRCT. Trained on large amounts of computed tomography (CT) scans, this algorithm employs deep learning to create a precise 3D visualization of a patient’s heart and major vessels to assist in the detection of arterial blockage (Heartflow, 2014). Deep learning methods can also be applied to gauge minute variations in cardiac features such as ventricle size and cardiac wall thickness to make distinctions between hypertrophic cardiomyopathy and cardiac amyloidosis – two conditions which have similar clinical manifestations and can often be misdiagnosed (Duffy et al., 2022). Within oncology, ML techniques in the form of computer-aided detection systems have been used since the 1990s to support early detection of breast cancer (Fenton et al., 2007; Nakahara et al., 1998). Since then, the FDA has approved several AI-based cancer detection tools to help detect anomalies in breast, lung, and skin images, among others (Shen et al., 2021; Ray and Gupta, 2020; Ardila et al., 2019). Many of these models have been shown to improve diagnostic accuracy and prediction of cancer development well before onset (Yala et al., 2019).

Beyond imaging, AI applications include the early recognition of sepsis, one of the leading causes of death worldwide. Electronic health record (EHR)-integrated decision tools such as Hospital Corporation of America (HCA) Healthcare’s Sepsis Prediction and Optimization Therapy (SPOT) and the Sepsis Early Risk Assessment (SERA) algorithm developed in Singapore draw on a vast repository of structured and unstructured clinical data to identify signs and symptoms of sepsis up to 12 – 48 hours sooner than traditional methods. In this regard, natural language processing (NLP) of unstructured clinical notes is particularly promising. NLP helps to discern information from a patient’s social history, admission notes, and pharmacy notes to supplement findings from blood results, creating a richer picture of a person’s risk for sepsis (Goh et al., 2021; HCA Healthcare Today, 2018). However, there are significant concerns about the clinical utility and generalizability of these tools across different geographic settings (Wong et al., 2021).

In the fields of mental health and neuropsychiatry, AI-DDS tools hold potential for combining multimodal data to uncover pathological patterns of psychosocial behavior that may facilitate early diagnosis and intervention. For instance, the FDA recently authorized marketing of an AI-based diagnostic aid for autism spectrum disorder (ASD) developed by Cognoa, Inc. As a departure from deep learning and convolutional neural networks (CNNs), the Cognoa algorithm is based on random forest decision trees. It integrates information from three sources to provide a binary prediction of ASD diagnosis:

- a brief parent questionnaire regarding child behavior completed via mobile app,

- key behaviors identified in videos of child behaviors, and

- a brief clinician questionnaire.

The tool has demonstrated safety and efficacy for ASD diagnosis in children ages 18 months to five years, performing at least as well as conventional autism screening tools (Abbas et al., 2020). There have also been promising demonstrations of AI for diagnosing depression, anxiety, and posttraumatic stress disorder (Lin et al., 2022; Khan et al., 2021; Marmar et al., 2019).

AI-DDS systems are also becoming increasingly common in the field of pathology, particularly in vitro AI-DDS tools. Akin to the radiological examples, AI techniques can analyze blood and tissue samples for the presence of diagnostic biomarkers and characterize cell or tissue morphology. For example, a model developed by PreciseDx uses CNNs to calculate the density of Lewy-type synucleinopathy, a biomarker of early Parkinson’s disease, in the peripheral nerve tissue of salivary glands (Signaevsky et al., 2022).

Facilitating Provider Adoption of AI-Diagnostic Decision Support Tools

Despite the significant potential AI-DDS tools hold in augmenting medical diagnosis, these tools may fail to achieve wide clinical uptake if there is insufficient clinical acceptance. A particularly telling example is that of the early expert-based DDS tools (the forerunners to modern AI-DDS systems), which fell short of provider expectations because of a host of usability and performance issues, as discussed in the Introduction.

However, the deficiencies of these early expert-based DDS tools are instructive for facilitating the adoption of contemporary AI-DDS tools. Additionally, lessons learned from implementing current non-AI-based DDS tools (i.e., systems that generate recommendations by matching patient information to a digital clinical knowledge base) can offer insight. The authors of this paper present a model for understanding the key drivers of clinical adoption of AI-DDS tools by health systems and providers alike, drawing from these historical examples and the current discourse around AI, as well as notable frameworks of human behavior (Ajzen, 1985; Ajzen, 1991). This model focuses on eight major determinants across four interrelated core domains, and the issues covered within each domain are as follows (see Figure 2):

- Domain 1: Reason to use explores the alignment of incentives, market forces, and reimbursement policies that drive health care investment in AI-DDS.

- Domain 2: Means to use reviews the data and human infrastructure components as well as the requisite technical resources for deploying and maintaining these tools in a clinical environment.

- Domain 3: Method to use discusses the workflow considerations and training requirements to support clinicians in using these tools.

- Domain 4: Desire to use considers the psychological aspects of provider comfort with AI, such as the extent to which the tools alleviate clinician burnout, provide professional fulfillment, and engender overall trust. This section also examines medicolegal challenges, one of the biggest hurdles to fostering provider trust in and the adoption of AI-DDS.

Domain 1: Reason to Use

At the outset, the adoption and scalability of a given AI-DDS tool are driven by two simple but critical factors that dictate the fate of nearly any novel technology being introduced into a health setting. The first factor is the ability of a tool to address a pressing clinical need and improve patient care and outcomes (alignment with providers’ and health systems’ missions). Considering that these tools require sufficient financial investment for deployment and maintenance, the second factor is the tool’s affordability both to the patient and health system, including the incentives for the provider, patient, and health system to justify the costs of acquiring the tool and investments needed to implement it. The issues related to Alignment with Health Care Missions and to Incentives and Reimbursement are, in practice, deeply intertwined and codependent. However, for the purposes of the discussion that follows, the authors have separated the two for clarity, emphasizing the logistical and technical steps relevant to Incentives and Reimbursement.

Alignment with Health Care Missions

AI-DDS tools must facilitate the goals and core objectives of the health care institution and care providers they serve, although the specific impetus and pathway for AI-DDS tool adoption can vary by organization. For instance, risk prediction and early diagnosis AI-DDS tools being developed and implemented by the Veterans Health Administration (VHA), the largest integrated health care system in the United States, were initiated by governmental mandates and congressional acts requiring the VHA to improve specific patient outcomes in this population (e.g., the Comprehensive Addiction and Recovery Act) (114th Congress, 2016b). Such initiatives, mandated on a national level, benefit immensely because the VHA is a nationalized health care service, capable of deploying resources in an organized fashion and on a large scale. Another pathway by which these tools can be introduced into clinical settings is through private AI developers collaborating with academic health centers or other independent health systems. These collaborations can result in the creation of novel AI-DDS tools or the customization of “off-the-shelf” commercial tools. A recent example of this type of partnership is Anumana, Inc., a newly founded health technology initiative between Nference (a biomedical start-up company) and Mayo Clinic focused on leveraging AI for early diagnosis of heart conditions based on ECG data (Anumana, 2022). In this context, the AI-DDS development process may be geared toward a given health system’s specific needs or strategic missions. However, this does not necessarily preclude its broader utility in other health systems.

A useful framework for evaluating the necessity and utility of AI-DDS tools relates to the Quintuple Aim of health care – better outcomes, better patient experiences, lower costs, better provider experiences, and more equitable care (Matheny et al., 2019). Given the link between patient outcomes and provider experience, it is also important to establish and validate the accuracy of new AI-DDS tools at the start of the adoption process and throughout its use. However, there are often discrepancies between AI-DDS developers’ scope and the realities of clinical practice, resulting in tools that can be either inefficient or only tangentially useful. To reassure providers that their tools are optimized for clinical effectiveness, health system leaders must be committed to regular evaluations of AI-DDS models and performance, as well as efficient communication with developers and companies to update algorithms based on changes like diagnosis prevalence and risk-factor profiles. As algorithms are deployed, and their output is presented to providers in EHR systems, special attention must be paid to the information design and end-user experience to optimize providers’ ability to extract key information and act on it efficiently (Tadavarthi et al., 2020). Another critical step in proving robust clinical utility of an AI-DDS tool will be to demonstrate low burden of unintended harms and consequences with use of a given tool (i.e., high sensitivity and high specificity) (Unsworth et al., 2022). The degree to which provider reasoning impacts the AI-DDS will also play a role in this regard. Finally, in implementing care plans based in part on AI-DDS output, all care team members must be coordinated in their response and long-term follow-up roles (see Domain 2: Means to Use for discussion about requisite resources and roles to accomplish these tasks).
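The evaluation metrics mentioned above can be made concrete with a minimal sketch. It assumes a tool's output has been compared against a reference diagnosis and tallied into four counts; the class, function names, and numbers are hypothetical illustrations, not drawn from any tool discussed in this paper. It also shows why regular re-evaluation matters when diagnosis prevalence changes: with sensitivity and specificity held fixed, the positive predictive value (computed here with Bayes' rule) drops as the condition becomes rarer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Counts from comparing tool output against a reference diagnosis."""
    true_pos: int
    false_pos: int
    true_neg: int
    false_neg: int


def sensitivity(c: ConfusionCounts) -> float:
    """Fraction of patients with the condition that the tool flags."""
    return c.true_pos / (c.true_pos + c.false_neg)


def specificity(c: ConfusionCounts) -> float:
    """Fraction of patients without the condition that the tool clears."""
    return c.true_neg / (c.true_neg + c.false_pos)


def ppv_at_prevalence(sens: float, spec: float, prevalence: float) -> float:
    """Positive predictive value via Bayes' rule for a given prevalence."""
    flagged_with_condition = sens * prevalence
    flagged_without_condition = (1.0 - spec) * (1.0 - prevalence)
    return flagged_with_condition / (flagged_with_condition + flagged_without_condition)


# Illustrative validation counts (made up for this sketch).
counts = ConfusionCounts(true_pos=90, false_pos=50, true_neg=950, false_neg=10)
sens = sensitivity(counts)
spec = specificity(counts)

# Same tool, two hypothetical deployment settings with different prevalence.
for prev in (0.10, 0.01):
    print(f"prevalence={prev:.2f}  PPV={ppv_at_prevalence(sens, spec, prev):.3f}")
```

In this made-up example, the same sensitivity and specificity yield a much lower positive predictive value at 1% prevalence than at 10%, which is one reason local validation and periodic re-evaluation are emphasized.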

Incentives and Reimbursement

Many health care systems operate on razor-thin financial margins (Kaufman Hall & Associates, 2022). Moving forward, robust insurance reimbursement programs for the purchase and use of AI-DDS tools will be critical to promoting greater adoption by providers and health systems (Chen et al., 2021). However, incentive structures and payer reimbursement protocols for AI-DDS tools are in their nascent stages. Furthermore, insurance dynamics, including for AI-DDS systems, are particularly complex in the U.S., due in part to the heterogeneity of potential payers that range from governmental entities to private insurers to self-insured employers.

In the current fee-for-service environment, a general trend is for the Centers for Medicare and Medicaid Services (CMS), the federal agency that is the nation’s largest health care payer, to be the first to establish payment structures for new technologies and for private payers to then emulate the standards set by CMS (Clemens and Gottlieb, 2017). In determining whether to reimburse the use of a novel AI-DDS tool (and to what extent), a primary consideration for payers, regardless of type, is to assess whether the technology in question pertains to a condition or illness that falls under the coverage benefits of the organization. For instance, an AI-DDS system may be deemed a complementary or alternative health tool, which may fall outside the scope of many insurance plans and, therefore, be ineligible for reimbursement. If the AI-DDS tool is indeed related to a benefit covered by an insurer (for examples of AI-DDS tools currently reimbursed by U.S. Medicare, see Parikh and Helmchen, 2022), developers must provide payers with an adequate evidentiary basis for the utility and safety of the new tool. For this assessment, payers often require data similar to what the FDA would require for premarket approval of a device, such as clinical trial data showing effectiveness (clinical validity and utility) or other solid evidence that clinical use of the tool improves health care outcomes (Parikh and Helmchen, 2022). Developers bringing new DDS systems to market through FDA’s other market authorization pathways, such as 510(k) clearance or de novo classification, may lack such data and need to generate additional evidence of safety and effectiveness to satisfy payers’ data requirements (Deverka and Dreyfus, 2014). Ongoing post-marketing surveillance to verify the clinical safety and effectiveness of new AI-DDS tools thus is important not only to support the FDA’s continuing safety oversight but also as a source of data to support payers’ evaluation processes.

Experts in health care technology assessment highlight two components of AI-DDS evaluation that are of particular interest to payers: potential algorithm bias and product value. Payers must be convinced that a given AI-DDS will perform accurately and improve outcomes in the specific populations they serve. As described later in this paper, algorithm bias can arise with the use of non-representative clinical data in AI-DDS algorithm development and testing and may lead to suboptimal performance in disparate patient populations based on geographic or socioeconomic factors, as well as in historically marginalized populations (e.g., the elderly and disabled, homeless/displaced populations, and LGBTQ communities). To avoid such biases, monitoring and local validation need to be incorporated into reimbursement frameworks. With regard to product value, payers may weigh the potential clinical benefits of an AI-DDS tool relative to standard diagnostic approaches against the logistical and workflow disruptions that introducing and integrating a new tool into health systems may cause (Tadavarthi et al., 2020; Parikh and Helmchen, 2022). Furthermore, payers can also seek assurance of long-term technical support from algorithm developers.

Although there are no direct reimbursement channels for many types of AI-DDS tools, within the scope of CMS payment systems, there are currently two primary mechanisms through which AI-DDS services can be reimbursed. The first is the Medicare Physician Fee Schedule (MPFS), through which CMS reimburses physician office services. Within MPFS, payment details are specified via Current Procedural Terminology (CPT) codes, maintained by the American Medical Association (AMA). CPT codes denote different procedures and services provided in the clinic. New AI-CDS/DDS systems that receive approval for reimbursement by CMS may be assigned a CPT code, as was done in 2020 for IDx-DR, an autonomous AI tool for the diagnosis of diabetic retinopathy (Digital Diagnostics, 2022). The second CMS mechanism is the Inpatient Prospective Payment System (IPPS) for hospital inpatient services. Within IPPS, the Diagnosis Related Groups (DRG) coding system describes bundles of procedures and services provided to clusters of medically similar patients. Novel AI-DDS tools can be reimbursed in the context of a DRG via a mechanism known as the New Technology Add-on Payment (NTAP). NTAP, created to encourage the adoption of promising new health technologies, provides supplemental payment to a hospital for using a given new technology in the context of a broader care plan that may be covered in the original DRG (Chen et al., 2021).

As AI-DDS systems become more prevalent, sophisticated, and integrated into broader diagnostic workflows, distinguishing their specific role in the diagnostic process and ascribing specific reimbursement values to an algorithm may become difficult. AI-DDS tools may fare better and enjoy greater adoption under value-based payment frameworks, where efficiency and overall quality of care are incentivized rather than individual procedures (Chen et al., 2021).

Domain 2: Means to Use

Paramount to establishing the value proposition is ensuring that clinical environments are properly equipped to support and sustain the implementation of AI-DDS tools. This consists of two interrelated elements: (a) the data and computing infrastructure required to collect and clean health care data, develop and validate an AI algorithm at the point of care, and perform routine maintenance and troubleshooting of technical problems in a high-throughput environment; and (b) the human and operational resources needed to conduct these technical functions so clinicians can seamlessly interface with these tools.

Infrastructure

Building the necessary infrastructure to deploy AI-DDS relies on developing the hardware and software capabilities to support a range of functions beginning with data processing and curation. Concurrent with developing and implementing a working AI-DDS pipeline, several health IT infrastructure and data flow steps are required to support the implementation and sustainment of an AI-DDS tool. The first point of entry into the pipeline is data ingestion. This step requires linking a data producer, such as an MRI machine, into a data collection and processing workflow to maintain and represent the data in a way that can be leveraged by an AI-DDS algorithm. Many AI-DDS systems currently in use are “locked,” which means that the algorithms are static. However, in the case of a continuous learning/adaptive AI system, in which the system ingests new data to update the algorithm in “real-time,” ingestion and updating could be performed on a fixed schedule (e.g., every day or every month) or in response to a trigger. The next consideration is determining where and how the raw data is stored (e.g., an enterprise data warehouse [EDW] versus a data lake). In practice, these considerations are constrained by, first, the specific clinical problem being addressed and, second, the extent to which the available resources can accommodate the complexity of the pipeline. An EDW, which contains structured, filtered data for specific uses, may be preferred for operational analysis, whereas a data lake, which is a large repository of raw data for purposes yet to be specified, may be selected by institutions seeking to perform deep research analysis. While model development is a distinct step in building an AI pipeline, it is nonetheless interdependent with deployment considerations. For example, an institution seeking to build analytic tools that are robust to future changes in imaging (e.g., adding a new MRI machine) may opt for the more flexible architecture of a data lake instead of a traditional EDW. This, in turn, creates dependency cascades, since the choice of data storage changes the order and extent to which data cleaning and other pre-processing pipelines are implemented. Thus, AI-DDS development and implementation choices are both business operations and data science decisions, since their steps are codependent.
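The distinction drawn above between locked systems and adaptive systems that refresh on a fixed schedule or on a trigger can be sketched as a small policy object. This is an illustration only: the `UpdatePolicy` class, its fields, and the choice of "number of pending records" as the trigger are assumptions made for the example, not a description of any deployed system.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class UpdatePolicy:
    """Hypothetical policy for when a model ingests new data and updates."""
    locked: bool                 # locked (static) models are never updated
    interval: timedelta          # fixed schedule, e.g. daily or monthly
    new_record_trigger: int      # trigger: update once this many records accumulate

    def should_update(self, last_update: datetime, now: datetime,
                      pending_records: int) -> bool:
        if self.locked:
            return False
        schedule_due = (now - last_update) >= self.interval
        trigger_fired = pending_records >= self.new_record_trigger
        return schedule_due or trigger_fired


adaptive = UpdatePolicy(locked=False, interval=timedelta(days=30),
                        new_record_trigger=5000)
locked = UpdatePolicy(locked=True, interval=timedelta(days=30),
                      new_record_trigger=5000)
last = datetime(2024, 1, 1)

# Neither the schedule nor the trigger is met.
print(adaptive.should_update(last, datetime(2024, 1, 10), pending_records=120))
# The trigger fires before the schedule is due.
print(adaptive.should_update(last, datetime(2024, 1, 10), pending_records=6000))
# The schedule is due even though few records are pending.
print(adaptive.should_update(last, datetime(2024, 2, 5), pending_records=120))
# A locked model ignores both conditions.
print(locked.should_update(last, datetime(2024, 2, 5), pending_records=6000))
```

In practice the trigger could be something other than record volume (for example, a detected change in an upstream data source); the point of the sketch is only that schedule-based and trigger-based updating are separate conditions that an institution's data plan would need to specify.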

Some clinical problems may require more frequent data updates or “data meals” to ensure that adaptive AI systems can appropriately address rapidly evolving issues with a nascent foundation of data. For instance, a COVID-19 diagnostic model at the beginning of the pandemic might have been built around admission vital signs and complete blood count (CBC) results. However, as knowledge about the natural history of the illness progressed, the model may have evolved to include additional data types such as erythrocyte sedimentation rates (ESR), chest X-ray (CXR) images, and metabolic panel data. In many hospital systems, adding the ESR values is not particularly challenging from a data ingestion standpoint because this data originates from the same system that provides the CBC values. However, the addition of CXR images is challenging because it requires working with another department – radiology, in this instance – and interfacing with another information system (picture archiving and communication system [PACS]). Finally, extending predictions from a single outcome at a discrete point in time (i.e., cross-sectional analysis) to multiple predictions or ones relying on time series data can impact upstream choices for data ingestion pipelines.

It is also important to consider that health care AI needs to be deployed in clinical workflows. In these settings, the demand for near real-time data can result in added hardware complexity, expense, and risk. Notably, for most AI-DDS systems, raw data is insufficient; high-quality data that has been curated and annotated is required for robust algorithm training. At a minimum, redundant storage and processing cores capable of model training and validation are essential. While the granular technical requirements are specific to the algorithm employed, the amount and type of data (e.g., images vs. audio vs. text) may require institutions seeking to implement AI-DDS tools to access storage on the terabyte and potentially petabyte scale. However, not all data are required to be available for real-time access. Furthermore, while discussion of data privacy and security is beyond the scope of this section, there are numerous Health Insurance Portability and Accountability Act (HIPAA)-compliant cloud solutions that could address the issues of availability of real-time data access and storage. These issues should be carefully considered in an institution’s data plan when seeking to develop and deploy AI-DDS tools.

Another major consideration beyond storage is processing power, particularly for model development and model updating. The types and number of specific chipsets that would be most beneficial should be determined by expert consultation once there is some understanding of the clinical use case and the amount and type of medical data involved. Due to their computational requirements, deep learning-based models might require the use of graphics processing units (GPUs). In contrast to central processing units (CPUs), GPUs can process many operations in parallel across a large number of cores, which is particularly useful for deep learning models. While such models could be run on conventional CPUs, efficiency may be reduced by several orders of magnitude depending on model complexity, resulting in models that take weeks to train rather than hours.

Finally, with respect to deployment, it is essential that there is a local solution permitting any mission-critical AI-DDS tools to continue to function at times when internet connectivity is disrupted. Previously, these “downtime” events were often limited to a few hours or days. However, now that hospitals are increasingly targeted by ransomware attacks, some planning should be made for what to do if a downtime event lasts weeks or months.

With respect to software needs, the ability of models to run on mobile devices is becoming increasingly important. As such, the ability to either securely log on to a hospital’s server or perform the computations for an AI-DDS on a mobile device is becoming the industry standard, rather than a bespoke one-off requirement for providers enthusiastic about technology. The extent to which health systems should invest in such technology depends on the amount and type of data, the complexity and efficiency of AI/ML models, and the clinical scenario the AI-DDS is addressing. To illustrate, consider an AI-DDS that predicts the need for hospital admission based on data collected by a traveling wound care nurse, who checks capillary blood glucose and uploads a picture of a patient’s worsening extremity wound. All of this can now be done on a mobile device: the nurse takes a picture and runs the model at the point of care using an application on the device.

Another key consideration for deployment of AI-DDS tools is system interoperability. This issue can be conceptualized from many different “pain points”. One occurs at the data ingestion stage, as discussed previously. This may be due to incompatible EHR systems (e.g., the hospital’s inpatient system uses Cerner, but the outpatient clinics use Epic), which cannot “speak” to one another. Alternatively, a health system may have hospitals that use the same EHR, but the EHRs do not share a common data storage repository. Although everyone uses the same PACS system, pulling imaging data from hospitals A, B, and C requires accessing one server, while pulling data from hospitals X, Y, and Z across the state requires accessing a different server, an issue of interoperability related to information exchange. A second ingestion scenario would require harmonization of different sensors into the same repository. For example, the hospital may use multiple types of point-of-care glucose monitors. The workflow workaround is often that the bedside technician looks at the monitor reading and then types it into the EHR. However, if this data needed to be transitioned into an automatically collected format, there may need to be different integrations for each type of glucose monitor. A second “pain point” occurs in the data cleaning stage, known as the data curation stage. Consider the ramifications of a hospital changing from reporting hemoglobins to hematocrits or traditional troponins to high sensitivity troponins. While this makes little difference at the bedside, it has the potential to signifi cantly complicate AI/ML modeling if the change is not recognized and a standardized process for addressing the inconsistency is not developed. Although a hospital’s primary focus should be on selecting tools that enhance value for patients, some attention should be devoted to considering how these tools may impact AI-DDS pipelines. 
As the reliance on cyber-physical systems grows, health systems should plan for how physical equipment upgrades change AI/ML data ingestion and use pipelines. Usually, such changes have a trivial effect on overall model performance; however, they can significantly impact the time and effort required to pre-process data. The most efficient approach would be to include members on the AI-DDS team with expertise in cyber-physical systems and extract, transform, and load (ETL) data pipelines.
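The two ingestion and curation problems described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the two monitor formats, their field names, and the assay labels are hypothetical and do not correspond to any real device or EHR export. The first part maps each monitor format onto one common record; the second part flags the date on which a reported assay changes so that the change is recognized rather than silently absorbed into training data.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

# Approximate conversion factor for glucose: 1 mmol/L is about 18.016 mg/dL.
MGDL_PER_MMOLL = 18.016


@dataclass
class GlucoseReading:
    patient_id: str
    taken_at: datetime
    mg_dl: float
    source: str


def from_monitor_a(row: dict) -> GlucoseReading:
    # Hypothetical monitor A: mg/dL values and ISO 8601 timestamps.
    return GlucoseReading(
        patient_id=row["pid"],
        taken_at=datetime.fromisoformat(row["time"]),
        mg_dl=float(row["glucose_mgdl"]),
        source="monitor_a",
    )


def from_monitor_b(row: dict) -> GlucoseReading:
    # Hypothetical monitor B: mmol/L values and Unix epoch timestamps.
    return GlucoseReading(
        patient_id=row["patient"],
        taken_at=datetime.fromtimestamp(row["epoch"], tz=timezone.utc),
        mg_dl=float(row["glucose_mmol"]) * MGDL_PER_MMOLL,
        source="monitor_b",
    )


def assay_changes(results: list[dict]) -> list[tuple[str, str, str]]:
    """Return (date, old_assay, new_assay) each time the reported assay changes.

    Each result is a dict with an ISO date string under "date" and an
    assay label under "assay" (both hypothetical field names).
    """
    ordered = sorted(results, key=lambda r: r["date"])
    changes = []
    for prev, cur in zip(ordered, ordered[1:]):
        if prev["assay"] != cur["assay"]:
            changes.append((cur["date"], prev["assay"], cur["assay"]))
    return changes
```

Keeping one small adapter per device makes an equipment upgrade a local change, and flagging assay switches rather than converting values leaves the decision about how to reconcile the two assays to the clinical and data science team.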

In addition, ensuring providers can readily access AI-DDS tools is critical to adoption. Successfully deploying an AI-DDS tool requires optimizing the multitude of human and software factors involved in the patient care workflow. However, as a preliminary consideration, the essential task is building infrastructure that does not push clinicians to devise workarounds. There is ample evidence that clinicians will avoid using or develop workarounds for poorly tailored solutions or requirements that are perceived as being foisted on them and otherwise constitute yet another inefficiency in an already inefficient system. Regarding software, developers must be prepared to ensure that the tool can be used and viewed on both desktop and mobile devices and potentially by provider-facing and patient-facing versions of the EHR software. Transitioning between these various contexts should be seamless and, more importantly, provide the same information.

Resources

Apart from the data and computational infrastructure necessary to develop, implement, and maintain a health care AI-DDS solution, there are also significant human capital requirements. Practices and health systems often lack the required human resources to run a minimum data infrastructure that can support AI-powered applications. Key requirements include, but are not limited to, frontline IT staff, data architects, and AI-machine learning specialists to understand the context of use and tailor the solution to be fit for purpose. The infrastructure also requires information security and data privacy officers, legal and industrial contract officers for business and data use agreements, and IT educators to train and retrain providers and staff.

To ensure sustainable and safe integration of AI-DDS tools into clinical care, it is crucial that the tools meet the clinical needs of the institution while also maintaining alignment with best practice guidelines, which change over time (Sutton et al., 2020). This requires a governance process in the health care system, with time investments from executive leadership and sponsorship as well as committee and oversight mechanisms to provide regular review (Kawamanto et al., 2018). Direct clinical champions must also have dedicated time to interface between front-line clinicians and the leadership, informatics, and data science teams. These models and tools need to be assessed for accuracy in the local environment and modified and updated if they do not perform as expected. Lastly, they must be surveilled over time and checked regularly to ensure performance maintenance.

One of the major challenges in effectively deploying AI in health care is managing implementation and maintenance costs. Nationally, non-profit hospital systems report an average profit margin of around 6.5% (North Carolina State Health Plan and Johns Hopkins Bloomberg School of Public Health, 2021). These relatively slim margins encourage health care systems to be conservative in investing in unproved or novel technologies. Robust analysis of cost savings and cost estimates in the deployment of AI in health care is still lagging, with only a small number of articles found in recent systematic reviews, most of which focus on specific cost elements (Wolff et al., 2020). In general, industry estimates of the overall cost of development and implementation of such tools range from $15,000 to $1 million, depending on the complexity of the system and integration with workflow (Sanyal, 2021).

Another challenge is the tension between hiring a health care technology firm to develop or adapt the algorithms and tools into a health care environment versus hiring and supporting internal staff, which could cost between $600 and $1,550 a day (Luzniak, 2021). Even when much of the core data science expertise is hired into a system, data scientists spend about 45% of their time on data cleaning (GlobeNewswire, 2020). Because familiarity and ongoing business intelligence and clinical operations needs require managing data, many systems choose to hire internally for a portion of their infrastructure needs, which require a continued injection of capital.

Domain 3: Method to Use

Operationalizing and scaling innovations within the health care delivery system is costly and challenging. This is partly due to the heterogeneity of clinical workflows across and within organizations, medical specialties, patient populations, and geographic areas. Thus, AI-DDS tools must contend with this heterogeneity by plugging into key process steps that are universally shared. However, a weakness that limits options for reshaping physician workflows is the still nascent implementation science for deploying interventions that change provider behavior as well as the non-modularity and non-modifiability of extant, sometimes antiquated point-of-care software, including EHRs (Mandl and Kohane, 2012).

Coupled with workflow challenges is the issue of developing and deploying these tools in a manner that improves efficiency of practice and frees up cognitive and emotional space for providers to interact with their patients. There is a risk that unsuccessful systems will interfere with or detract from the diagnostic process through user interface distractions or data obfuscation, and this risk must be guarded against. In addition, extensive user training, both at onboarding and on an ongoing basis, supported by an equally nimble educational infrastructure, is necessary to ensure technical proficiency.

Workflow

AI-DDS tools must be effectively integrated into clinical workflows to impact patient care. Unfortunately, many integrations of AI solutions into clinical care fail to improve outcomes because context-specific factors limit efficacy when tools are diffused across sites. Although numerous details are crucial to integrating AI/ML tools into practice, three key insights have emerged from experiences integrating AI/ML tools into practice at various locations and drawn from literature reviews of the AI clinical care translation process (Kellogg et al., 2022; Sendak et al., 2020a; Yang et al., 2020; He et al., 2019; Wiens et al., 2019; Kawamoto, 2005).

First, health systems looking to use AI-DDS tools must recognize the factors that shape adoption and be willing to restructure roles and responsibilities to allow these tools to function optimally. The current state of health information technology centers workflows around the EHR, and AI tools often automate tasks that historically required manual data entry or review. Similarly, AI tools often codify clinical expertise and can prompt concern from clinicians who value autonomy (Sandhu et al., 2020). To navigate these complexities, health systems may need to develop new workflows that change clinical roles and responsibilities, including new ways for interdisciplinary teams to respond to AI alerts. For example, an increasing number of AI tools require staff in a remote, centralized setting to support bedside clinical teams (Escobar et al., 2020; Sendak et al., 2020b). Many hospitals already benefit from more manual remote, interdisciplinary support through services such as cardiac telemetry, eICU, and overnight teleradiology. Similarly, AI can decentralize the location of specialized services. For example, instead of diabetic retinopathy screening requiring a visit to a retina specialist, Digital Diagnostics now hosts automated AI machines at grocery stores (Digital Diagnostics, 2019).

Second, health systems must closely examine the unique impacts of AI integration on different stakeholders along the care continuum and balance stakeholder interests. This is a key facet in establishing the value proposition for the introduction of a new AI-DDS tool. Experience in AI integration reveals that “predictive AI tools often deliver the lion’s share of benefits to the organization, not to the end user” (Kellogg et al., 2022). Predictive AI tools often identify events before they happen, meaning the optimal setting for AI use is upstream of the setting typically affected by the event. For example, patients with sepsis die in the hospital and often in intensive care units, but timely intervention to prevent complications must occur within the emergency department (ED). Similarly, patients with end-stage renal disease often present to the ED to initiate dialysis, but preventive interventions must occur in primary care. Project leaders looking to integrate AI into workflows must map out value streams, and if value is captured by downstream stakeholders in a different setting, project leaders must identify other opportunities to create value for end users. One approach is to identify “how a tool can help the intended end users fix problems they face in their day-to-day work” (Kellogg et al., 2022). For example, when a team of cardiologists and vascular surgeons aimed to reduce unnecessary hospital admissions for patients with low-risk pulmonary embolisms (PEs), ED clinicians initially pushed back. Scheduling outpatient follow-up for a low-risk PE had historically been challenging, so the specialists offered to coordinate care for patients identified by the AI/ML tool and block off outpatient appointments to ensure timely follow-up, allowing both the tool and the clinicians to operate as efficiently as possible (Vinson et al., 2022).

Third, workflows should be continuously monitored and adapted to optimize the labor effort required to effectively use AI tools. For example, when a chronic kidney disease algorithm was implemented on a Duke Health Medicare population of over 50,000 patients, many patients identified by the algorithm as high risk for dialysis were already on dialysis or seeing a nephrologist outside of Duke (Sendak et al., 2017). Early intervention was no longer as relevant for these patients, so the team agreed to establish a new pre-rounding process by which a nurse filtered out patients already impacted by the outcome of interest. In contrast, after months of manually reviewing alerts for patients identified by an AI tool as high risk of inpatient mortality, the lead nurse felt confident that the algorithm identified appropriate patients (Braier et al., 2020). The team agreed to remove the manual review step and directly automate emails to hospitalist attendings to consider goals of care conversations. Lastly, there must also be feedback loops with end users to ensure that the AI tool continues to be appropriately used. For example, hospitalists using the inpatient mortality tool inquired about using the tool to triage patients to intensive care units. Similarly, nurses responding to sepsis alerts began asynchronously messaging clinicians in the ED through the EHR rather than calling and talking directly with the provider. These changes in communication approach and intended use may seem subtle but can undermine the validity of the tool and potentially harm patients. To avoid drift in workflow or use of AI tools, project leaders should clearly document algorithms and regularly train staff on appropriate use (Sendak et al., 2020c).
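A pre-rounding filter of the kind described for the chronic kidney disease algorithm could be sketched as follows. This is a minimal illustration, not the process used at any institution: the `Alert` fields, the function names, and the 0.5 risk threshold are all assumptions introduced for the example.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    patient_id: str
    risk_score: float               # model output, assumed to lie in [0, 1]
    on_dialysis: bool               # already experiencing the outcome of interest
    has_outside_nephrologist: bool  # already receiving specialist care elsewhere


def needs_review(alert: Alert, threshold: float = 0.5) -> bool:
    """Keep high-risk alerts for patients who could still benefit from early intervention."""
    already_managed = alert.on_dialysis or alert.has_outside_nephrologist
    return alert.risk_score >= threshold and not already_managed


def pre_round(alerts: list[Alert], threshold: float = 0.5) -> list[Alert]:
    """Filter out already-managed patients and list the remainder from highest to lowest risk."""
    kept = [a for a in alerts if needs_review(a, threshold)]
    return sorted(kept, key=lambda a: a.risk_score, reverse=True)
```

Whether such a step is automated or performed manually by a nurse is itself a workflow decision that, as the examples above show, may change as the team gains experience with the tool.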

Efficiency of Practice

The impact of AI-DDS tools and systems on the cognitive and clerical burdens of health care providers remains unclear. Successful tools would ideally reduce both burdens by delivering just-in-time diagnostic assistance in the most unobtrusive manner to providers while minimizing clerical tasks that might be generated by their use (e.g., extra clicks, menu navigation, more documentation). Experience with traditional CDS systems has shown that these tools are significantly more likely to be used if they are integrated into EHRs instead of existing as stand-alone systems. However, integration alone is insufficient. How that integration is executed – from the design of the user interfaces to the way alerts and notifications are displayed (e.g., triggers, cadence) or handled (e.g., non-interruptive versus interruptive alert) – is critical to practice efficiency and, ultimately, provider acceptance and adoption.

One major impediment is the high degree of difficulty integrating new software with vendor EHR products. Most integrations are “one-offs,” and, therefore, the technology fails to diffuse broadly. The 21st Century Cures Act (“Cures Act”) specifies a new form of health IT interoperability underpinning the redesign of provider-facing applications as modular components that can be launched within the context of the EHR, and which may be instrumental in delivering AI capabilities to the point of care (114th Congress, 2016a). The Cures Act and the federal rule that implements interoperability provisions require that EHRs have an application programming interface (API) granting access to patient records “with no special effort” (Wu et al., 2021; HHS, 2020). “APIs are how modern computer systems talk to each other in standardized, predictable ways. The Substitutable Medical Applications, Reusable Technologies (SMART) on Fast Healthcare Interoperability Resource (FHIR) API, required under the rule, enables researchers, clinicians, and patients to connect applications to the health system across EHR platforms” (Wu et al., 2021). Top EHR vendors have all incorporated common API standards (“SMART on FHIR”) into their products, creating a substantial opportunity for innovation in software and data-assisted health care delivery. Illustrative of the transformative potential of the integration of AI-DDS with EHRs is Apple’s decision to use the SMART API to connect its Health App to EHRs at over 800 health systems, giving 200 million Americans the option to acquire standardized and computable copies of their medical record data on their phones. The implementation science underpinning translation of machine learning to practice is nascent, however. Cultivating support for standards is driving an emerging ecosystem of substitutable apps, which can be added to or deleted from EHRs (like apps on a smartphone can). 
Such apps offer an opportunity to deliver the output of diagnostic algorithms within the provider workflow, during an EHR session and within a patient context (Barket and Johnson, 2021; Kensaku et al., 2021; Khalifa et al., 2021).
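To make the idea of a standardized API concrete, the sketch below builds a FHIR search request for a patient’s Observation resources and reads numeric results out of the returned Bundle. It is an illustration only: the base URL and identifiers a caller supplies are placeholders, the helper names are hypothetical, and a real SMART on FHIR app would first complete the SMART authorization flow and send the resulting access token with the request, which is omitted here.

```python
from urllib.parse import urlencode


def observation_search_url(base_url: str, patient_id: str, loinc_code: str) -> str:
    """Build a FHIR search URL for one patient's observations with a given LOINC code.

    FHIR token search parameters take the form "system|code".
    """
    query = urlencode({
        "patient": patient_id,
        "code": f"http://loinc.org|{loinc_code}",
    })
    return f"{base_url.rstrip('/')}/Observation?{query}"


def quantities(bundle: dict) -> list[tuple[float, str]]:
    """Extract (value, unit) pairs from the Observation entries of a FHIR Bundle."""
    results = []
    for entry in bundle.get("entry", []):
        quantity = entry.get("resource", {}).get("valueQuantity")
        if quantity is not None and "value" in quantity:
            results.append((quantity["value"], quantity.get("unit", "")))
    return results
```

Because the request and response shapes are defined by the standard rather than by an individual vendor, the same two functions could in principle be pointed at any EHR that exposes the required API.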

EHR alert fatigue is a widespread and well-studied phenomenon among providers that has been linked to avoidable medical errors and burnout (Ommaya et al., 2018). How the introduction of AI-DDS systems into next-generation EHRs might affect alert fatigue and the provider experience is unclear. Successful deployment of these AI-DDS tools likely requires use of both human factors engineering and informatics principles, as the problem arises from the technology and how busy humans interact with it. Diagnostic outputs provided by the DDS should be specific, and clinically inconsequential information should be reduced or eliminated. Outputs should be tiered according to severity, with any alternative diagnoses presented in a way that directs providers to clinically important data. Alerts must be designed with human factors principles in mind (e.g., format, content, legibility, placement, colors). Only the most important, high-level, or severe alerts should be made interruptive.
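The tiering principle above can be sketched as a small routing rule. The three severity levels, their names, and the mapping to delivery modes are assumptions made for illustration; an actual deployment would set these tiers through clinical governance rather than in code defaults.

```python
from enum import IntEnum


class Severity(IntEnum):
    INFO = 1      # clinically inconsequential; not surfaced
    MODERATE = 2  # shown passively in the chart
    SEVERE = 3    # interrupts the provider


def delivery_mode(severity: Severity) -> str:
    """Map a severity tier to how (or whether) the output is shown."""
    if severity == Severity.SEVERE:
        return "interruptive"
    if severity == Severity.MODERATE:
        return "non-interruptive"
    return "suppressed"


def tier_outputs(findings: list[tuple[str, Severity]]) -> list[tuple[str, str]]:
    """Drop suppressed findings and order the rest from most to least severe."""
    shown = [(label, sev) for label, sev in findings if delivery_mode(sev) != "suppressed"]
    shown.sort(key=lambda item: item[1], reverse=True)
    return [(label, delivery_mode(sev)) for label, sev in shown]
```

Keeping the mapping in one place makes it straightforward to audit how many outputs reach providers as interruptive alerts and to adjust the tiers if alert fatigue becomes apparent.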

While thoughtful human-centered design can facilitate adoption to an extent, some degree of health care provider training will be required to ensure the necessary competencies to use AI-based DDS tools. The rapid pace of technological change requires such educational infrastructure to be equally nimble. Training opportunities must be integrated across undergraduate medical education, graduate medical education, and continuing medical education. To the extent that some AI-DDS tools are designed to support collaborative team workflows, interprofessional and multidisciplinary training is also necessary. While competencies surrounding the use of AI-DDS systems are still evolving and yet to be established, the authors of this paper have identified the following core areas as essential:

- Foundational knowledge (“What is this tool?”);

- Critical appraisal (“Should I use this tool?”);

- Clinical decision-making (“When should I use this tool?”);

- Technical use (“How should I use this tool?”);

- Addressing unintended consequences (“What are the side effects of this tool and how should I manage them?”).

For foundational knowledge, health care providers need to understand the fundamentals of AI, how AI-DDS are created and evaluated, their critical regulatory and medicolegal issues, and the current and emerging roles of AI in health care. For critical appraisal, providers need to be able to evaluate the evidence behind AI-DDS systems and assess their benefits, harms, limitations, and appropriate uses via validated evaluation frameworks for health care AI. For clinical decision-making, providers need to identify the appropriate indications for and incorporate the outputs of AI-DDS into decision-making such that effectiveness, value, and fairness are enhanced. For technical use, providers need to perform the tasks critical to operating AI-based DDS in a way that supports efficiency, builds mastery, and preserves or augments patient-provider relationships. To address unintended consequences, providers need to anticipate and recognize the potential adverse effects of AI-DDS systems and take appropriate actions to mitigate or address them. Determining how to integrate this education into an already crowded training space, whether extra certification or credentialing is required for providers to use AI-DDS, and how institutions can adapt to rapidly changing training needs on the frontlines remain open questions.

Domain 4: Desire to Use

Ultimately, the success of AI-DDS tools in optimizing health system performance is dependent on the desire of clinicians to incorporate these tools into routine practice. Indeed, the factors discussed in the previous three core domain sections are crucial variables in the “desire to use” calculus. Additionally, it is important to attend to psychological factors, such as addressing how these tools can facilitate professional fulfillment among providers, including mitigating burnout. The other indispensable element within the desire to use core domain is trust. Clinicians must be able to trust that these tools can deliver quality care outcomes for their patients without creating harm or error and align with both patients’ and clinicians’ ethics and values.

Professional Fulfillment

Continued alignment of AI technology with the element of the Quintuple Aim to improve the work-life balance of health care professionals remains an indispensable aspect of the potential success and adoption of AI tools. Health care providers report high levels of professional burnout, partially attributable to EHRs and related technologies (Melnick et al., 2020). Generally, for every one hour spent with patients, providers spend another two hours in front of their computers (Colligan et al., 2016). The exponential rise in digital work since the COVID-19 pandemic began has exacerbated burnout and amplified some providers’ deeply rooted reluctance to adopt new technologies (Lee et al., 2022). Successful AI-DDS tools will need to overcome this hesitancy and tap into positive sources of fulfillment for providers, including facilitating professional pride, autonomy, and security; reassessing or expanding their scope of practice; and augmenting their sense of proficiency and mastery.

A major contributing source of professional fulfillment is the strength of the patient-provider relationship. As discussed, AI-DDS tools hold the potential to greatly improve diagnostic accuracy and reduce medical errors. If seamlessly integrated, they could also unburden providers of rote tasks, enabling them to allocate more attention to engaging and establishing meaningful bonds with patients. However, by deferring certain higher-order data analysis and synthesis tasks – functions traditionally within the scope of providers – to an AI-based system, providers may experience a sense of detachment from their work. There also is concern that AI systems could erode the patient-provider relationship if patients begin to preferentially value the diagnostic recommendation of an AI system. While the personal qualities of interacting with a human might be preferred, some believe that AI’s ability to emulate human conversation (via chatbots or conversational agents) could eventually supplant providers (Goldhahn et al., 2018). However, it should be noted that this concern only applies to autonomous systems, and the assistive systems this paper focuses on involve, by definition, a health care professional in the workflow.

As observed in previous cycles of AI diffusion, potential threats to job security have negatively impacted provider receptivity to AI. Anxiety has been particularly acute in certain specialties, such as radiology, where in 2016, speculation arose that radiologists would be irrelevant in five years (Hinton, 2016). However, instead of replacing providers, AI in radiology has assumed an assistive role, supporting providers in sorting, highlighting, and prioritizing key findings that might otherwise be missed (Parakh, 2019). Therefore, to foster the adoption of AI-DDS, it is important to uphold the paradigm of augmented intelligence – in which these tools enhance human cognition, and the human is ultimately the arbiter of the action recommended. A key element of this is to empower providers to co-exist in an increasingly digital world through skill-building and instilling trust and transparency in AI systems. It is also important to reconsider expectations about provider roles and responsibilities. With the potential of increased practice efficiency, AI-DDS tools may expand provider bandwidth and purview. In this regard, providers could see patients in greater numbers, through multiple media, and in geographically distant areas.

Despite increasingly sophisticated AI algorithms, it is imperative to value the human qualities that can correct or counteract the shortcomings of AI systems. For instance, biased algorithms struggle with diagnosing melanoma in darker-skinned patients (Krueger, 2022). Having a provider carefully review and assess results produced or interpreted by an AI tool is essential to avoiding a missed or erroneous diagnosis in this case. Above all, provider involvement is critical in shared decision-making. Even in circumstances when an AI-DDS tool is highly accurate, providers are indispensable in helping patients select the right course of treatment based on their health goals and preferences.

Trust

Trust within human-AI-diagnostic partnerships requires a human willingness to be vulnerable to an AI system. Trust overall is a complex concept and trust in technology is equally complex (Lankton et al., 2015). A human user may distrust an AI-DDS tool whose recommendations go against their intuitive conclusions, especially if that person has professional training and significant experience. A user may also distrust AI-DDS recommendations if the user finds something faulty with the development process of the tool, such as inadequate testing or a lack of process transparency. Another potential impediment is concern that the tool’s development and use are motivated by profits over people, or that the tool is not aligned with professional values (Rodin and Madsbierg, 2021). Governance and standards setting for various AI tools, at both the individual clinician and the health care organization level, remain unclear, which also may inhibit trust. Drivers of trust, on the other hand, can include positive past experiences with a particular manufacturer or service provider, seamless interoperability of a new application with an existing suite of tools from a familiar and currently trusted company or product, or company reputation among the professional health care community (Adiekum et al., 2018; Benjamin, 2021; European Commission, 2019).

In this section of the paper, the authors focus on two significant sources of distrust with AI-DDS products as especially relevant to the adoption of AI-DDS by clinicians:

- bias (real or perceived) and

- liability.

Providers may be concerned that AI-DDS tools underperform in care for certain patients, especially marginalized populations, as AI trained on biased data can produce algorithms that reproduce these biases. However, it is critical to recognize that bias has multiple sources. It could arise, for example, if the data used to train the AI did not adequately represent all population subgroups that eventually will rely on the AI-DDS tool. It is crucial to ensure that training data are as inclusive and diverse as the intended patient populations, and that deficiencies in the training data are frankly disclosed. Using all-male training data for a tool intended for use only in males to detect a male health condition would not result in bias, but using all-male data would cause bias in tools intended for more general use. Other bias types could exist, for example, if AI tools are trained using real-world data incorporating systemic deficiencies in past health care. For example, if doctors in the past systematically underdiagnosed kidney disease in Black patients, the AI can “learn” that bias and then underdiagnose kidney disease in future Black patients. Thus, it is crucial to design and monitor AI tools with a lens toward preventing, detecting, and correcting bias and disclosing limitations of the resulting AI-DDS tools.
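One simple way to monitor for the kind of subgroup underperformance described above is to compare the tool’s sensitivity across patient groups. The sketch below is illustrative only: the record layout, the function names, and the 0.10 gap threshold are assumptions, and sensitivity is just one of several metrics a monitoring program might track.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Compute per-group sensitivity from (group, has_condition, flagged_by_tool) records.

    Only patients who truly have the condition contribute, since sensitivity
    is the share of true cases that the tool flags.
    """
    true_pos = defaultdict(int)
    false_neg = defaultdict(int)
    for group, has_condition, flagged in records:
        if not has_condition:
            continue
        if flagged:
            true_pos[group] += 1
        else:
            false_neg[group] += 1
    groups = set(true_pos) | set(false_neg)
    return {g: true_pos[g] / (true_pos[g] + false_neg[g]) for g in groups}


def underperforming_groups(sensitivities, max_gap=0.10):
    """List groups whose sensitivity trails the best-performing group by more than max_gap."""
    if not sensitivities:
        return []
    best = max(sensitivities.values())
    return sorted(g for g, s in sensitivities.items() if best - s > max_gap)
```

A check like this depends on the reference diagnoses being trustworthy. If the labels themselves reflect past underdiagnosis in a group, as in the kidney disease example, the measured sensitivity for that group can look acceptable while the bias persists, so the audit complements rather than replaces scrutiny of the training data.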

Complicating this issue is the fact that it can be very difficult to understand the inner workings of many AI-DDS algorithms. The terms “transparency” and “explainability” can have various technical meanings in different contexts, but this paper conceives them broadly to denote that the user of an AI tool, such as a health care professional, would be able to understand the underlying basis for its recommendations and how it arrived at them. It can be challenging, and at times impossible, to understand how an AI arrives at its output and to determine whether the tool in question problematically replicates social biases in its predictions. Furthermore, developers rarely reveal the underlying data sets used to train AI-DDS algorithms, making it difficult for providers to ascertain if a particular product is trained to reflect their patient populations. There may also be tension between the AI-DDS purchasing decisions made by hospital leadership and the providers affiliated with the institutions, with the perception that hospital leadership is “imposing” use of specific AI-DDS algorithms on the providers.

To foster trust among clinician users, a regulatory framework that prospectively aims to prevent injuries (see discussion in Tools to Promote Trust), coupled with mechanisms to assign accountability and compensate patients if problematic outcomes occur, must exist. Because AI-DDS tools sit at the intersection of technology and clinical practice, there are two potential avenues for compensating patient injuries through the American tort system. The first is medical malpractice, which implies that the ultimate responsibility for problematic clinical decisions rests with the provider. The second is product liability, which implies that the responsibility for problematic clinical decisions rests instead with the developer and manufacturer of the AI-DDS tool.

Currently, the dividing line appears to be whether an independent professional, such as an end-user provider, could review the recommendations from an AI tool and understand how it arrived at them. As commentators note:

The Cures Act parses the product/practice regulatory distinction as follows: Congress sees it as a medical practice issue (instead of a product regulatory issue) to make sure health care professionals safely apply CDS [clinical decision support] software recommendations that are amenable to independent professional review. In that situation, safe and effective use of CDS software is best left to clinicians and to their state practice regulators, institutional policies, and the medical profession. When CDS software is not intended to be independently reviewable by the health care provider at the point of care, there is no way for these bodies to police appropriate clinical use of the software. In that situation, the Cures Act tasks the FDA with overseeing its safety and effectiveness. Doing so has the side effect of exposing CDS software developers to a risk of product liability suits (Evans and Pasquale, 2022).

This distinction is a workable and sensible one, reflecting the limitations of the average provider’s abilities to evaluate new AI-DDS tools. It would be helpful to educate providers and hospital administrators on the dividing line between explainable CDS tools, which allow health care providers to understand and challenge the basis for algorithmic decision-making, on the one hand, and “black box” algorithms, for which the basis of algorithmic decision-making is obscure, on the other. This distinction carries implications for liability insofar as courts may hesitate to hold providers accountable for “black box” tools that precluded the possibility of provider control. Providers who hesitate to adopt AI-DDS out of fear of medical malpractice liability may find that distinction comforting and trust-building. For patient injuries arising when AI-DDS systems are in use, policymakers and courts may wish to consider shifting the balance of liability from the current norm (which focuses almost entirely on medical malpractice) to one that also includes product liability in situations where the AI tool, rather than the provider, appears primarily at fault. This shift could further encourage trust and desire to use these tools among providers and would incentivize developers to design algorithms and select training data with a view to minimizing poor outcomes.

Product liability generally arises when a product inflicts “injuries that result from poor design, failure to warn about risks, or manufacturing defects” (Maliha et al., 2021). Product liability, to date, has only been applied in limited and inconsistent fashion to software in general and to health care software in particular (Brown and Miller, 2014). For example, in Singh v. Edwards Lifesciences Corp, the court permitted a jury to award damages against a developer because its software resulted in a catheter malfunctioning (CaseText, 2009b). On the other hand, in Mracek v. Bryn Mawr Hospital, a court rejected via summary judgment the plaintiff’s argument that product liability should be imposed when the da Vinci surgical robot malfunctioned in the course of a radical prostatectomy (CaseText, 2009a). Further complicating the product liability landscape, the Supreme Court concluded in Riegel v. Medtronic that devices going through the FDA premarket approval process, as opposed to other market authorization pathways such as 510(k) clearance, can enjoy certain protection against state product liability cases (CaseText, 2008). Thus, available redress for patients can vary depending on the market authorization pathway for the specific AI tool. The conflicting and limited case law in this area suggests that there is room to explore an expanded product liability landscape for AI-DDS software. One clear point from prior case law is that clinicians will bear the brunt of liability for injuries that occur when using AI-DDS tools “off-label” (e.g., using a tool that warns it is only intended for use on one patient population on a different population). This fact may help incentivize AI tool developers to disclose limitations of their training data since doing so can shift liability to providers who venture beyond the tool’s intended use.

It is also important to note that opening the door to product liability suits does not foreclose the potential for medical malpractice suits against providers who use AI-DDS tools. A provider who relies on AI-DDS tools in good faith could still face medical malpractice liability if their actions fall below the generally accepted standard of care for use of such tools or if the AI-DDS tool is used “off-label”, e.g., using an AI-DDS tool developed for one type of MRI interpretation on another type of MRI image (Prince et al., 2019). Overall, courts are reluctant to excuse physician liability, allowing malpractice claims to proceed against physicians even in cases where:

- there was a mistake in the medical literature or an intake form;

- a pharmaceutical company failed to warn of a therapy’s adverse effect; or

- there were errors by system technicians or manufacturers (Maliha et al., 2021).

These cases, taken together, suggest that providers cannot simply point to an AI-DDS error as a shield from medical malpractice liability.

Eventually, widespread adoption of AI-DDS could open the door for medical malpractice liability for providers who do not incorporate these tools into their practice, i.e., “failure to use”. Physicians, specifically, open themselves to medical malpractice liability when they fail to deliver care at the level of a competent physician of their specialty (Price et al., 2019). Currently, the standard of care does not include relying on AI-DDS tools. But as more and more providers incorporate AI-DDS tools into their practice, that standard may shift. Once the use of AI-DDS is considered part of the standard of care, medical malpractice liability will create a strong incentive for all providers to rely on these tools, regardless of their personal views on appropriateness.

Tools to Promote Trust

Two of the most impactful mechanisms to promote trust in AI-DDS among clinicians (and thus improve their desire to use these tools) would be to further refine the existing regulatory landscape for AI-DDS tools and to promote collaborations between stakeholders. This section of the paper explores avenues to promote trust.

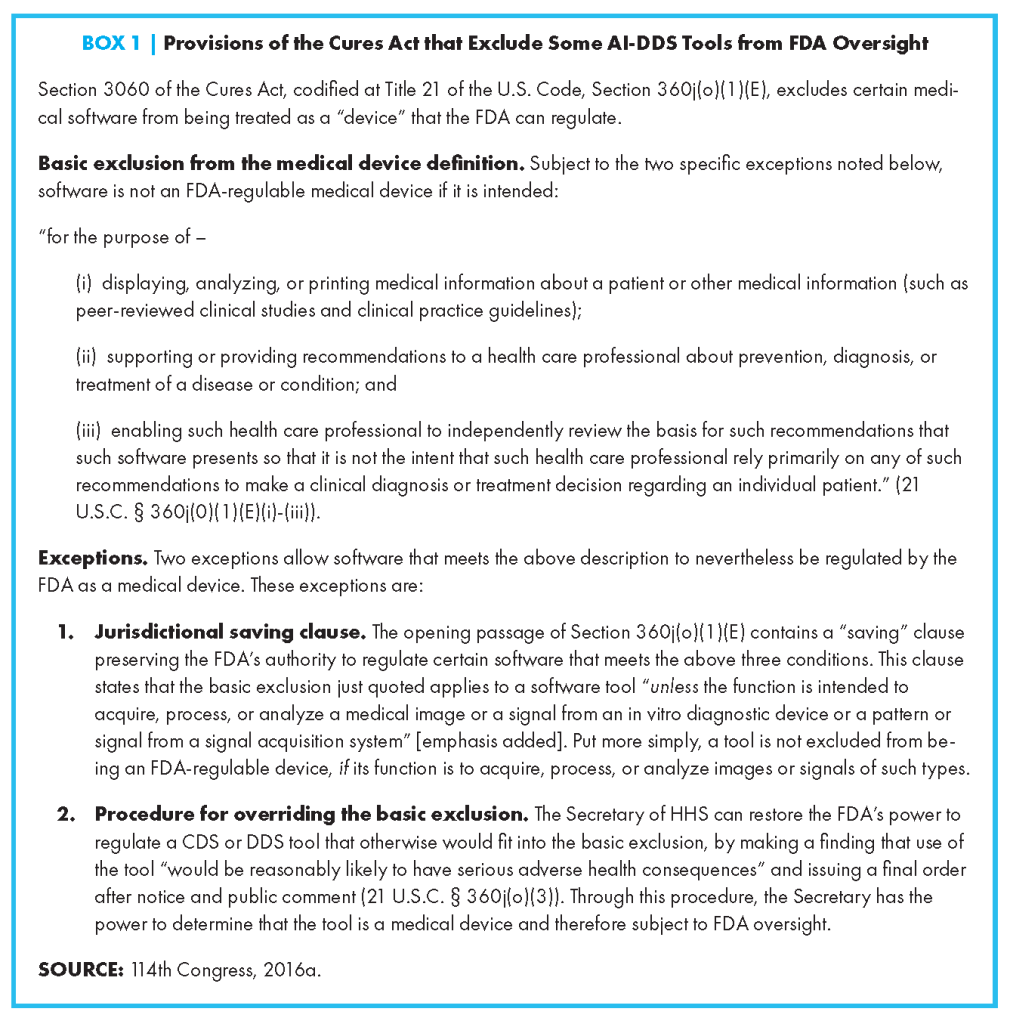

To minimize concerns about liability, nuanced, thoughtful regulation and governance from all levels of the U.S. government – federal, state, and local – can reassure providers that they can trust available AI-DDS tools and move forward with implementation. A key factor affecting clinicians’ willingness to adopt AI-DDS tools is likely whether the tools will receive a rigorous, data-driven review of safety and effectiveness by the FDA before moving into clinical use. A potential concern is that some, but not necessarily all, AI-DDS software is subject to FDA medical device regulation under the Cures Act. It remains difficult for providers to intuit whether a given type of AI-DDS tool is or is not likely to have received oversight under FDA’s medical device regulations. Uncertainty about which tools will receive FDA oversight – and which marketing authorization process the FDA may require (e.g., premarket approval, 510(k), or de novo classification) – likely fuels provider discomfort with using AI-DDS tools.

A key source of this uncertainty, at present, is that the Cures Act addresses the scope of the FDA’s power to regulate various types of medical software but does not itself define or use the terms DDS or CDS software (114th Congress, 2016a; 21 U.S. Code § 360j, 2017). As used in this paper, AI-DDS tools broadly refer to computer-based tools, driven by AI algorithms, that use clinical knowledge and patient-specific health information to inform health care providers’ diagnostic decision-making processes (see Table 1), with DDS tools being a subset of CDS tools more generally. This paper thus follows the definition provided by the Office of the National Coordinator for Health Information Technology (ONC), which stresses that CDS tools “provide … knowledge and person-specific information, intelligently filtered or presented at appropriate times, to enhance health and health care” (ONC, 2018). The FDA has used this ONC definition when discussing how CDS software is broadly understood (FDA, 2019b). Central to the ONC definition, and this paper, is the notion that DDS and CDS tools combine general medical “knowledge” with patient-specific information to produce recommended diagnoses. With AI-DDS systems, that knowledge can include inferences generated internally by an AI/ML algorithm.

The Cures Act authorizes the FDA to regulate only some of the software that might fit into the broader, more common conception of AI-DDS systems just described. Thus, FDA lacks authority to regulate all of the tools that clinicians might think of as being DDS/CDS tools. The Cures Act expressly excludes five categories of medical software from the definition of a “device” that FDA can regulate (114th Congress, 2016a [21 U.S.C. § 360j(o)(1), 2017]). One of these exclusions places restrictions on FDA’s power to regulate CDS and DDS software (114th Congress, 2016a [21 U.S.C. § 360j(o)(1)(E)]). Box 1 shows the specific wording of the relevant Cures Act exclusion.

Looking at the basic exclusion in Box 1, the first two conditions, (i) and (ii), describe CDS and DDS software without using those names. The third condition, shown at (iii), bears on the concept this paper refers to as explainability, again without using that term. When all three conditions are met, this passage of the Cures Act creates a potential exclusion from FDA regulation for CDS/DDS software that meets the criterion for explainability set out in condition (iii) of Box 1. This exclusion, however, is subject to the two exceptions shown at the bottom of Box 1.

The first exception – the saving clause – confirms the FDA’s power to regulate many types of software whose function supports diagnostic testing, such as software used in the bioinformatics pipeline for genomic testing. Before the Cures Act, FDA’s medical device authority included oversight covering both in vitro diagnostic devices (which support clinical laboratory testing of biospecimens) and in vivo devices (such as X-rays and MRI machines that produce images of tissues within a patient’s body). FDA has long regulated software embedded in diagnostic hardware devices, for example, software internal to sequencing analyzers and MRI machines. The saving clause confirms FDA’s power to regulate “stand-alone” diagnostic software that is not necessarily part of a hardware device but processes signals from in vitro and in vivo testing devices.

This power is crucial in light of the modern trend for many clinical laboratories to use third-party software service providers and vendors for data analysis supporting complex diagnostic tests, such as genomic tests (Curnutte et al., 2014). In vitro diagnostic testing by clinical laboratories is subject to the Clinical Laboratory Improvement Amendments of 1988 (CLIA) regulations (100th Congress, 1988). The CLIA framework focuses on the quality of clinical laboratory services but does not provide an external, data-driven regulatory review of the safety and effectiveness of tests used in providing those services, nor does it evaluate the software laboratories use when analyzing and interpreting test results. FDA’s authority to regulate stand-alone diagnostic software positions FDA to oversee clinical laboratory software, even in situations where FDA exercises discretion and declines to regulate an underlying laboratory-developed test (Evans et al., 2020). In a 2019 draft guidance document, circulated for comment purposes only, the FDA noted that “bioinformatics products used to process high volume ‘omics’ data (e.g., genomics, proteomics, metabolomics) process a signal from an in vitro diagnostic (IVD) and are generally not considered to be CDS” tools (FDA, 2019b). The saving clause clarifies that FDA can regulate such software, even in situations where it might technically be considered CDS software falling within the basic exclusion in Box 1 (114th Congress, 2016a [21 U.S.C. § 360j(o)(1)(E)]).

Much of the AI-DDS software providers use in clinical health care settings would not fall under the saving clause (see Box 1), which seems directed at software processing signals from diagnostic devices as part of the workflow for producing finished diagnostic test reports and medical images. However, there is some ambiguity. An example would be an AI-DDS tool that analyzes several of a patient’s gene variants along with the patient’s reported symptoms, clinical observations, treatment history, and environmental exposures to recommend a diagnosis to a clinician. It is unclear if the fact that the tool processes gene variant data means that it is “processing a signal from an IVD device” and thus FDA-regulated, or if the saving clause only applies when the signal is directly fed to the software as part of the clinical laboratory workflow. Without knowing how the FDA interprets the breadth of the saving clause, it is hard for clinicians to understand what is and is not regulated.

Assuming the saving clause does not apply, AI-DDS tools are generally excluded from FDA regulation if they meet all three of the conditions listed at (i)-(iii) in Box 1. The first two conditions are fairly straightforward, but it is still not clear how the FDA plans to assess whether the third condition, bearing on the concept of explainability, has been met. How, precisely, the FDA will decide whether an AI-DDS tool is “intended” to be “for the purpose of” “enabling [a] health care professional to independently review the basis for [its] recommendations” (see Box 1) is unknown. The FDA’s regulation on the “Meaning of intended uses” offers insight into the range of direct and circumstantial evidence the agency can consider when assessing objective intent (FDA, 2017b [21 C.F.R. § 801.4]). Yet how the agency will apply those principles in the specific context of AI/ML software tools is not clear.

Without greater clarity on these matters, clinicians lack a sense of whether a given type of AI-DDS tool usually is, or usually is not, subject to FDA oversight or what FDA’s oversight process entails. Almost six years after the Cures Act, FDA’s approach for regulating AI/ML CDS/DDS software remains a work in progress, leaving uncertainties that can erode clinicians’ confidence when using these tools. Through two rounds of draft guidance (in 2017 and 2019), the FDA solicited public comments to clarify its approach to regulating CDS/DDS tools. A final guidance on Clinical Decision Support Software appears on the list of “prioritized device guidance documents the FDA intends to publish during FY2022” (October 1, 2021 – September 30, 2022) (FDA, 2021c). As this paper went to press in September 2022, the final guidance was not yet available, but the authors hope it may clarify these and other unresolved questions around the regulation of CDS/DDS tools.

Unfortunately, guidance documents – whether draft or final – have no binding legal effect and do not establish clear, enforceable legal rights and duties on which software developers, clinicians, state regulators, and members of the public can rely. There is fairly wide scholarly agreement that the use of guidance as a regulatory tool can be appropriate for emerging technologies where knowledge is rapidly evolving and flexibility is warranted, but there can be long-term costs when agencies choose to rely on guidance and voluntary compliance instead of promulgating enforceable regulations (Wu, 2011; Cortez, 2014). FDA’s Digital Innovation Action Plan (FDA, 2017a; Gottlieb, 2017) and its Digital Health Software Precertification (Pre-Cert) Program (FDA, 2021b) both acknowledge that its traditional premarket review process for moderate and higher-risk devices is not well suited for “the faster iterative design, development, and type of validation used for software-based medical technologies” (FDA, 2017a). The FDA’s 2021 AI/ML Action Plan envisions incorporating ongoing post-marketing monitoring and updating of software tools after they enter clinical use (FDA, 2021a). This may leave health care providers in the uncomfortable position of using tools that may be modified even after the FDA clears them for clinical use and potentially facing liability if patient injuries occur. Also, it implies that vendors and developers of AI/ML tools will need access to real-world clinical health care data to support ongoing monitoring of how the tools perform in actual clinical use.