Empowering 8 Billion Minds: Enabling Better Mental Health for All via the Ethical Adoption of Technologies

Foreword

The Global Future Council on Neurotechnologies represents a diverse group of experts drawn from psychiatry, psychology, brain science, technology, advocacy and the public sector. They are passionate about stimulating the global conversation on mental healthcare gaps and the best ways to address those gaps through emerging technologies.

Mental health disorders are among the leading causes of morbidity and mortality worldwide and could cost the global economy some $16 trillion by 2030. [31] Today, an estimated 300 million people worldwide suffer from depression alone, while suicide is the second leading cause of death among young people. Most mental illnesses are treatable. Everyone has a right to good mental health, and yet, worldwide, some estimates suggest that around two-thirds of people experiencing a mental health challenge go unsupported. Even in wealthy nations such as the US and the UK, over 50% of people may receive no care. Such large diagnostic and care gaps call urgently for novel solutions.

The rapid spread of smartphones, wearable sensors and cloud-based deep-learning artificial intelligence (AI) tools offers new opportunities for scaling access to mental healthcare. The goal of this report is to spotlight, through expert knowledge and case studies, the most promising technologies available today to meet gaps in mental healthcare as well as forthcoming innovations that may transform future care. The report also proposes an initial ethical framework around issues such as privacy, trust and governance, which may be holding back the scaling of technology-driven initiatives in mental health settings. This report is not a systematic review of the effectiveness of technology nor is it a primer on its implementation into global health systems.

Technology is a tool that can allow us to achieve greater scale than ever in every walk of life, but human touch and compassion remain crucial for healing the mind. We are not advocating that machines should replace psychotherapists; our wish is for the adoption of technology as a supplement, in a fair, empathetic and evidence-based manner, to ensure that everyone everywhere facing a serious mental health challenge can get the help they seek.

Executive Summary

Imagine this: Ajay lives in India. In his teens he experienced an episode of depression. So when, as a new undergraduate, he was offered the chance to sign up for a mental healthcare service, he was keen to do so. Ajay chose a service that used mobile phone and internet technologies to enable him to carefully manage his personal information. Ajay would later develop clinical depression, but he spotted that something wasn’t right early on when the feedback from his mental healthcare app highlighted changes in his sociability. (He was sending fewer messages and leaving his room only to go to campus.) Shortly thereafter, he received a message on his phone inviting him to get in touch with a mental health therapist; the message also offered a choice of channels through which he could get in touch.

Now in his mid-20s, Ajay has his depression well under control. He has learned to recognize when he’s too anxious and beginning to feel low, and he can practise the techniques he has learned using online tools, as well as easily accessing high-quality advice. His progress through the rare depressive episodes he still experiences is carefully tracked. If he does not respond to the initial, self-care treatment prescribed, he can be quickly referred to a medical professional. Ajay’s experience is replicated across the world in low-, middle- and high-income countries. Similar technology-supported mental illness prevention, prediction and treatment services are available to all.

Now back to reality. And today’s reality could not be more different.

A huge void – caused by the stigma that holds people back from seeking help, and by the severe under-resourcing of mental healthcare – leaves more than half of those who experience mental ill health without any treatment. [1]

Even in the wealthiest countries, waiting times for expert appointments and counseling extend to months. (One UK study of more than 500 adults found that a quarter of individuals with mental health issues waited more than three months to see an NHS mental health specialist; 6% had waited at least a year.) [2]

And in the poorest areas, isolation, financial hardship and an inability to find even limited treatment is commonplace.

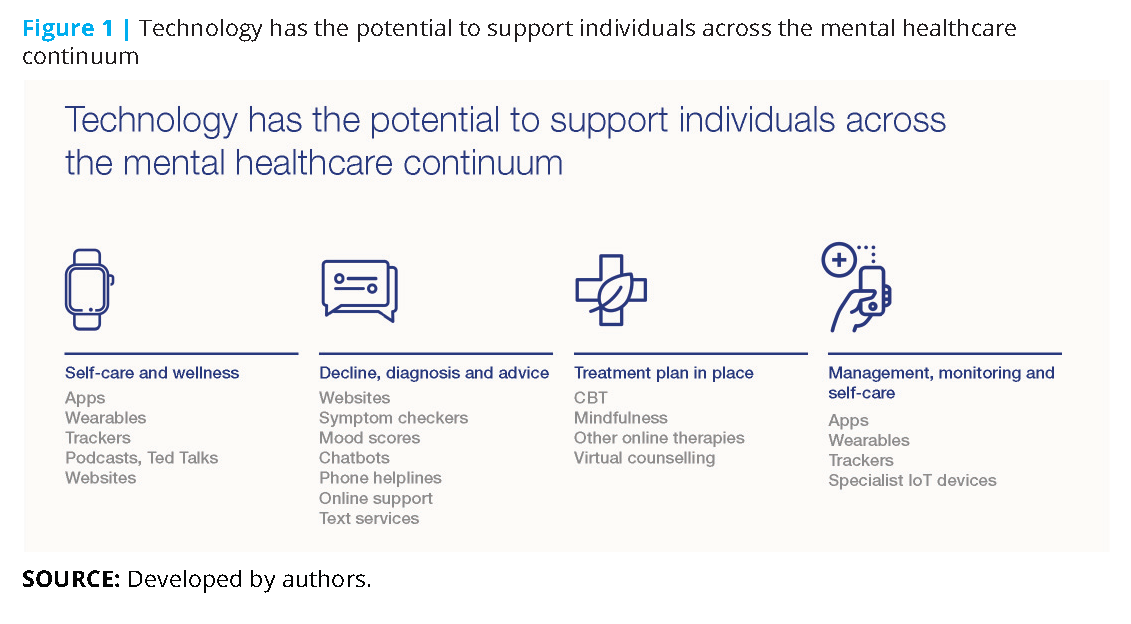

However, an experience such as Ajay’s is not as much of a pipe dream as it might appear. The range of new technologies within the mental healthcare sector (and the rate at which these are being developed) is staggering. These innovations span the whole spectrum of mental health, from self-care (one of the fastest-growing markets for apps) to self-assessment tools (there are hundreds of psychological tests available online) through to developments such as electroencephalography (EEG), which measures brain activity and offers the promise of early diagnosis (see Figure 1).

This report by the Global Future Council on Neurotechnologies explores the evolving application of technology in mental healthcare and examines the ethical considerations that surround its use. It finds that:

- Technology is already being widely used in mental healthcare.

- While the efficacy of many of these technologies remains to be confirmed by research, such technologies include a growing number of lower-risk, assistive options that show great promise in being able to provide support at scale. Crisis counseling via text messaging, digital cognitive behavioural therapy and tele-psychiatry are examples with an increasing evidence base to support their wider use.

- The unique power of these technology-enabled services is their scalability and low marginal cost.

- Tech-based care is location-agnostic, with the ability to offer “anytime, anywhere, any way” access via today’s most widely owned technology-based tools (mobile phones and the internet).

- AI and machine learning, and the “big data” sets they offer, are starting to provide new insights into disease subtypes and are helping to optimize screening and care pathways.

- Technological advances in the fields of digital phenotyping, immersive technologies (including extended reality) and digital medicine – among many others – will bring further opportunities in the future.

Given these findings, the Council urges governments, policy-makers, business leaders and practitioners to step up and address the barriers keeping effective treatments from those who need them. Primarily, these barriers are ethical considerations and a lack of better, evidence-based research.

The greater use of new and existing technologies in this space raises a complex web of ethical dilemmas, particularly in the areas of data privacy and individuals’ rights. Ultimately, we believe that these concerns can be addressed if decision-makers and other stakeholders address how to:

- Focus on transparency and security to build trust by shifting the discussion from one of data privacy to one of transparency (how and why data is used and by whom), control (by the individuals themselves) and security (to guard against any misuse). The voices of people with lived experience are paramount. Engaging users in how their personal information is collected and used will be critical.

- Establish protocols for the use of big data collected through technology-enabled healthcare to facilitate a better understanding of mental health, what it is, what causes mental ill health and what treatments are effective. Involving those whose data is being collected and used, and ensuring transparency in the giving of consent, is paramount.

- Intervene more proactively on mental health issues, addressing the stigma that surrounds the topic while creating a more empathetic mental healthcare environment.

- Use technology to promote broad and equal access to treatment, thus combatting rather than exacerbating the digital divide and promoting equality.

- Make pragmatic progress, balancing the necessity of safety and efficacy with the need to address treatment gaps quickly.

The need for mental healthcare is increasing and healthcare resources are stretched. Technology is presenting solutions that could improve – and indeed save – the lives of millions of people. With that opportunity in mind, this report also calls for eight actions:

- Create a governance structure to support the broad and ethical use of new technology in mental healthcare (including the collection and use of big data), ensuring that innovations meet the five ethical imperatives listed above.

- Develop regulation that is grounded in human rights law, and nimble enough to enable and encourage innovation while keeping pace with technological advances when it comes to ensuring safety and efficacy.

- Embed responsible practice into new technology designs to ensure the technologies being developed for mental healthcare have people’s best interests at their core, with a primary focus on those with lived experience.

- Adopt a “test and learn” approach in implementing technology-led mental healthcare services in ways that allow continual assessment and improvement and that flag unintended consequences quickly.

- Exploit the advantages of scale by deploying innovations over larger communities with their consent.

- Design in measurement and agree on unified metrics to ensure efficacy and to inform the “test and learn” approach.

- Build technology solutions that can be sustained (in terms of affordability and maintenance) over time.

- Prioritize low-income communities and countries as they are the most underserved today and most likely to see tangible benefits at relatively low costs.

Why mental healthcare matters

The burden of mental illness, in terms of human suffering, is both catastrophic and growing. Current estimates suggest that around one in four adults and young people experience mental ill health. [3] Higher rates have been reported among younger people, women, those who experience man-made trauma (war, refugee crises, violence), environmental trauma (flooding, earthquakes) and those who identify as being LGBTQ. [4]

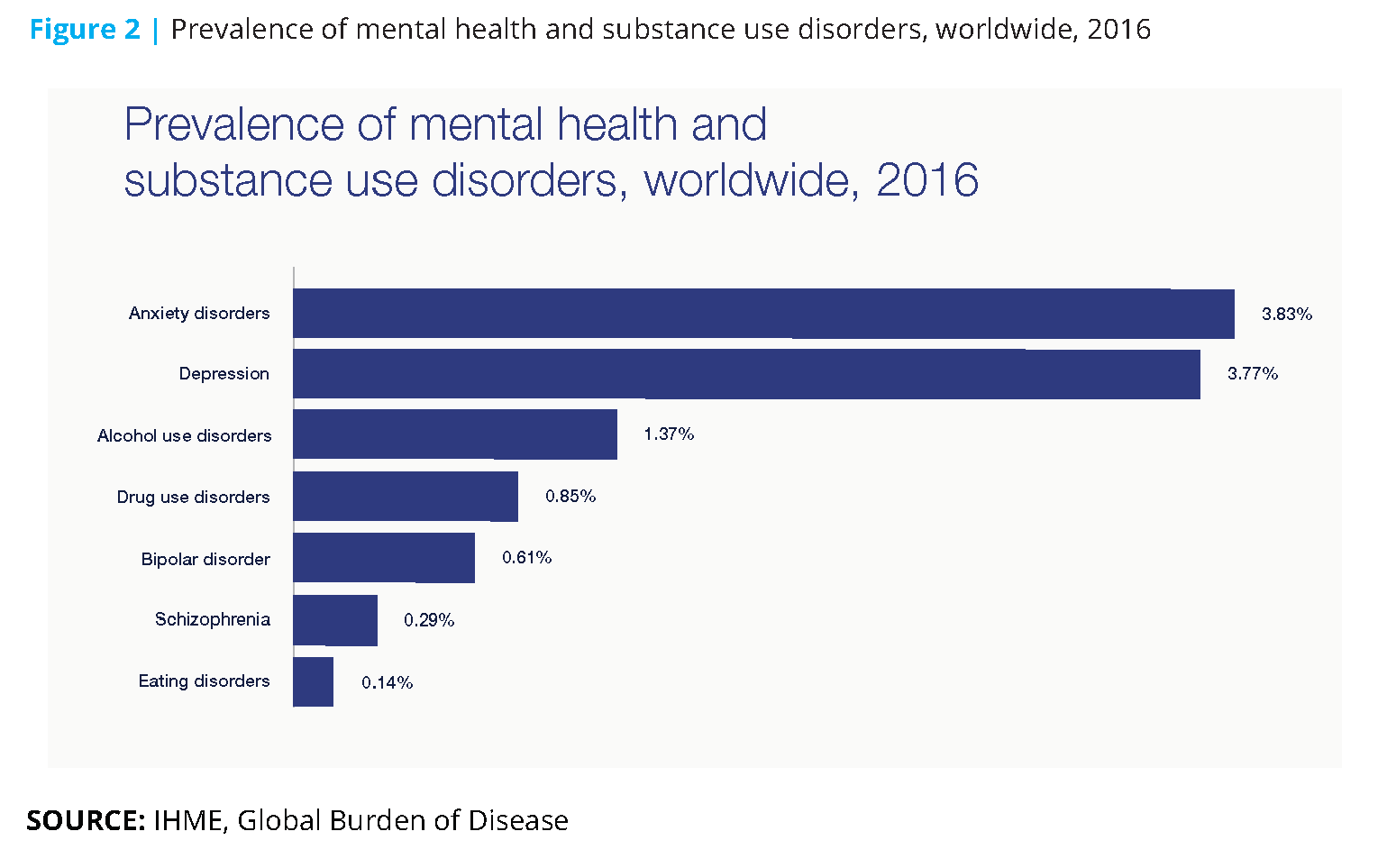

Depression, which affects 300 million people across the world, is now the leading cause of disability worldwide. [5] And every 40 seconds someone dies by suicide, resulting in up to 900,000 deaths each year. [6] Suicide remains the second leading cause of death among those between the ages of 15 and 29, with 79% of suicides occurring in low- and middle-income countries in 2016. [7]

By 2030, mental health problems, including depression, anxiety and schizophrenia, will likely be the leading cause of mortality and morbidity in the world. [8]

The impact of mental illness is compounded by the fact that it affects, and is in turn affected by, other illnesses. It can be a consequence of conditions such as HIV/AIDS, diabetes, heart disease or cancer. The life expectancy of people with severe mental illness is 20 years less than that of those who do not experience mental illness. [9] Mental illness and many other illnesses also share common risk factors, such as sedentary behaviour and harmful use of alcohol.

In addition, research indicates that an individual’s mental ill health affects the well-being of caregivers, families, friends and colleagues. [10] Some 85% of workers in one recent survey said that someone close to them had been affected by mental health challenges. Nine in ten of them reported that they had been affected by mental health challenges in some way.

The economic cost of mental illness is also staggering. Along with cardiovascular diseases, mental illnesses are the top drivers of lost productivity. [11]

Almost two-thirds of lost workdays in the US are caused by mental illness. In the 36 largest countries where treatment is not accessible to everyone, anxiety and depression have resulted in more than 12 billion days of lost productivity. [12] Inaction on mental illness is expected to cost the global economy $16 trillion in the 20-year period ending in 2030 (see Figure 2). [13]

Barriers preventing effective mental healthcare today

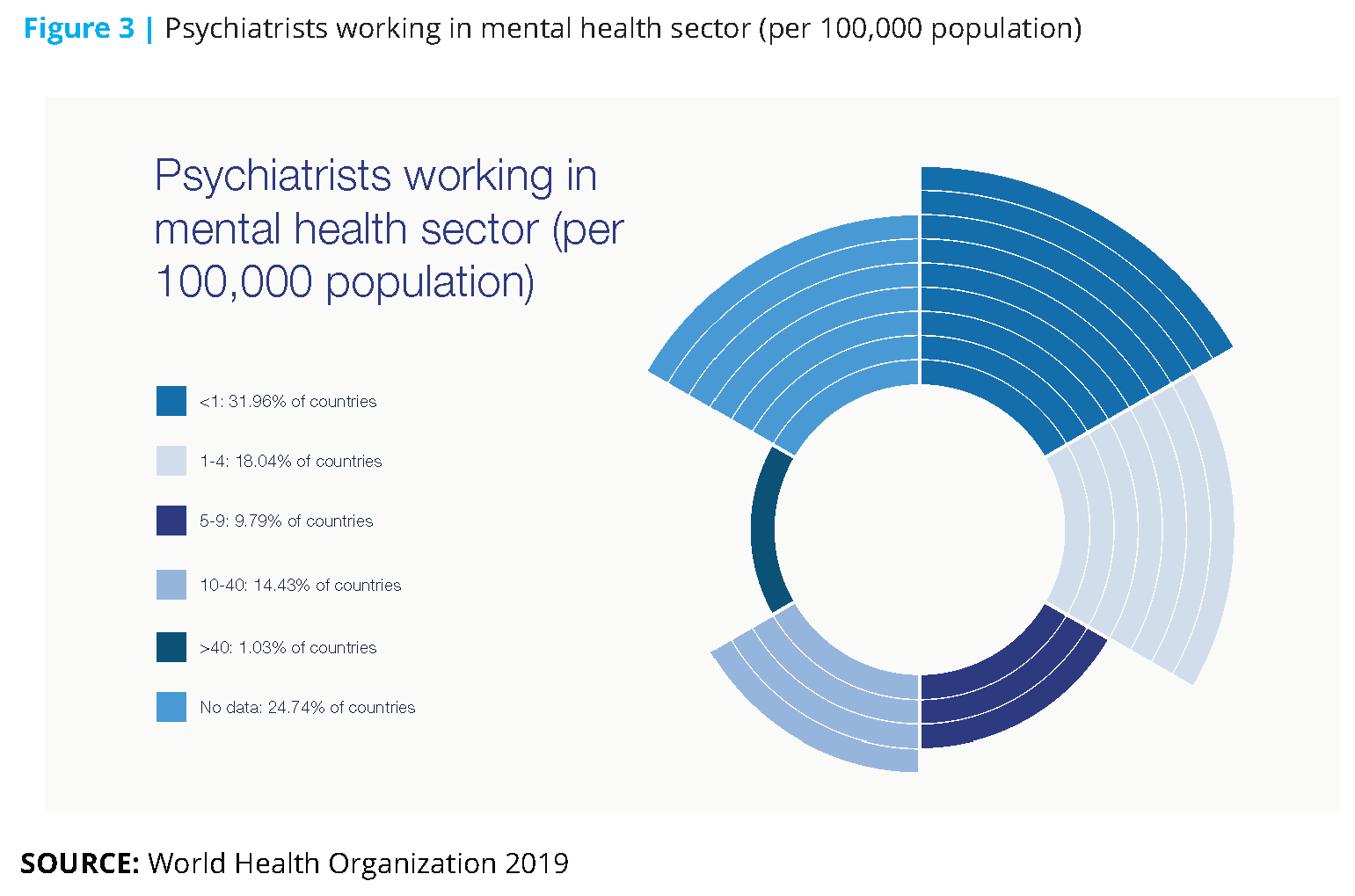

Most mental illnesses are treatable. Most suicides are preventable. And yet, worldwide, more than half of those experiencing a mental health challenge go untreated (see Figure 3). [14] Why is this the case?

The causes of the treatment gap are complex, but the following represent the most significant barriers:

- A persistent stigma, which compels people to conceal their mental illness, or even leads them to fail to recognize that they – or someone close to them – have a mental health issue. This stigma is globally endemic. Rooted in history, shrouded in misunderstanding and fear, and even associated with witchcraft, mental illness has long been a subject that few people have been willing to speak about openly. Signs point to this stigma slowly lifting. For example, informed awareness is increasing. In the UK, the number of published articles on the topic of mental health increased by a factor of seven over the five-year period ending in 2018. In one survey, 82% of UK workers said it was easier to talk about mental health now than in previous years. And high-profile mental-health advocates (including Prince William, the Duke of Cambridge) and charities are also bringing mental health into the open. [15] But it’s clear that there is a very long way to go. Without doubt, the stigma associated with mental illness is one of the underlying reasons why the symptoms of mental ill health are not well understood and why those with mental health problems are treated differently from those with physical health conditions.

- The variety in type and frequency of mental ill health symptoms and illnesses. Mental health is not black and white. Mental health exists on a spectrum from good health through to mild and then more severe illnesses. Episodes of poorer mental health vary from being time-limited to chronic. And some areas of mental health are not yet well understood. The difficulty of pinpointing the right time to intervene, coupled with the challenge of making a good diagnosis, and the difficulty of identifying an appropriate treatment regime, leads in turn to …

- Lack of consensus about what constitutes a mental health disorder among both world experts and practising clinicians, as evidenced by the conflicting range of published estimates on its prevalence and by poor diagnostic accuracy for some conditions.

- Chronic and widespread under-investment in mental health services, due in part to the factors above. It comes as no surprise that this barrier manifests most acutely in low- to middle-income countries, where the risk of experiencing mental ill health is also greater than in wealthier countries.

- Lack of qualified professionals. In almost half of the world’s countries, the ratio of psychiatrists to people is 4.0 or less to every 100,000. In low-income countries, the ratio is 0.1 to every 100,000. [16] Similarly, there are low levels of trained community health workers or health workers.

- The magnitude of the problem. As awareness around mental health grows, and as countries begin to tackle the stigma, the extent of the mental health challenge has become more obvious, and the need for action more urgent. Yet our increasing awareness has in part had the negative effect of delaying our response, as governments and business leaders struggle to home in on actions that are effective, sustainable, accessible and scalable.

Those who experience mental ill health are vital members of the economy and of society. One UK study found that those with mental health conditions contributed £226 billion to the UK economy in 2015, around 12% of the country’s economic output – despite all of the barriers listed above. [17]

In any case, access to mental healthcare is a human right (enshrined in the UN Convention on the Rights of Persons with Disabilities). Without it, people can struggle to secure the necessary basics for survival: clothing, food, shelter, and money to sustain those essentials. [18] In higher-income countries, unemployment is greater among those with mental health conditions; those experiencing mental ill health are also more likely to lose their jobs than the general population. [19] In lower-income countries, these individuals may live in extreme poverty, and in too many countries they are subject to abuse, discrimination and even threats to their liberty.

Improving mental healthcare can only enhance the quality of life of those affected, and the economic potential of the workforce.

Technology is already being widely used in mental healthcare

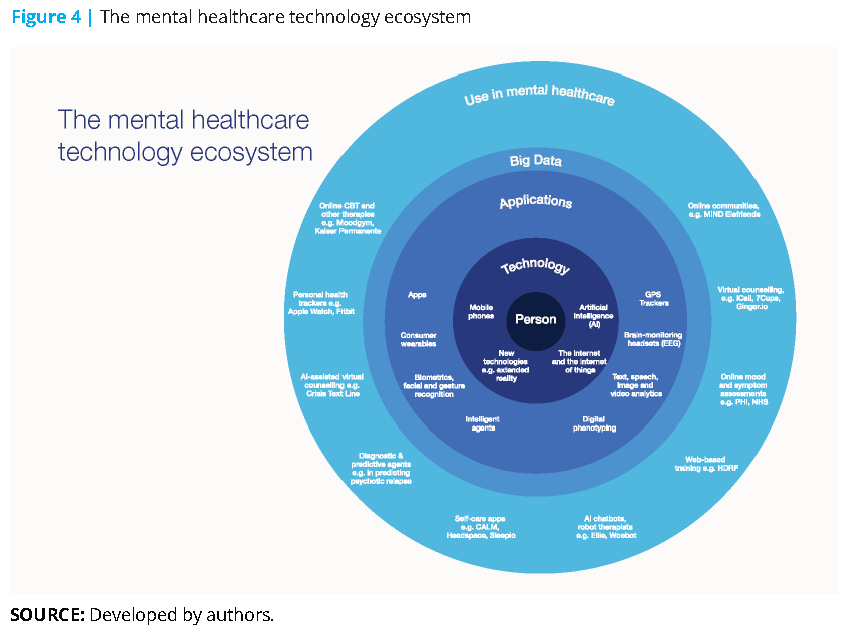

The global digital health market has been valued at $118 billion worldwide, with mental health being one of the fastest-growing sectors. [20] Tech start-ups in the mental health space have seen funding triple in the past five years, reaching a record $602 million in 2018. In short, technology is already being widely used in mental healthcare. And consumers are open to this: 65% of workers in a UK study (and 75% of the youngest workers) were positive about the role of technology in managing their mental health (see Figure 4). [21]

Three interdependent technologies in particular are having a tangible impact on mental healthcare: mobile technology, the internet and AI (including machine learning and big data).

Mobile technology is fundamentally a story of connection. Some 65% of the world’s population have access to a mobile phone, a figure that has increased by 61% over the past 10 years. [22]

Internet technology: The number of internet users has more than tripled in just over a decade, rising from just over 1 billion in 2005 to an estimated 3.8 billion in 2018. Mobile access to the internet is also rapidly increasing, up 160% over nine years.

Access to both phone and internet has enabled the growth of mental health apps known as mobile medical apps (MMAs). MMAs are a fast-growing segment of the app market, with more than 10,000 consumer-targeted apps available to download. Apple identified self-care apps (such as CALM or Headspace) as their App Trend of the Year in 2018; consumer spending on the top five mindfulness apps grew 130% year on year in 2018. [23] In one study, 77% believed it was important to actively manage your mental health; MMAs clearly resonate with consumers as a tool to support such efforts. [24] However, while they certainly hold potential, there is a need for more research to confirm their efficacy.

The internet of things (IoT) – made up of devices and sensors that are connected to each other and to the internet – is driving a new industry within the general healthcare sector. From simple wearables that let individuals track their heart rate and step count to devices that connect the user to healthcare systems and professionals, this sector is projected to be first in the top 10 industries for IoT app development by 2020. [25]

Artificial Intelligence (AI) is becoming a fundamental part of business and healthcare. Through approaches such as deep learning, AI uses data models and algorithms to learn from the world around it. Predictive systems find relationships between points in historical datasets and their eventual outcomes. In turn, they help predict future results. Expert systems use knowledge of a topic (medicine, symptoms) and apply this knowledge when presented with a set of facts (such as symptoms), learning from each new experience. Audio, visual and signal processing similarly helps interpret clues from sound, pictures and other inputs. Natural language processing (NLP) focuses on analysing human use of language to interpret sentiment and feeling.

These three technologies are being used to:

- Monitor and measure behaviours and (self-reported) feelings outside the doctor’s office

- Deploy interventions by phone and web

- Enhance the effectiveness of mental health workers

- Build relevant data lakes

Measurement of behaviours and (self-reported) feelings: Blood pressure, pulse and body temperature are the vital biomarkers used to assess physical health. In mental health, such clear, consistent vital signs are absent. But technology can help.

There are hundreds of tried-and-tested rating scales to measure mood, anxiety, sleep and memory available online. Many can point to a possible diagnosis or indicate the severity of a condition while not going as far as providing a specific diagnosis.

Tracking mobile phone activity may also prove valuable. The time between a phone’s active and inactive periods can provide a crude indicator of insomnia (and thus possibly an early-warning sign for many related conditions). Location services can indicate whether someone is straying from their regular route to avoid an area where a trauma occurred, or if someone is not going out at all. Significant shifts in call volumes can signal someone looking for help or withdrawing from contact during the early stages of depression.
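As a rough illustration of the first idea above, the sketch below treats the longest gap between phone-activity timestamps in a night as a crude proxy for rest. Everything in it is an assumption made for illustration: the function names, the sample timestamps and the six-hour threshold are hypothetical, and none of it represents a validated clinical measure or any particular app's method.

```python
from datetime import datetime, timedelta


def longest_inactive_gap(events):
    """Return the longest gap between consecutive activity timestamps."""
    ordered = sorted(events)
    gaps = [later - earlier for earlier, later in zip(ordered, ordered[1:])]
    return max(gaps, default=timedelta(0))


def flag_short_rest(nightly_events, min_rest=timedelta(hours=6)):
    """Flag each night whose longest inactive gap is shorter than min_rest.

    min_rest is an illustrative threshold, not a clinical cut-off.
    """
    return {
        night: longest_inactive_gap(events) < min_rest
        for night, events in nightly_events.items()
    }


# Hypothetical timestamps of phone use for two nights.
nights = {
    "night-1": [datetime(2019, 3, 1, 23, 0), datetime(2019, 3, 2, 7, 0)],
    "night-2": [
        datetime(2019, 3, 2, 23, 30),
        datetime(2019, 3, 3, 2, 0),
        datetime(2019, 3, 3, 4, 30),
        datetime(2019, 3, 3, 7, 0),
    ],
}
flags = flag_short_rest(nights)
```

In this made-up data the first night contains one uninterrupted eight-hour gap, while the second is broken into gaps of two and a half hours, so only the second is flagged. A signal like this would at most prompt a conversation with a professional; it could not stand in for a diagnosis.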

IoT-connected wearables and apps can track signs (for example, sleeplessness and raised heart rates) that could be shared with trusted professionals. Such real-time data can inform decisions about treatment. Professionals can also use this data to track the progress of treatment, monitor an individual’s response to medication, and determine when to intervene if an appointment is not scheduled in the immediate future (see Figure 5).

NLP and machine learning have made it possible to interpret someone’s tone, inflection, energy and pitch during phone calls, giving caregivers additional information (beyond the content of the call) on which to base a diagnosis or a progress assessment. US-based firm Cogito worked with the US Department of Veterans Affairs to pilot an app that, with the veterans’ permission, monitored mobile phone conversations in this manner to provide insights into their emotional and psychological state. Similar technologies have been used to build a predictive model to detect the onset of psychosis. [26]

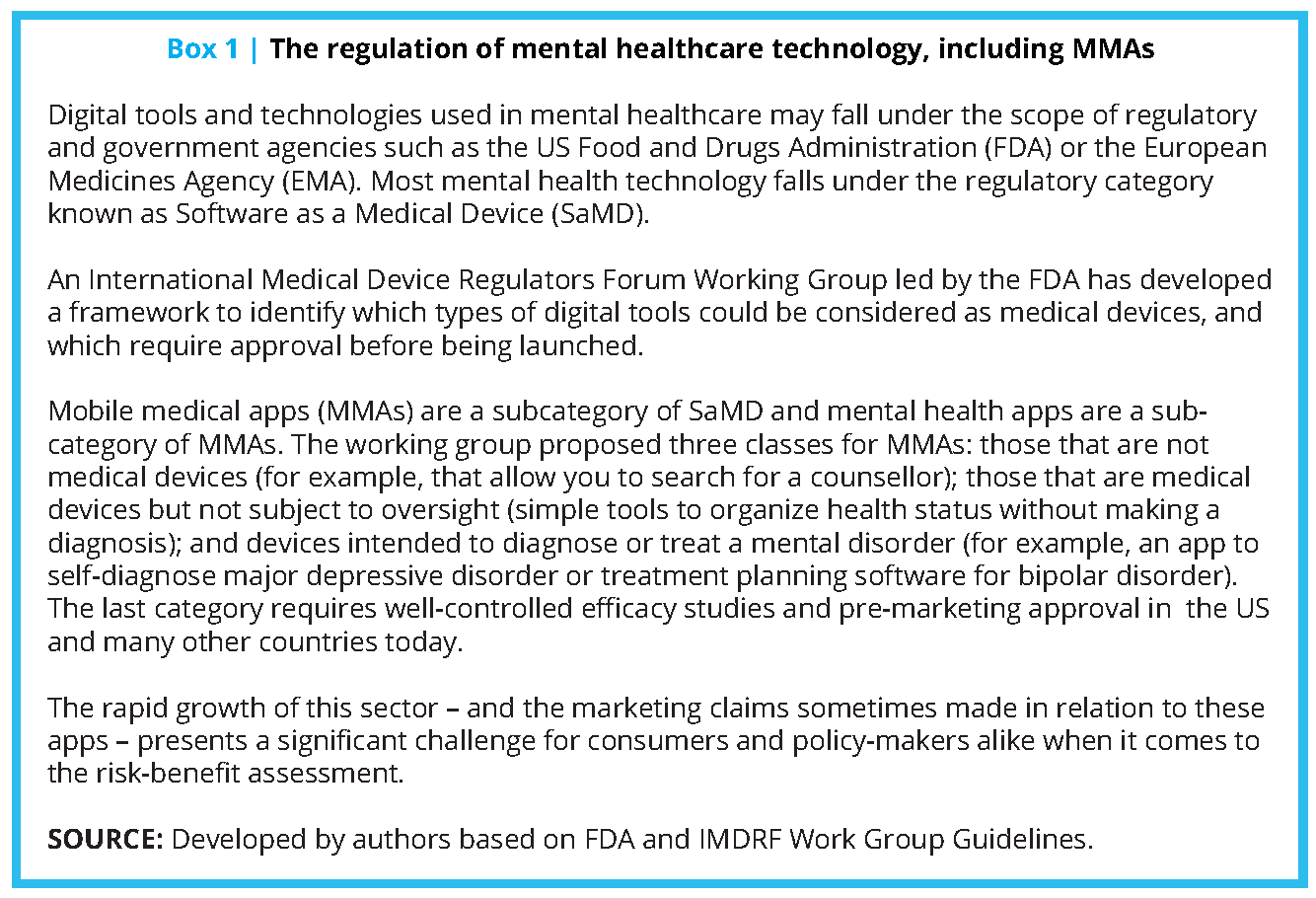

The absence of a globally harmonized, consensus-based ethical and big-data framework has led each of these companies to adopt its own consent, transparency, privacy and data policies. The International Medical Device Regulators Forum (IMDRF) working group and US FDA have published guidelines on how medical apps and medical software should be regulated and what types of outcomes are needed for pre-market approval (see Box 1).

Case Studies

The cases we selected are among the best illustrations of how mental health technologies can be scaled. We invited each organization to submit a short write-up; most are relatively low risk in that they use assistive technologies for scaling triaging, counseling or therapy using well-established clinical principles. They have also either published their initial outcomes or plan to do so, and these findings are available on each organization’s website. As a result, we feel they are good role models to consider for adoption in other settings. They should, however, be viewed as work in progress until more definitive long-term outcomes and cost-effectiveness data are published in a peer-reviewed journal.

Crisis Text Line

A “human-first” interface that combines the accessibility of mobile technology (text) with NLP to triage high-risk cases and provides virtual counseling support.

Crisis Text Line provides free, 24/7 counseling to people in crisis via text. In doing so, it has changed the crisis intervention space by enabling and expanding care for people who might otherwise find it difficult to get help. It is often the first or only service they have used. Fewer than half (45%) of users have spoken to anyone else.

The service was the idea of Nancy Lublin, then chief executive officer of DoSomething.org; Lublin launched the service in 2013 as an initiative of that organization. Within four months, it was being used throughout the US. Two years later, it became a separate entity. To date, Crisis Text Line has exchanged 100 million messages and trained 20,000 crisis counselors.

Three straightforward principles guide staff and volunteers:

- Crisis Text Line’s priority is to help people move from a hot moment to a cool calm, and then help them create a plan to stay safe and healthy.

- Data science and technology make the organization’s services more efficient and accurate. The organization uses data to inform, train and guide staff members. Every text is viewed by a human.

- Open collaboration is critical, and data can lead to prevention. The organization’s data updates every 30 seconds. It is aggregated, anonymized and published in an open forum at CrisisTrends.org.

In practice, each texter is triaged at several levels: Algorithms scan initial texts for severity. Imminent-risk texters are identified, and the organization serves 94% of high-risk texters in under five minutes. Trained crisis counselors exchange messages directly with the individuals in crisis. Full-time professional staff members provide counselors with support, guidance and review.
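The first triage level described above, in which algorithms scan initial texts for severity so that higher-risk texters are served sooner, can be sketched as a priority queue. This is a minimal sketch under stated assumptions, not Crisis Text Line's actual system: the phrase weights, the `severity_score` function and the `TriageQueue` class are all hypothetical, and a simple keyword score stands in for whatever NLP model the organization really uses.

```python
import heapq
from itertools import count

# Hypothetical phrase weights, for illustration only.
SEVERITY_WEIGHTS = {"suicide": 10, "overdose": 8, "hopeless": 4, "anxious": 2}


def severity_score(text):
    """Sum the weights of all listed phrases found in the message."""
    lowered = text.lower()
    return sum(w for phrase, w in SEVERITY_WEIGHTS.items() if phrase in lowered)


class TriageQueue:
    """Serve the highest-scoring texter first; ties go to the earliest arrival."""

    def __init__(self):
        self._heap = []
        self._arrival = count()

    def add(self, texter_id, first_message):
        score = severity_score(first_message)
        # heapq is a min-heap, so the score is negated to pop the highest first.
        heapq.heappush(self._heap, (-score, next(self._arrival), texter_id))

    def next_texter(self):
        _, _, texter_id = heapq.heappop(self._heap)
        return texter_id


queue = TriageQueue()
queue.add("texter-a", "I feel anxious about my exams")
queue.add("texter-b", "I feel hopeless and keep thinking about suicide")
queue.add("texter-c", "I just need someone to talk to")
order = [queue.next_texter() for _ in range(3)]
```

With these made-up messages, texter-b is served first, then texter-a, then texter-c. The queue only reorders who is reached first; consistent with the principles above, a trained human counselor still reads and answers every text.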

The Human Development Research Foundation

Uses a combination of on-the-ground volunteers and technology to reach a population otherwise unserved by developmental health services. Avatars are used to help people understand and recognize health challenges, and the approach is being extended into mental health.

As in many low-income countries, the treatment gap for developmental disorders in rural Pakistan is almost 100%. Children are not getting the treatments they need; caregivers are not getting the support or guidance they require. The Human Development Research Foundation (HDRF), a nonprofit charitable trust based in Mandrah, Pakistan, seeks to bridge that gap, in part, with technology.

One initiative in particular showcases the potential for doing so. This programme was conducted in collaboration with the University of Liverpool, UK, and supported by Grand Challenges Canada; Autism Speaks, USA; and the HDRF in Pakistan. It ran from 2014 to 2017 and targeted a rural area with a population of 50,000.

First, the organization tapped into local community health workers, who visit each household at least once a month, to distribute a leaflet describing the main signs of a developmental disorder, conveying a motivational message and providing a free phone number to call for assistance.

iCALL

Uses multichannel technology tools to provide mental health services with an “any time, anywhere, any way” approach, with a focus on marginalized communities.

Mumbai, India-based iCALL is a technology-assisted mental health service providing telephone, email and chat counseling in nine languages by trained mental health professionals. The service was launched in 2012 as a project at the School of Human Ecology, Tata Institute of Social Sciences (TISS), Mumbai, to provide mental healthcare in areas lacking enough trained professionals and services to meet the need.

A number of special features mark iCALL’s services. For example, during work hours, callers are immediately connected to counselors without having to deal with an interactive voice response system. Email is particularly helpful for those who may not have the privacy to talk on a phone, and chat-based counseling allows users to remain anonymous if they so choose.

All iCALL services are provided by mental health professionals who have a master’s degree in either clinical psychology or counseling psychology; these individuals also participate in more than 40 hours of training to ensure that they are ready to help across a wide range of challenges.

iCALL’s focus is on inclusion, and on working with an understanding of, and sensitivity to, the realities and concerns of variously marginalized individuals and communities. These include persons who are vulnerable to violence and abuse based on (often intersecting) factors such as age, gender, caste, class, sexuality, physical ability and mental well-being.

The organization’s vision also calls for increased capacity for mental healthcare in India overall. With that goal in mind, iCALL has initiated a number of partnerships and collaborative efforts.

MoodGYM

Self-directed training designed to prevent or reduce anxiety and depression.

MoodGYM is an interactive, automated, online programme designed to prevent and/or reduce symptoms of anxiety and depression. Developed in 2001 by researchers at the Australian National University (ANU), it is essentially self-directed training in cognitive behavioural therapy (CBT). It can be used anonymously, and is accessible online.

MoodGYM has more than 1.2 million users, hailing from locations all over the world. And interestingly, approximately 40% of them report that they were referred to the programme by a health professional. That’s likely because of MoodGYM’s extensive and growing evidence base demonstrating its efficacy. Its seminal study was a randomized controlled trial (RCT) published by ANU researchers in 2004 – the first trial in the world to demonstrate that an automated internet programme could reduce depressive symptoms. The trial also found that the programme was cost-effective relative to conventional treatment.

Until 2016, the programme was delivered by the ANU and was available cost-free to end users worldwide. One major challenge in the implementation of evidence-based internet interventions, however, is the need for ongoing maintenance and upgrades of software systems. MoodGYM’s leaders saw a clear need to develop a funding model that would ensure the programme’s ongoing delivery and improvement over time.

The answer was a spin-off company, e-Hub Health, established in 2016. E-Hub Health delivers MoodGYM free in Australia (with funding from the Australian government) and in Germany (with funding from a large health insurance company). Elsewhere in the world, the programme is available via subscription.

Kaiser Permanente

Enhances patient treatment through digital therapies; improves consultations by capturing patient metrics via a digital interface and providing therapists with insightful analytics-driven information ahead of consultations.

Before 2017, California-based Kaiser Permanente (KP)’s experience with online tools in mental health had been underwhelming. Relatively few people had been willing to use the tools consistently. Clinicians, aware of this reluctance, were not inclined to recommend them. But KP leaders believed in the potential for technology to make mental healthcare more widely available, and to make care better overall. Moreover, the healthcare consortium was seeing a dramatic growth in demand for services, even as evaluations showed that 20–25% of those being treated had subclinical (mild or early-stage) symptoms for which medication or in-person psychotherapy was not the best option.

So the staff at KP’s internal Design Consultancy undertook research to better understand the barriers preventing patient engagement. One important finding? Those being treated were more inclined to use online tools that were embedded in an overall system of care supporting their recovery and wellness. With that insight in mind, the team generated a curated set of tech-based solutions. In 2018, Kaiser Permanente began testing these new tools. The team also trained more than 50 clinicians in eight care settings in three KP regions.

Today, more than 700 people have been referred to these tools. The average 30-day engagement rate across all tools is roughly 30%, which is two to three times higher than the industry average. Several of the tools have a much higher engagement rate, and the company plans to narrow its digital “formulary” to the most effective tools before offering this approach more broadly across the organization.

7 Cups

Uses multichannel technology tools to provide mental health services with an “any time, anywhere, any way” approach.

Psychologist Glen Moriarty saw that there was great power in listening but knew that many people do not have someone to talk to. In 2013, to meet that need, he founded 7 Cups. Today, the Palo Alto, California-based organization offers web- and smartphone-based access to free, on-demand, anonymous emotional support. 7 Cups has 320,000 trained volunteers (including many credentialled professionals), speaking a total of 140 different languages, serving millions of individuals.

The organization offers help in several forms.

People seeking support can connect anonymously to volunteers trained to offer active listening. “Listeners” are searchable by gender, age, issue specialty, language and country.

Communities are available for those who wish to participate in moderated, online discussion boards. Moderated chat rooms and scheduled topic-specific group chats allow for real-time support at all hours of the day or night and in multiple languages. The goal is to help people realize that they are not alone.

Noni, the 7 Cups AI interactive chatbot, weaves together psychotherapy scripts and AI to communicate empathy and acceptance to individuals who might be too anxious to interact with another person but are still seeking help. Noni is also preferred by individuals who are concerned about the shame and stigma they perceive might exist should their need become known.

Finally, 7 Cups offers 32 self-help treatment plans. These are empirically backed protocols that guide users as they complete simple wellness steps (such as therapeutic exercises and guided meditations) that build towards improved mental health.

Ieso Digital Health

Offers online cognitive behavioural therapy (CBT) services, with integrated deep-learning models used to monitor and continuously improve the quality of care delivery.

Ieso Digital Health (IDH) provides online, evidence-based CBT to people with common mental health conditions, such as depression and anxiety disorders. IDH patients communicate one-to-one with an accredited trained clinician using a real-time text-based message system. Therapy is delivered using an internet-based platform securely accessible from any connected device 24/7. Typically, individuals receive five to seven hours of treatment during a course of care.

About 60% of users access treatment via a mobile phone. This online modality makes it easier to get care if, for example, a person cannot get to a physical clinic during working hours, if they live in a rural location or face mobility challenges.

Developed for the British National Health Service, IDH is delivered in the UK through a programme called Improving Access to Psychological Therapies (IAPT). In 2018, the service received over 16,000 referrals, with the 2019 figure expected to exceed 30,000. IDH has also begun to expand beyond its original borders. For example, in the US, working with major health insurance companies, the organization has established a care delivery network in over 40 states.

IDH’s service integrates mandatory recording of clinical outcomes at each therapy session. Service and provider performance is monitored continuously using standardized clinical outcome scales. Regular supervision, continuing professional development and clinical decision support are available free of charge to therapists. Researchers at IDH also use this data, together with natural language processing and deep-learning techniques, to inform and improve mental healthcare delivery models.

Inuka

Uses technology to train “life coaches” and thus scale local mental health provisions in areas where there are few qualified professionals.

Across Africa and India, mental health professionals are conspicuously absent. Inuka aims to overcome this harsh reality by making affordable, effective and accessible support available via a digital platform. Its team emerged from within the Philips Innovation Hub in Kenya, after a chance meeting between associate professor and director of the African Mental Health Research Initiative Dixon Chibanda and Robin van Dalen, now Inuka’s chief vision and facilitation officer. The two launched Inuka as an independent social enterprise in 2018.

Before Inuka’s founding, Dixon Chibanda had been making waves as a scientist with his unique approach to offering problem-solving therapy (PST). That evidence-based approach, called “The Friendship Bench”, centres on trained non-clinicians administering PST. Inuka has translated the method to a digital setting.

Care-givers – called “life coaches” – receive certification by Amref Health Africa, the largest health development non-governmental international organization based in Africa. They are then connected with individuals seeking support, setting up four 50-minute sessions, which are conducted via confidential chat.

Life coaches are supervised and supported by licensed therapists. Users are asked to provide feedback after each session, to help the organization continually improve its offerings. The Inuka programme is not designed to replace mental health treatment by professionals; however, it is a valuable way to nurture mental well-being.

Interventions deployed by phone and web also promise immediately scalable solutions. As our case studies suggest, not only can these interventions be cost-effective and often easily replicated, but they are also reaching populations that have not previously had access to support. The simplest solutions use text, phone calls or video to connect individuals to a medical professional who can offer diagnosis, medicine, and counseling. Those with access to the internet can take advantage of more sophisticated services that offer multichannel counseling and support via text, phone, online chat tools (anonymous if desired) and even email (popular among individuals who have no privacy to make phone calls).

Online exercises, chatbots and other tools can deliver a range of diagnostics and therapies without the presence of a counselor.

These technologies are also enhancing the effectiveness of mental health workers. In many countries, a lack of trained psychiatric professionals is the biggest immediate cause of the treatment gap. Effectively taking advantage of care expertise is thus essential. Here, mobile and digital technologies can facilitate the training of staff and volunteers (through online programmes, videos and webinars, for instance). More advanced technologies, such as Expert Learning Systems, can be programmed to create an interactive encyclopedia of symptoms, treatments and much more. Mental health workers can tap into this information to enhance their skills and knowledge, while individuals seeking help can also access it directly (for example, through AI-powered chatbots such as 7 Cups’ Noni).

Finally, by enabling the creation of data lakes, mobile and internet technologies, along with AI, are tackling one of the most significant barriers to the implementation of effective mental health services and treatment at scale: the lack of robust, evidence-based data. The power of big data to unlock effective prediction, diagnoses and treatment represents one of the most exciting – and most ethically challenging – frontiers in the effort to improve the world’s mental health.

Take digital phenotyping, which involves the collection of high-quality data on, for example, sleep patterns, cognitive function, activity or speech. When the data and insights from thousands of individuals are combined, the resulting data lake becomes the richest possible source of understanding. Longitudinal data about multiple conditions, with the help of AI, could be developed into predictive and diagnostic tools that could forever change the way we manage mental health. The big data provided by these technologies holds the key to so much insight; yet with it come the privacy issues associated with the collective use of sensitive personal data. As we discuss later in the report, finding the right, ethical way forward is essential.

Since this report is focused primarily on solutions that are scalable and affordable in the near term, we have not touched on many other promising innovations. These include EEG technology (electroencephalography, which allows researchers and practitioners to monitor and capture brain activity), extended reality (used, for example, in the treatment of obsessive-compulsive disorder [OCD] and phobias), and digital medicine (medicine that sends a digital signal to confirm it has been ingested, such as in the treatment of schizophrenia). [27]

Technology brought to bear in mental healthcare can be:

- Accessible: available wherever and whenever it’s needed

- Affordable: relatively low incremental costs

- Confidential: offering a safe, confidential or even anonymous environment for those not ready to talk openly

- Digital-friendly: particularly attractive to younger generations who have grown up with digital technologies

- Empowering: helping people take responsibility for their own mental health

- Enabling: helping professionals make a diagnosis and determine a treatment path, even for more unusual conditions

- Equalizing: having the potential to reach all individuals regardless of race, country, income or gender

- Non-judgemental: can be developed without bias, judgment or stigma

- Scalable: effective across distances and even geographic boundaries.

Today, the efficacy of many of these technologies remains to be confirmed by research, but among them is a growing number of lower-risk, assistive technologies that show great promise in being able to provide support at scale: online assessment tools; crisis counseling via text messaging; digital cognitive behavioural therapy and tele-psychiatry are examples with an increasing evidence base to support their wider use.

However, even as these benefits come into focus, the need to address the complex ethical issues that surround the use of technology in mental healthcare becomes more acute.

Ethical challenges must be addressed with urgency

It is clear that technology already has much to offer in terms of providing, and improving access to, better mental healthcare. It is reasonable, therefore, to ask why more has not already been done to take advantage of these tools. Traditional challenges to adoption (such as lack of funding) play a part. However, there is also an understanding within the industry that greater use of new and existing technologies in this space requires policy-makers and practitioners to navigate a complex web of ethical dilemmas, particularly in areas such as data privacy and individuals’ rights. The general nervousness surrounding this journey is understandable, but it has resulted in a reluctance to develop and scale technology-based initiatives that could improve – and save – the lives of large numbers of people.

That’s why the Council believes it is time to resolve the ethical dilemmas associated with mental healthcare. In many areas, the ethical principles and standards required to govern the use of technology in mental healthcare do not differ radically from those required in other healthcare and non-healthcare sectors. This reality needs to be better understood and acknowledged.

We must also combat the natural tendency to focus only on the ethics of “doing something new” and pay more attention to the ethics of “not doing something new”. Focusing only on the former tacitly implies that the status quo is both proper and ethical, although this is not always the case.

In fact, given the scale of the diagnosis and treatment gaps that exist within mental health – and the problems people and society as a whole face as a result – there are strong ethical arguments for taking bold action in this space.

Ultimately, we believe that the global conversation around the ethics of using technology within mental healthcare needs to urgently address five issues relating to:

- Trust: Focus on transparency and security to build trust.

- Big data: Establish protocols to enable research while respecting individuals’ data.

- Intervention: Intervene more proactively on mental health issues.

- Equal access: Use technology to promote broad and equal access to treatment, thus combatting rather than exacerbating the digital divide.

- Pragmatic progress: Manage the need for safety and efficacy alongside the need to address treatment gaps quickly.

1. Trust: Focus on transparency and security to build trust.

There is a tendency to view “privacy” and “security” as interchangeable terms; however, they are two very different concepts. Some of the highest-profile data privacy scandals have involved social media platforms whose fundamental purpose is to allow individuals to share or publicize their experiences, views and personal information – the very opposite of keeping them private.

Often, an individual’s goal is to make sure they have clear controls around (and an informed understanding of) what aspects of their data are being collected and stored, how they are being used and, most importantly, for what purpose. Similarly, when personal information is to be shared more broadly, people want reassurance that this sharing is being undertaken in as secure and ethical a manner as possible, and that it is justified by the benefits that may result from it.

Well-informed consumers are aware of these subtle tradeoffs. Equally, they know that their personal information is valuable, and that its value and utility can be enhanced by granting others access to it or by allowing it to be combined (or compared) with other types of information. Furthermore, some young people are significantly more open to sharing their personal data than older people – and so, as the generations evolve, a more permissive global attitude towards data sharing and privacy may naturally emerge.

There may even be occasions when those experiencing mental ill health and practitioners would tangibly benefit from certain privacy laws being relaxed rather than strengthened. In the US, for example, there is separate legislation (42 Code of Federal Regulations, Part 2) that restricts the sharing of any individual’s data relating to alcohol or substance dependency. The legislation was well-intentioned and introduced at a time when individuals might otherwise have avoided seeking treatment out of fear that police and prosecutors could obtain the resulting healthcare records and use them to secure convictions for drug use. Some US mental health practitioners (whose work is hampered by an inability to access or share their clients’ full medical and behavioural histories) are lobbying for it to be amended or withdrawn.

Trust and transparency are paramount when it comes to the use and sharing of personal information, especially when the data relates to something as intimate as a person’s mental health. The process of obtaining and managing consent should be made transparent and easy at every stage. And it can be, within relatively straightforward contexts and applications. However, one of the reasons trust is so important within the mental healthcare space is that, due to the complexity of the data being collected, and the likely length of any written terms and conditions surrounding its use, many people will base their decisions on instinctive trust (either in their own clinician or in the organization concerned) rather than on a comprehensive understanding. Note also that, as a society, we are becoming increasingly accustomed to blindly accepting written terms and conditions – especially in relation to apps on smartphones – without either reading or digesting them. In doing so, users are in effect (often unconsciously) placing significant trust in whoever has developed the product or written the agreement; they are trusting that the organization, its software and potentially its selected third parties will only act ethically and in the user’s best interests.

Trust takes time to develop, but it can be lost quickly – and the effect of losing trust may be worse than never having had it in the first place. Mental health practitioners are arguably some of the most trusted members of society, due in no small part to the highly confidential nature of the conversations to which they are privy. Technology companies, on the other hand, do not typically command those same high levels of public trust. To realize the potential of technology in mental healthcare, psychiatrists and other mental health practitioners will need to work hard to build people’s trust around new digital tools and technology-based therapies, and the technology companies themselves must embrace the need (demand) for ethical practice; a failure to do so jeopardizes all.

Of course, practitioners themselves also need to be open to incorporating new tools into treatment plans. The provision of mental healthcare continues to be based on a model where in-person, one-to-one sessions with a highly qualified professional represent the best form of intervention. In some circumstances, this is appropriate, but in the case of other (especially lower-level) mental illnesses, technology can play a valuable role in delivering or facilitating effective therapy – and at scales that the human workforce could not achieve. However, trust remains vital – because even the best technology can be rendered useless if people (and practitioners) do not trust it enough to use it confidently.

2. Big data: Establish protocols to enable research while respecting individuals’ data.

The primary benefits of being able to use individuals’ mental health data (digital exhaust, phenotyping and other technology-generated data) at the aggregate level would be to improve prevention, diagnosis and prediction – understanding cause, triggers, early warning signs – and to evaluate the efficacy of mental health treatment and thereby develop more effective treatments that would benefit both individuals and society.

Within populations (countries, health regions), data would offer the ability to better understand, and improve, a population’s overall level of mental well-being and happiness and to help target resources. But when it comes to using an individual’s mental health information beyond their own clinical team or treatment setting, practitioners and healthcare providers must navigate very carefully the logistical and privacy issues surrounding the de-identification and anonymization of that data. In navigating the issues around big data, it will be critical to engage with individuals and to put them in control of their data.
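As a rough illustration of what de-identification and aggregate use can involve in practice, the minimal Python sketch below strips direct identifiers from a record, replaces the patient identifier with a salted hash, and reports regional averages only for groups above a minimum size. The field names (`patient_id`, `name`, `phone`, `email`, `region`, `score`), the salt handling and the threshold of five are all hypothetical choices made for this example, not practices described in this report. Note that salted hashing is pseudonymization rather than full anonymization, so re-identification risk would still need separate assessment.

```python
import hashlib
from collections import defaultdict

# Hypothetical field names; a real dataset would define its own identifiers.
DIRECT_IDENTIFIERS = {"name", "phone", "email"}


def pseudonymize(record, salt):
    """Return a copy of the record without direct identifiers, keyed by a salted hash."""
    digest = hashlib.sha256((salt + record["patient_id"]).encode("utf-8")).hexdigest()
    cleaned = {
        key: value
        for key, value in record.items()
        if key not in DIRECT_IDENTIFIERS and key != "patient_id"
    }
    cleaned["pseudonym"] = digest[:16]
    return cleaned


def aggregate_by_region(records, min_group_size=5):
    """Average a symptom score per region, suppressing groups below min_group_size."""
    groups = defaultdict(list)
    for record in records:
        groups[record["region"]].append(record["score"])
    return {
        region: sum(scores) / len(scores)
        for region, scores in groups.items()
        if len(scores) >= min_group_size
    }
```

Suppressing small groups is one common safeguard against singling out individuals in published aggregates; the appropriate threshold would depend on the dataset and the applicable regulation.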

3. Intervention: Intervene more proactively on mental health issues.

Governments, communities, organizations and individuals have all, to a certain extent, been less willing to intervene when faced with poor mental health than they would be when faced with a similar level of poor physical health. For example, most people walking down the street would stop to offer help if they saw a person fall over and hurt their arm, but many of those same people would not choose to stop and talk to a person who was sitting on the pavement in a confused state calling out to passers-by. Similarly, many people would not even feel comfortable starting a conversation with one of their own friends whose mental health appeared to be declining.

There are some understandable reasons for our culture of non-intervention. One immediate challenge is that even if the symptoms are visible, the underlying problem may be highly intractable in nature and the solution may be far from obvious. As such, it is difficult for friends and relatives to offer help because they simply do not know what type of help is required.

Policy and funding decisions can also be affected by the intractability of many mental health problems, along with the often-unquantifiable nature and timescale of the benefits of launching or scaling new initiatives in this space. Governments have the added complication of their historical role in relation to mental health: in centuries past, one of the state’s primary approaches to dealing with people suffering from mental illness was to lock them up and/or to criminalize their activities. Although frustration and punishment have been replaced by empathy and treatment in some countries, incarceration and human rights abuses of those with mental illness are still all too common in some parts of the world.

Many people are inherently suspicious of governments and institutions exploiting new forms of technology, so much so that occasionally the ethics of a situation are viewed very differently based on whether technology is part of the equation.

For example, if a person is considered a risk to themselves (if, for example, they tell a colleague they are considering taking their own life and are later seen heading to the roof of their office building), then the emergency services will be informed and action taken to prevent loss of life. Very few people would question the ethics of intervening in such a scenario. But what if the only clues to a person’s suicidal tendencies were digital or technology-based (for example, if they have been researching suicide techniques online or they have posted suicidal thoughts on social media)? Then there is broad debate about ethics. Should society be making use of readily available, well-validated digital tools to detect and intervene to prevent suicides? When a human being raises the alarm, they are seen as being caring, but if an algorithm or a bot raises the alarm, it is perceived as being intrusive.

Most governments are not actively monitoring their citizens’ internet search activities for keywords associated with mental illness or suicide. However, many large technology firms and social media giants are taking steps to help people who display signs of poor mental health.

For example, people searching the internet for information about how to take their own life will often have their search results manipulated; typically, they are presented first with the telephone number of a counseling service and other sources of mental health advice, whereas the results for their actual search query are relegated further down the page. Similarly, many of the large social media platforms employ human and algorithm-driven “compassion teams” who look out for concerning behaviours and content, and who can either generate helpful pop-ups for the user (promoting a helpline, for example) or refer troubling material to local authorities. This activity generates conflicting reactions from outside the industry (for example, because of the lack of transparency over the algorithms used), yet such firms are often criticized for failing to do more, or for not being able to prevent tragic events in real time. The issue could be addressed under the governance structure we propose in our recommendations.

Just how proactive society wants its governments and organizations to be when intervening in mental health will doubtless evolve over time, and it will continue to be hotly debated. But part of the answer to this ethical issue (and the related data privacy topics) lies in destigmatizing mental health in general. Policymakers are hesitant to examine if and when to intervene more proactively on mental health issues in part because they know that citizens perceive mental health data to be intimate, and that in turn is because mental health is still viewed by many as a taboo subject. People feel able – or even proud – to tell their friends that they have beaten cancer or openly test their blood sugar levels to manage their diabetes, but most would not feel comfortable announcing that they have managed to significantly reduce their level of depression and anxiety. Working to reduce that stigma and valuing positive mental health – so placing mental health on an equal footing with physical health – will enable society to take a far more open and proactive approach to mental health and well-being.

4. Equal access: Use technology to promote broad and equal access to treatment, thus combatting rather than exacerbating the digital divide.

One of the main advantages to using technology-based mental healthcare therapies is that, once developed, these solutions can often be scaled relatively quickly and cheaply compared to traditional forms of therapy. This is largely because some of the required infrastructure and underlying resources already exist. For example, rather than having to build new clinics or double the number of trained practitioners in a specific geographic location, individuals can instead access treatment via mobile devices that they already possess. In some parts of the developing world, mobile phones have become more ubiquitous than clean drinking water. Treatments such as conversational or chat-based therapy can be delivered virtually, allowing people in areas with few or no local therapists to benefit from experts based in other parts of the country, or even other parts of the world. Equally, as seen in some of the case studies highlighted by this report, technology can also play a valuable role in connecting, mobilizing and training members of the local community to provide effective support to others, often on a truly anonymous basis. And because digital assets can be replicated and distributed almost without additional cost (for example, a self-guided mindfulness app can be downloaded by one user or by one million users), the potential for scaling these tools is huge, and their propagation may even start to take place organically via word-of-mouth referrals.

It is clear, therefore, that technology has the potential to be a great leveler in terms of improving the breadth and equality of access to mental health information, advice and treatments. And many of the individuals and communities who stand to benefit most from these new digital tools live in low-income or middle-income settings – settings that exist in the developed world as well as in developing countries. However, conscious action must be taken to prioritize the development of therapies that will deliver this equalizing effect – rather than prioritizing other kinds of tools that, because they may be relevant or accessible to only a privileged or self-selecting segment of the population, threaten to create a digital divide within mental healthcare. The focus should therefore be on increasing the availability and adoption of these new tools to the highest standard of care for the individual, rather than becoming overly concerned with the sophistication of the tools themselves. It is human nature to become distracted by the nascent capabilities of fashionable new devices. But these may be available to only an incredibly small number of people, whereas more basic methods (for example, simple SMS-based messaging on a basic mobile phone) may ultimately generate a far greater benefit to society due to their reach and accessibility.

When considering how to promote broader awareness and adoption of technology-based initiatives, it is important also to exploit non-traditional distribution channels for bringing individuals (or just people in general) into contact with these new tools at scale. It is not simply the government or the professional healthcare sector that has a role to play here; entities such as employers, schools and universities are all well-placed to make a huge contribution. Some large employers, for example, are starting to incorporate mindfulness and meditation apps – along with more traditional telephone advice helplines – into the suite of offerings made available to help employees monitor and improve their mental health and well-being. Promoting awareness of (and destigmatizing) mental health issues among young people is also highly desirable, because many common forms of mental illness, such as anxiety and mild depression, can begin early in life and then either worsen or reoccur throughout adulthood. Initiatives such as those undertaken by the Black Dog Institute – whose activities include making mental health smartphone apps available to entire school populations in parts of Australia – are a good example of how to make information and advice available to young people at scale and in a non-judgemental manner.

5. Pragmatic progress: Manage the need for safety and efficacy alongside the need to address treatment gaps quickly.

One of the most commonly raised ethical concerns surrounding the implementation of technology within mental healthcare is that some of these new tools and capabilities have not yet been fully perfected or undergone rigorous clinical trials. This attracts criticism that the resulting therapies may not be effective.

Researchers in Germany, France, and the US recently developed a convolutional neural network (a form of AI) that could detect skin cancer by looking at images of potential melanomas. During tests, the computer successfully detected 95% of the malignant melanomas shown to it. Clearly, there is room for improvement. However, the human dermatologists scored only 87% in the same tests. People and doctors would thus benefit from having access to these kinds of tools. But society tends to treat human error and poor judgment differently from errors caused by machines or algorithms; there is a higher expectation that technology should get it right and less understanding when there is an error. And indeed, there are times when that higher expectation is justified; when a human makes a mistake, it’s (typically) the error of a single person, but if a machine is programmed wrongly, the mistake or bias is too often replicated at scale.
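To make the size of that gap concrete, the short Python sketch below converts the two detection rates quoted above into missed cases for a given number of malignant melanomas. The cohort of 1,000 cases is a hypothetical figure chosen purely for illustration, and the sketch assumes both percentages measure the same thing (the share of malignant cases correctly detected), as the comparison in the text suggests.

```python
def missed_cases(malignant_cases, detection_rate_percent):
    """Number of malignant cases left undetected at a given detection rate (in whole percent)."""
    return malignant_cases * (100 - detection_rate_percent) // 100


# Hypothetical cohort size, used only for illustration.
cohort = 1000
missed_by_network = missed_cases(cohort, 95)
missed_by_dermatologists = missed_cases(cohort, 87)
print(missed_by_network, missed_by_dermatologists)
```

Under these assumptions, the network would miss 50 cases per 1,000 and the dermatologists 130. The same arithmetic also shows why a systematic machine error matters: a fault in the detection rate applies to every case the tool sees, not to one clinician's caseload.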

Where low-risk technology exists that can outperform humans with more accurate or timely diagnoses, or better treatment outcomes, then there is clearly a strong ethical (not to mention economic) case for deploying it. However, there are also instances where it makes sense to use technology-based therapies even if they are not as effective as in-person therapies. For example, it is widely believed that a human therapist can provide a more empathetic experience than could ever be provided by an automated chatbot or an algorithm, and there is concern that technology-based therapies could leave people feeling isolated and uncared for. But having the opportunity to interact with an app or an algorithm may still be better than receiving no treatment at all.

In the race to respond to the global crisis in mental healthcare there is a need to understand the risk profile of the technologies that may be part of the solution. High-risk technologies are those that offer diagnosis and/or clinical interventions and have the power to harm (a misdiagnosis of depression has extremely serious implications for someone who is actually suffering from thyroid deficiency). Such applications require testing and approval before implementation. Lower risk technologies – such as those that monitor symptoms or that assist a therapist in their diagnosis – make it easier to access advice that will help to provide much-needed support in the near term. As they are deployed, careful measurement of outcomes and of unintended consequences (such as how individuals feel when the human element is partially or completely removed) will be an essential part of any trial or implementation.
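The two-tier distinction drawn above can be sketched as a simple rule. The Python example below is an illustrative reading of that paragraph only: the class, field and function names are hypothetical, and a real regulatory classification would involve far more criteria than the two flags used here.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    HIGH = "high"
    LOWER = "lower"


@dataclass(frozen=True)
class Tool:
    # Hypothetical description of a mental health technology.
    name: str
    offers_diagnosis: bool = False
    delivers_clinical_intervention: bool = False


def risk_tier(tool):
    """High risk if the tool itself diagnoses or intervenes clinically; lower risk otherwise."""
    if tool.offers_diagnosis or tool.delivers_clinical_intervention:
        return RiskTier.HIGH
    return RiskTier.LOWER


def requires_approval_before_use(tool):
    """Per the paragraph above, high-risk tools need testing and approval before implementation."""
    return risk_tier(tool) is RiskTier.HIGH
```

For example, a symptom-monitoring app with neither flag set would fall into the lower tier, while a tool that issues a diagnosis on its own would require approval first. Lower-tier tools would still need the outcome measurement described above.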

Eight actions to scale the use of mental health technology

The human, social and economic imperatives to improve mental health provisions are compelling. Technology offers transformational solutions that are ready for deployment at scale. We recommend that global leaders take the following actions and, in doing so, are guided by the principle of “human-first”, putting the mental healthcare user at the heart of every step and engaging them in all decisions.

- Create a governance structure to oversee the broad, ethical use of new technology in mental healthcare, ensuring that innovations meet the five ethical imperatives listed above and updating them as appropriate. People with lived experience, governments, policy-makers, technology providers and medical professionals must come together to agree how to govern the use of technology in general and the handling of personal data and effective outcome measurement in particular, learning from the best examples of existing governance structures. Governance must also include the views and voices of people with mental health concerns, as they are the ones who stand to gain and lose the most.

- Develop regulation that is nimble enough to enable and encourage innovation while keeping pace with technology advances when it comes to ensuring safety and efficacy. The need to manage proof of efficacy and safety alongside the need to act quickly where provision is lacking has been highlighted above. Some medical technologies will – and do – require approval for use. However, to prevent innovation being stifled and opportunities to tackle the growing problem in mental healthcare being missed, regulations and guidelines must be developed to allow for continual adjustment as technology advances. Governments and regulatory bodies need to work with technology-led mental healthcare providers and medical experts to ensure these actions are addressed.

- Embed responsible practice into new technology design. The power, speed and reach of new technologies are at the heart of their potential to transform healthcare. But they also carry risk – of replicating errors at scale and of unintended consequences. In designing new technologies, in particular those that entail direct connections with our brains, technology business leaders and entrepreneurs need to embed and embrace responsible practice. An unintentional misstep could damage the very people the technology was supposed to help.

- Adopt a “test and learn” approach when implementing new, technology-led mental healthcare services. When it comes to rolling out programmes and initiatives using these technologies, it is vital to test, learn and adapt. For example, as mentioned previously, we don’t yet know how those seeking support will feel if services are provided by technology rather than through the “human touch” of a therapist. Healthcare practitioners may feel a loss of agency/autonomy as intelligent technology takes on some of their activities. Through the new governance body, protocols will need to be established and all technologies, particularly higher-risk technologies, will need to be regulated. Here, medical experts, technology healthcare providers, governments, leading research bodies, healthcare professionals and users will need to work together.

- Exploit the advantages of scale. Inter-governmental and inter-agency collaboration, together with work alongside private-sector or not-for-profit mental healthcare providers/technologies, can help coordinate mental health strategies. Designing and deploying these strategies on at least a country-wide or regional basis could help maximize funds and minimize effort and duplication. Where countries are in a state of war, famine or unrest or where there has been a natural disaster, it is possible to provide mental health support from beyond the country’s borders. As with all recommended actions, the involvement of the people receiving the planned healthcare services is essential.

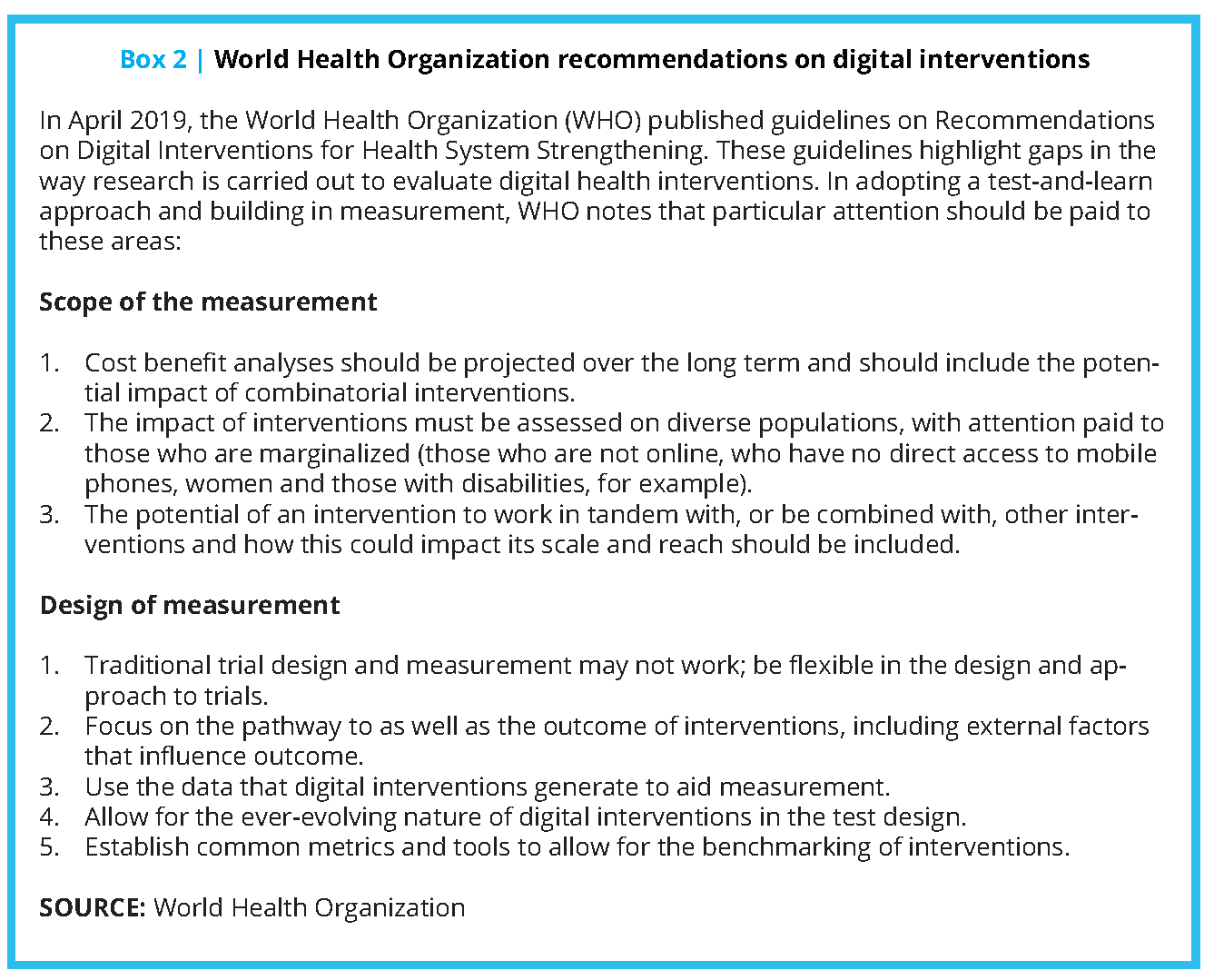

- Design in measurement and agree on unified metrics. To ensure that future innovation is firmly linked to efficacy (currently, there is a lack of tangible, outcome-based measures) and does not have unintended consequences, it is essential to design in tracking and measurement from the first step. As set out in the World Health Organization’s guidelines on digital health interventions, [28] measurements should extend to cost-effectiveness and ensure that inequalities (in gender, income levels, etc.) are not perpetuated or introduced by the use of technology-driven solutions. A unified understanding of mental health and the metrics used to measure and assess it will be essential. As before, multidisciplinary collaboration and the inclusion of people with lived experience are essential.

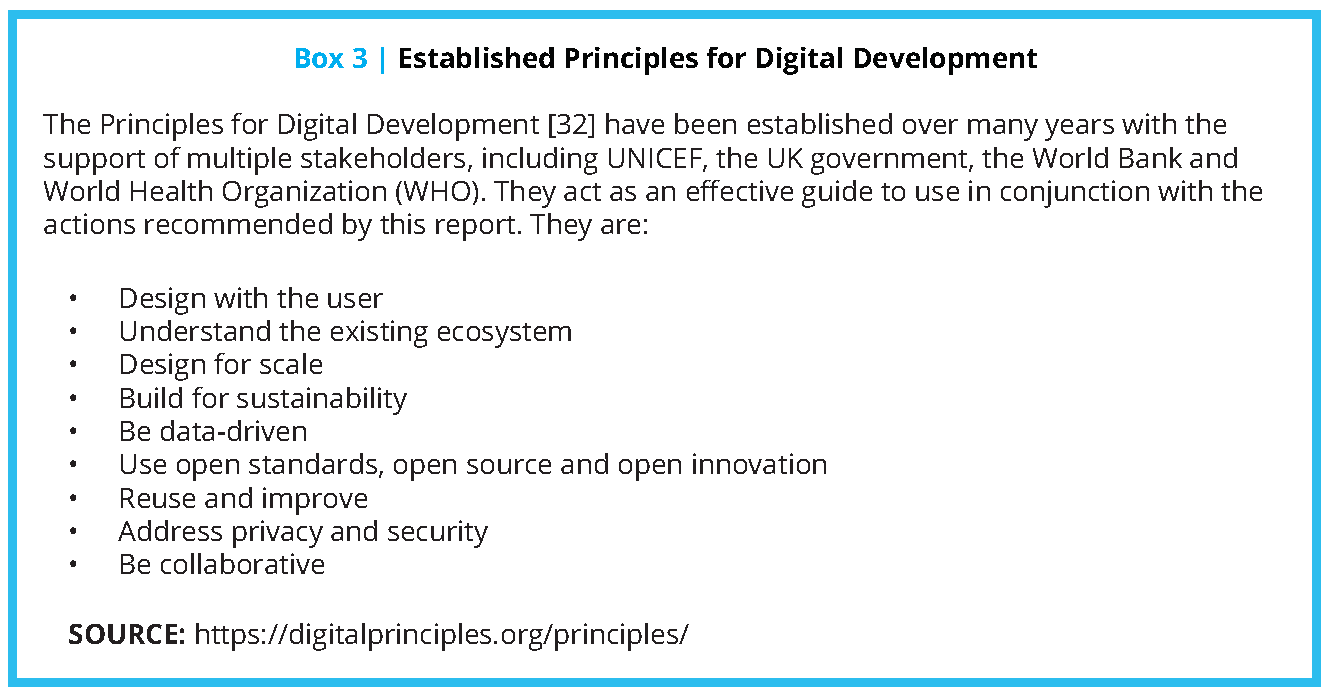

- Build sustainable technology solutions. While technology offers the potential for far-reaching, sophisticated solutions, these solutions must also be sustainable. Simpler technologies have much to offer and should not be overlooked in favour of what seems to be a “smarter” solution. Inter-governmental and inter-agency collaboration will be needed, as will work with private-sector or not-for-profit mental healthcare providers/technologies (see Boxes 2 and 3).

- Support low-income communities and countries. International aid from governments to support physical health is a long-established practice. In 2015, over $50 billion was given in health aid worldwide, and a wealth of research and effective methodologies are available. [29] In marked contrast, global development assistance for mental health was just $0.85 per DALY (disability-adjusted life year) compared with $144 for HIV/AIDS and $48 for malaria. [30] There is an urgent need to grow mental health aid; technology-supported solutions can help ensure it has the maximum possible reach and impact.
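To make the scale of the funding gap in the last action concrete, the per-DALY figures quoted there can be turned into simple ratios. The short sketch below is illustrative only: the dictionary and the `funding_ratio` helper are hypothetical names introduced here, and the only inputs are the three dollar figures cited in the text.

```python
# Development assistance per DALY in US dollars, as quoted in the text above.
# The names below are illustrative and not part of the original report.
AID_PER_DALY_USD = {
    "mental health": 0.85,
    "HIV/AIDS": 144.0,
    "malaria": 48.0,
}


def funding_ratio(condition: str, baseline: str = "mental health") -> float:
    """Return how many times more aid per DALY `condition` receives than `baseline`."""
    return AID_PER_DALY_USD[condition] / AID_PER_DALY_USD[baseline]


if __name__ == "__main__":
    for condition in ("HIV/AIDS", "malaria"):
        ratio = funding_ratio(condition)
        print(f"{condition}: about {ratio:.0f} times the aid per DALY given to mental health")
```

On these figures, HIV/AIDS receives roughly 169 times, and malaria roughly 56 times, as much development assistance per DALY as mental health.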

The actions above will require the collaboration of international experts from many disciplines: governments, policy-makers, regulators, healthcare providers, technology innovators, and the private and not-for-profit sectors. The input of leading psychiatric professionals and of mental healthcare users will be central to the outcome, and international organizations such as the World Economic Forum and World Health Organization (WHO) have powerful roles to play as conveners. The voices of people with lived experience must be central to the future of this work.

Conclusion

The size of the challenge before us is enormous. But so is the extraordinary potential of technology to help improve mental healthcare, and thus mental health, worldwide. The critical next step is to agree on a way through the ethical dilemmas that technology can pose; with a collective effort on that front, we can make swift progress to expand mental healthcare coverage. Our research shows what can be done using even the simplest of technologies; the ability to scale these quickly and tap into the potential of more sophisticated technologies to great effect is within our grasp.

References

- World Health Organization. 2004. The Treatment Gap in Mental Health Care. Available at: https://www.who.int/bulletin/volumes/82/11/en/858.pdf (accessed September 2, 2020).

- Survey conducted by the Royal College of Psychiatrists, October 2018.

- World Health Organization. 2001. Mental Disorders Affect One in Four People. Available at: https://www.who.int/whr/2001/media_centre/press_release/en/ (accessed September 2, 2020).

- Ritchie, H. 2018. Global mental health: Five key insights which emerge from the data. Available at: https://ourworldindata.org/global-mental-health (accessed September 2, 2020).

- World Health Organization. n.d. Fact Sheet on Depression. Available at: https://www.who.int/news-room/factsheets/detail/depression (accessed September 2, 2020).

- World Health Organization. 2018. Suicide Data. Available at: https://www.who.int/mental_health/prevention/suicide/suicideprevent/en/ (accessed September 2, 2020).

- Ritchie, H. 2018. Global mental health: Five key insights which emerge from the data. Available at: https://ourworldindata.org/global-mental-health (accessed September 2, 2020).

- Mental Health Foundation UK. 2019. Statistics. Available at: https://www.mentalhealth.org.uk/statistics/mental-health-statistics-global-and-nationwide-costs (accessed September 2, 2020).

- OECD. 2014. Making Mental Health Count. Available at: https://www.oecd.org/publications/making-mental-health-count-9789264208445-en.htm (accessed September 2, 2020).

- Accenture. 2018. It’s Not 1 in 4; It’s All of Us. Available at: https://www.accenture.com/_acnmedia/PDF-90/Accenture-TCH-Its-All-of-Us-Research-Updated-Report.pdf (accessed September 2, 2020).

- Chisholm, D., K. Sweeny, P. Sheehan, B. Rasmussen, F. Smit, P. Cuijpers, and S. Saxena. 2016. Scaling-up treatment of depression and anxiety: a global return on investment analysis. Lancet Psychiatry 3: 415-424. http://dx.doi.org/10.1016/S2215-0366(16)30024-4

- National Alliance on Mental Illness of Massachusetts. 2015. Bad for Business: The Business Case for Overcoming Mental Illness Stigma in the Workplace. Available at: http://ceos.namimass.org/wp-content/uploads/2015/03/BAD-FOR-BUSINESS.pdf (accessed September 2, 2020).

- The Lancet. 2018. The Lancet Commission on global mental health and sustainable development. Available at: https://www.thelancet.com/commissions/global-mental-health (accessed September 2, 2020).

- World Health Organization. 2004. The Treatment Gap in Mental Health Care. Available at: https://www.who.int/bulletin/volumes/82/11/en/858.pdf (accessed September 2, 2020).

- Accenture. 2018. Supporting Mental Health in the Workplace: The Role of Technology. Available at: https://www.accenture.com/_acnmedia/PDF-88/Accenture-World-Mental-Health-Final-Version.pdf (accessed September 2, 2020).

- World Health Organization. 2016. Global Health Observatory (GHO) data. Available at: https://www.who.int/gho/mental_health/human_resources/psychiatrists_nurses/en/ (accessed September 2, 2020).

- Unum. 2019. Mental Health. Available at: https://landing.unum.co.uk/mental-health (accessed September 2, 2020).

- World Health Organization. 2019. Mental health, human rights, and legislation. Available at: https://www.who.int/mental_health/policy/legislation/en/ (accessed September 2, 2020).

- Thriving at Work: Stevenson-Farmer Review. 2016. Available at: https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/658145/thriving-at-work-stevenson-farmer-review.pdf (accessed September 2, 2020).

- Statista: Global Digital Health Market 2015–2020.

- Accenture. 2018. Supporting Mental Health in the Workplace: The Role of Technology. Available at: https://www.accenture.com/_acnmedia/PDF-88/Accenture-World-Mental-Health-Final-Version.pdf (accessed September 2, 2020).

- GSMA Intelligence. 2019. Home. Available at: https://www.gsmaintelligence.com/ (accessed September 2, 2020).

- App Annie. 2019. Home. Available at: https://www.appannie.com/ (accessed September 2, 2020).

- Accenture. 2018. Supporting Mental Health in the Workplace: The Role of Technology. Available at: https://www.accenture.com/_acnmedia/PDF-88/Accenture-World-Mental-Health-Final-Version.pdf (accessed September 2, 2020).

- eMarketer. 2016. The Internet of Medical Things: What Healthcare Marketers Need to Know Now. Available at: https://www.emarketer.com/Report/Internet-of-Medical-Things-What-Healthcare-Marketers-Need-Know-Now/2001721 (accessed September 2, 2020).

- World Economic Forum. 2018. 3 Ways AI Could Help Our Mental Health. Available at: https://www.weforum.org/agenda/2018/03/3-ways-ai-could-could-be-used-in-mental-health (accessed September 2, 2020).

- U.S. Food and Drug Administration. 2017. FDA approves pill with sensor that digitally tracks if patients have ingested their medication. Available at: https://www.fda.gov/news-events/press-announcements/fda-approves-pill-sensor-digitally-tracks-if-patients-have-ingested-their-medication (accessed September 2, 2020).

- World Health Organization. 2019. WHO releases first guideline on digital health interventions. Available at: https://www.who.int/news-room/detail/17-04-2019-who-releases-first-guideline-on-digital-health-interventions (accessed September 2, 2020).

- Reuters. 2018. International aid saves 700 million lives but gains at risk – report. Available at: https://uk.reuters.com/article/uk-health-globalprogress/international-aid-saves-700-million-livesbut-gains-at-riskreport-idUKKCN1MO0WI (accessed September 2, 2020).

- The Lancet. 2018. The Lancet Commission on global mental health and sustainable development. Available at: https://www.thelancet.com/commissions/global-mental-health (accessed September 2, 2020).

- Reuters. 2018. Mental health crisis could cost the world $16 trillion by 2030. Available at: https://www.reuters.com/article/us-health-mental-global/mental-health-crisis-could-cost-theworld-16-trillion-by-2030-idUSKCN1MJ2QN (accessed September 2, 2020).