Artificial Intelligence for Health Professions Educators

A Call to Action

Artificial intelligence (AI) is already impacting many facets of American life and is poised to dramatically alter the maintenance of health and the delivery of health care. The explosion of information available to inform the work of health professionals exceeds each individual’s capacity to process it effectively [1]. Learning to wield collective knowledge to augment their own personal abilities will distinguish the health provider of the future from the health provider of the past. AI will help enable this evolution by supplementing—not supplanting—the savvy provider. All health professions have an opportunity to leverage AI tools to optimize the care of patients and populations.

In 2019, the National Academy of Medicine released a Special Publication titled Artificial Intelligence in Health Care: The Hope, the Hype, the Promise, the Peril [2]. Intended to support the vision of a learning health system, that publication addressed “the need for physicians, nurses, and other clinicians, data scientists, health care administrators, public health officials, policy makers, regulators, purchasers of health care services, and patients to understand the basic concepts, current state of the art, and future implications of the revolution in AI and machine learning” [3]. This manuscript is intended to complement the prior Special Publication and to serve as a call to action to the health professional education (HPE) community. Educators must act now to incorporate training in AI across the health professions or risk creating a health workforce unprepared to leverage the promise of AI or to navigate its potential perils.

Overview of AI and its Applications in Health Care

It is incumbent on leaders of HPE to enhance their own understanding of AI in order to guide the training of current and future learners. A brief overview is provided here to anchor the subsequent discussion of educational implications (the prior Special Publication provides more detail).

AI is an umbrella term encompassing multiple methods and capabilities. Much of the “hype” is focused on the science fiction vision of a powerful computer capable of functioning like a human being. The much less exciting narrow AI, the development of a tool to support a single, specific task, is the actual reality of AI in 2021. Terms often used alongside AI include algorithms, machine learning, and neural networks; these name related components and subfields of AI rather than synonyms for it. Algorithms are at the center of AI and are continually growing in sophistication [4]. Machine learning algorithms are designed to “learn what to do”: the goal is to enable computers to act without being given coding guidance for each and every step. Machines that sense, think, and act will impact health care in ways that were unimaginable a few short decades ago [2]. Figure 1 outlines various aspects of AI as they relate to the field of medicine.

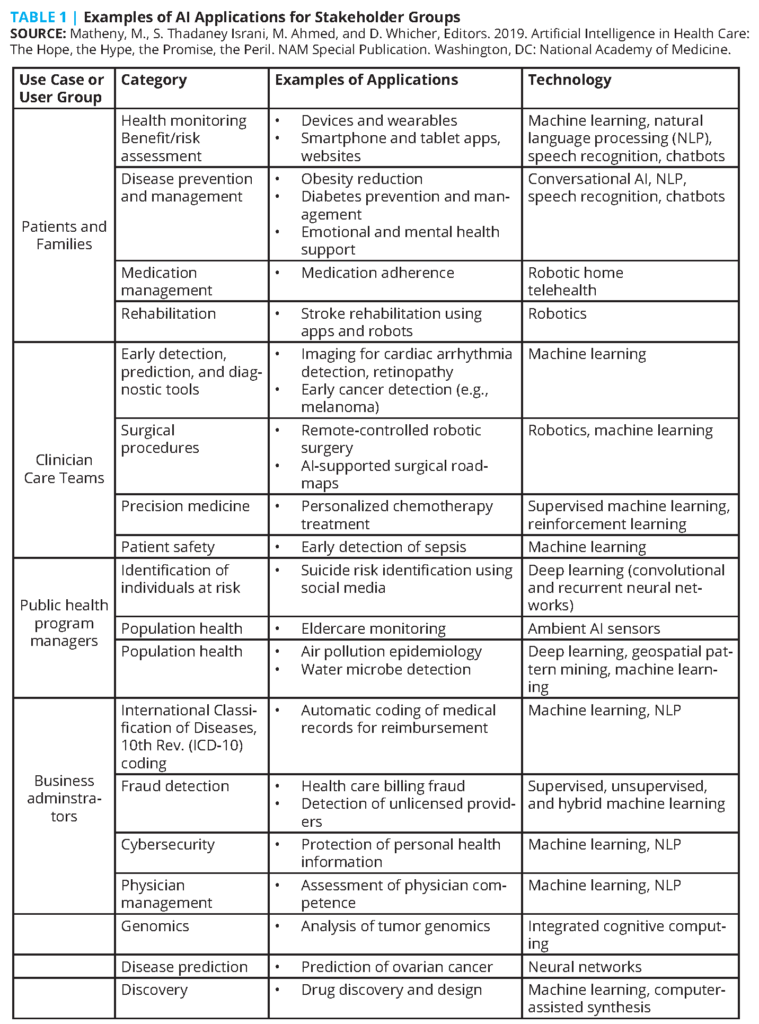

Hope and promise lie in the use of AI to capture and process extensive data that support evidence-based practice. If properly leveraged, AI will increase access, affordability, and quality of health care, and it has the potential to enhance the privacy and security of patient data. Offloading repetitive tasks and administrative information processing (such as documentation burdens) frees providers to focus on creative thought processes and to attend more directly to their patients. It is beyond the scope of this document to fully catalog potential roles for AI in health care, but examples provided by Matheny et al. (see Table 1) demonstrate applications across disciplines and professions [2]. Embracing AI tools as partners will result in augmented intelligence of the entire health care system and the individuals within it.

There is potential peril in any new technology. Compared to prior technologies that have shaped health care, a fundamental difference of AI is its capacity to evolve. Some AI tools are rules-based, programmed to complete a specific task. But an advantage of AI is the ability to train a tool via exposure to a large dataset, allowing the tool to identify within the data its own method to complete a task. Currently, many such data-based tools are locked at the point of application: having learned from past data, they apply a fixed formula to categorize new data. Others are set to learn continuously: as the tool is exposed to more and more data, it may modify how it addresses the task. This capacity for adaptive learning is simultaneously the wonder and the threat of AI. Analogous to a surgical instrument morphing in the surgeon’s hand as they wield it, continuously learning AI tools require vigilance from health professionals in critically appraising their outputs and performance. Human contribution to the design and application of AI tools is fraught with the same biases that already infiltrate U.S. health care systems, and such bias could be amplified by AI if not closely monitored [5]. The rise of AI tools thus has implications for existing health professions training, reminding educators of the need to acknowledge human fallibility in areas such as clinical reasoning and evidence-based medicine. Currently, much of AI development is shaped by economic drivers and corporate interests; health providers must be informed to serve as advocates for the needs of patients and communities. All health providers need a foundational understanding of AI in order to maximize the promise and mitigate the peril.
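To make the distinction between locked and continuously learning tools concrete, the following minimal Python sketch uses a deliberately toy, hypothetical classifier (the `MidpointClassifier` class and all numbers are illustrative assumptions, not drawn from any real clinical tool). It flags a case as positive when a single measurement exceeds the midpoint between the average negative and average positive case. The locked version ignores feedback after deployment; the adaptive version folds each new labeled case into its averages, so its decision threshold drifts as the incoming population changes.

```python
from dataclasses import dataclass, field


@dataclass
class MidpointClassifier:
    """Hypothetical one-feature classifier used only for illustration.

    A case is flagged positive (1) when its value exceeds the midpoint
    between the mean of known negative cases and known positive cases.
    """

    adaptive: bool = False  # False = "locked", True = "continuously learning"
    sums: dict = field(default_factory=lambda: {0: 0.0, 1: 0.0})
    counts: dict = field(default_factory=lambda: {0: 0, 1: 0})

    def _add(self, value: float, label: int) -> None:
        self.sums[label] += value
        self.counts[label] += 1

    def fit(self, values, labels) -> "MidpointClassifier":
        """Initial training on historical data (both tool types do this)."""
        for value, label in zip(values, labels):
            self._add(value, label)
        return self

    @property
    def threshold(self) -> float:
        mean_negative = self.sums[0] / self.counts[0]
        mean_positive = self.sums[1] / self.counts[1]
        return (mean_negative + mean_positive) / 2

    def predict(self, value: float) -> int:
        return int(value > self.threshold)

    def observe(self, value: float, label: int) -> None:
        """Feedback received after deployment.

        A locked tool ignores it; an adaptive tool updates its averages,
        which silently moves its decision threshold.
        """
        if self.adaptive:
            self._add(value, label)


if __name__ == "__main__":
    train_values = [1, 2, 3, 7, 8, 9]
    train_labels = [0, 0, 0, 1, 1, 1]
    locked = MidpointClassifier(adaptive=False).fit(train_values, train_labels)
    adaptive = MidpointClassifier(adaptive=True).fit(train_values, train_labels)

    # After deployment, new positive cases present with lower values.
    for value in [4, 4, 4]:
        locked.observe(value, 1)
        adaptive.observe(value, 1)

    print(locked.threshold, adaptive.threshold)  # 5.0 4.0
    print(locked.predict(4.5), adaptive.predict(4.5))  # 0 1
```

With these toy numbers, three new positive cases at a value of 4 pull the adaptive threshold from 5.0 down to 4.0, so a new patient at 4.5 is flagged by the adaptive tool but not by the locked one. The same input yields different outputs depending on what the tool has seen since deployment, which is why continuously learning tools call for ongoing appraisal.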

AI holds great potential to accelerate effective interprofessional collaborative practice. AI will spur a fundamental alteration in provider roles, shifting the anchor of professional identity from possession of a specific fund of knowledge toward expertise in accessing, assessing, and applying that information. A greater breadth of information will be available to each provider, but the unique perspective of each profession will remain essential to the meaningful application of that information. To support a learning organization and continuously improve the health system, an interprofessional lens is critical to developing effective education in AI [6]. “Coordinated planning among educators, health system leaders, and policy makers is a prerequisite for creating an optimal learning environment and an effective health workforce (Cox and Naylor, 2013). Coordinated planning requires that educators be cognizant of health systems’ ongoing redesign efforts, and that health system leaders recognize the realities of educating and training a competent health workforce” [7].

Some health professionals will make AI a focus of their careers and will pursue extensive training so that health care providers can exert critical influence on the development, implementation, and evolution of AI tools. This call to action, however, focuses on the preparation of all health care professionals: those who will use a variety of AI tools in their routine provision of care. Health professionals are well positioned to learn how to incorporate AI into their work, because principles of critical reasoning and data analysis are already fundamental to health care training. “Health care workers in the AI future will need to learn how to use and interact with information systems, with foundational education in information retrieval and synthesis, statistics and evidence-based medicine appraisal, and interpretation of predictive models in terms of diagnostic performance measures” [2]. Such interactions will demand an attitudinal shift in providers, as AI may come to be perceived more as a colleague than a tool. It is imperative that leaders of HPE act urgently to ensure that all providers are positioned to contribute to the responsible deployment of AI.
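The quoted call for “interpretation of predictive models in terms of diagnostic performance measures” can be illustrated with a short Python sketch. The confusion-matrix counts below are hypothetical, and the function names are illustrative rather than taken from any library. The second function applies Bayes’ rule to show why the same sensitivity and specificity yield very different positive predictive values (PPV) at different disease prevalences, a point clinicians need to grasp before acting on a model’s alert.

```python
def diagnostic_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Standard performance measures from a 2x2 confusion matrix."""
    return {
        "sensitivity": tp / (tp + fn),  # share of true cases the model flags
        "specificity": tn / (tn + fp),  # share of non-cases the model clears
        "ppv": tp / (tp + fp),          # chance a flagged patient truly has the condition
        "npv": tn / (tn + fn),          # chance a cleared patient truly does not
    }


def ppv_at_prevalence(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Positive predictive value via Bayes' rule for a given prevalence."""
    p_true_positive = sensitivity * prevalence               # has condition AND flagged
    p_false_positive = (1 - specificity) * (1 - prevalence)  # no condition BUT flagged
    return p_true_positive / (p_true_positive + p_false_positive)


if __name__ == "__main__":
    # Hypothetical validation set: 1,000 patients, 100 with the condition.
    metrics = diagnostic_metrics(tp=90, fp=90, fn=10, tn=810)
    print(metrics["sensitivity"], metrics["specificity"], metrics["ppv"])  # 0.9 0.9 0.5

    # Same model performance applied in settings with different prevalence.
    for prevalence in (0.01, 0.10, 0.50):
        print(prevalence, round(ppv_at_prevalence(0.9, 0.9, prevalence), 3))
```

With 90 percent sensitivity and specificity, PPV is 0.5 at the 10 percent prevalence of the hypothetical validation set, about 0.08 at 1 percent prevalence, and 0.9 at 50 percent prevalence; in a low-prevalence setting, most alerts from this model would be false positives.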

Simultaneously, there is potential for educators to exploit powerful AI tools within the process of education itself. Administrative burdens associated with educational programs could be offset by AI, freeing educators to focus on more creative and relational aspects of their work. Particularly intriguing is the opportunity to enhance the education of each professional throughout their career. Analogous to “precision medicine,” educators can foster “precision education” by leveraging data to individualize training and assessment. Data can inform the strategic deployment of educational resources and strengthen the link between practice and education, and educators can advocate for the development of appropriate tools.

This manuscript thus addresses the duality that health professions educators must consider now: the need for training in AI and the role of AI in training.

Addressing Hesitance to Incorporate AI into Health Professions Education

The authors of this manuscript have had the opportunity to advocate for better training in AI in various educational venues and with audiences across health professions. Many doubts have been raised in those discussions, and they warrant direct and transparent responses. Skepticism does not negate this call to action; indeed, many of the concerns about AI argue all the more for appropriate education of learners [8,9].

Fear of AI leading to a dystopian society where robots overtake their creators has been immortalized through science fiction, leading some to believe that the dangers of AI far outweigh its benefits [10]. Within health care and HPE, concerns about AI are more specific and therefore should be more readily addressed. Common concerns include potential job loss, threats to the traditional “expert” role, a lack of strategies for integrating AI into current information technology and electronic health record (EHR) systems, a shortage of well-trained personnel for data collection and analysis [11], and the worry that AI is a clinical “black box” whose decisions are not fully transparent.

The basic premise of AI is to supplement, not supplant, the work of health professionals and educators. Misunderstanding this concept is a primary cause of hesitancy around AI, in practice and in education, along with a more fundamental resistance to change. A lack of knowledge of what AI is and what it can or cannot do contributes to this resistance. The medical disciplines of radiology and pathology exemplify these points. Studies have documented algorithms outperforming radiologists in identifying malignant tumors, but such findings speak more to the value of AI as a tool than to the likelihood that AI will replace radiologists [12]. In practice, AI systems are designed to accomplish specific tasks, such as reading and interpreting radiographic images; radiologists are also expected to integrate multiple AI-generated outputs, consult with other health professionals, and interact with patients, all of which are roles best suited to human-to-human interaction.

Examples from other health professions include advances in AI related to mental health care. AI is being used to address the critical shortage of mental health professionals throughout the world. Woebot, a clinically proven Cognitive Behavioral Therapy (CBT) chatbot platform, uses AI to form a therapeutic alliance with the user and adapts the CBT approach based on the individual’s presenting symptoms [13].

Another clinically proven AI implementation in mental health is Tess, which employs educational, conversational, and therapeutic approaches to foster behavior change in the user [14]. Tess has been used by more than 19 million people worldwide. While millions of people are using AI to supplement the work of mental health professionals, competency domains have not been updated to reflect the knowledge base that practitioners need in relation to AI approaches or to reflect the needed integration of existing AI usage into the treatment process. Certain applications of AI, like deep learning and advanced data analytics, could be viewed as partners and collaborators and not feared as displacers of health care professionals [15]. AI will not replace providers, but providers who leverage AI will replace those who do not.

The already overloaded curriculum across the health professions creates resistance to adding competencies in AI. The dense curriculum has implications for student well-being and faculty burnout. But, as with all evolving areas of medicine, determining how AI relates to existing content and weighing the relative impact of all areas of content on future practice will be critical to identifying paths forward. As new information in the health professions continues to grow exponentially, “information overload” increasingly exceeds the cognitive capacity of students and educators. This growing incongruence has led to a shift in health professions educators’ thinking about instruction, with calls to refocus learning on “knowledge management” rather than the long-standing “information acquisition” model. The irony is that adding AI as a subject in the health professions, and as a tool to manage the curriculum, can actually reduce curricular load. By migrating some biomedical and clinical knowledge to AI algorithms, educators can create more breathing room in health professions curricula and in the lived experiences of students and faculty [16,17].

There is also concern about a lack of faculty with the appropriate expertise to create and deliver curricula in AI. Developing new relationships between the educational program and experts in the health system may offer a starting point. Health professions faculty members responsible for curricular oversight will need to improve their digital literacy regarding AI and topics such as mathematical modeling and decision theory; faculty development must therefore be a priority. Similarly, a lack of knowledge and skills to make the best use of AI in the educational process, and a lack of resources to develop such tools, stymie progress.

Health professions instructors who use AI and machine learning for predictive analytics or to offset their workloads must be educated on both the benefits and the risks, particularly regarding bias in big data and algorithms. The example of Microsoft’s chatbot Tay is a cautionary tale about machine learning deployed without adequate oversight. Designed as an experiment in “conversational understanding,” Tay was launched as a Twitter account openly identified as an AI. Within 24 hours, hackers overwhelmed the system with hateful and racist comments, leading Tay to spew messages reflective of its data input [18]. Students and educators must realize that AI is not a static function; it must be continually analyzed, evaluated, and updated. Because the performance of AI algorithms depends on data collected by humans, unconscious biases can be incorporated unintentionally. Previously conducted research can also introduce bias into AI-derived outcomes, because underrepresented and marginalized groups have traditionally been excluded from datasets [19]. Education in AI is critical so that health professionals understand their own unconscious biases and develop the skills to explain AI-supported decision making to marginalized groups that have a history of mistrust with and in the health professions.
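One concrete skill implied here is auditing whether a model errs more often for some groups than for others. The Python sketch below computes, for each group, how many true cases it contributes and the false-negative rate (the share of those true cases the model misses). The function name, records, group labels, and numbers are hypothetical; a real audit would also examine other error measures and the provenance of the data.

```python
from collections import defaultdict


def audit_false_negatives(records):
    """Per-group count of true cases and false-negative rate.

    records: iterable of (group, true_label, predicted_label), labels 0 or 1.
    Returns {group: (number_of_true_cases, false_negative_rate)}.
    """
    positives = defaultdict(int)
    missed = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 0:  # a true case the model failed to flag
                missed[group] += 1
    return {group: (positives[group], missed[group] / positives[group]) for group in positives}


if __name__ == "__main__":
    # Hypothetical evaluation records; group B is underrepresented.
    records = (
        [("A", 1, 1)] * 7 + [("A", 1, 0)] + [("A", 0, 0)] * 5
        + [("B", 1, 1), ("B", 1, 0), ("B", 0, 0)]
    )
    for group, (n_cases, fnr) in audit_false_negatives(records).items():
        print(f"group {group}: {n_cases} true cases, false-negative rate {fnr:.1%}")
```

In this toy example the model misses 1 of 8 true cases in group A but 1 of 2 in group B. Group B also contributes far fewer cases, so its estimate is both worse and less certain, echoing the concern about groups excluded from the data on which AI is built.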

Concerns about potential negative impacts of AI and a lack of resources to implement educational adjustments argue all the more for educators to tackle this challenge now. It is imperative that educators provide training that empowers all providers to fulfill their professional duty to care for and protect patients. The further development and incorporation of AI will not stop because of the fears or concerns of some educators. Promoting dialogue and improving partnerships between the health disciplines and computer scientists would enable the development of realistic, usable, and effectively applied technology. Importantly, the students and learners being educated now, who represent the future of the health professions, have shown greater acceptance of AI in HPE and practice. In a survey of medical students, a majority agreed that AI will revolutionize (77 percent) and improve (86 percent) radiology, and 83 percent disagreed that human radiologists will be replaced. More than two-thirds (71 percent) agreed that AI should be included in medical training [20]. The time is right for health professions educators to position their learners to understand and support the use of AI in the clinical setting with the intent to provide safe, quality patient care.

Thus, health professions educators must consider two aspects of the emergence of AI in health and health care delivery:

- What training in AI will health professionals need to do their jobs well?

- How can AI capabilities be leveraged to improve the training of health professionals?

Training in AI

As mentioned previously, the scope of this manuscript is to consider foundational needs of all providers, not to outline the needs of those who will choose to specialize in AI development. Training in AI is ripe for interprofessional educational approaches because all fields are relatively early on in this process and the relationships of work across professions will likely shift as AI advances. Learning about, from, and with one another adds rich perspectives that will enable health professions to anticipate and mitigate perils and amplify the promise of AI. The following domains outline key elements to be addressed in education about AI.

Information Overload

Historically, the acquisition, synthesis, and proper application of information have characterized the training of health care professionals. Because of the explosion of data from EHRs, imaging, biometrics, multi-omics, and remote monitoring via sensors, analyzing such data increasingly requires sophisticated algorithms. Traditional curricula can no longer prepare health professionals for evolving needs that require knowledge management and effective use of machine learning and data analytics [16]. In addition, trainees need to be prepared for an increasing focus on precision health, driven by the ability to gather detailed, personalized data using multiple digital tools (e.g., an Apple Watch can collect various physiological measurements, including sleep assessment) that will help people adapt their behavior and make healthy lifestyle choices. Explicitly naming this challenge of information overload helps health professions learners understand the need for AI support and for their own continual learning.

Foundations in AI

All providers need a fundamental understanding of what AI is, how it works, and how it differs from other forms of technology currently in practice. A general understanding of various ways in which AI is applied to the work of health professionals is appropriate at early stages of training; as one advances in a given field, additional training will be needed in more specific applications used in one’s discipline. Understanding the provider’s role in oversight of AI applications, including regulatory and ethical concerns, will aid in meeting the collective professional obligation to ensure that these new tools are used in a manner that optimizes their promise and minimizes potential perils.

New Competencies

Recent literature offers suggestions regarding the elements of training in AI to be incorporated into HPE. McCoy et al. [21] describe broad competencies as:

New roles that health professionals must assume in delivering care:

- Evaluator: Being able to evaluate when a technology is appropriate for a given clinical context and what inputs are required for meaningful results

- Interpreter: Having the knowledge and skills to interpret results with a reasonable degree of accuracy, including knowing potential sources of error, bias, or clinical inappropriateness

- Communicator: Communicating results and the underlying process in a way that patients and other health professionals can understand

Competencies for understanding AI in a broader professional context:

- Stewardship: Being a responsible steward of patient data to ensure basic trust between provider and patient

- Advocacy: Understanding the risks around data security and privacy; health care providers must be equipped to advocate for the development and deployment of ethical and equitable systems

McCoy et al. and Law et al. argue for the additional need for explicit training of health professionals in fundamental computer programming skills, an understanding of good practices in software design, and the ability to envision how to incorporate new tools into practice [20, 21]. Training in programming may not be indicated for every health professions student, but all of them need a fundamental understanding of best practices in, and limitations of, algorithmic development and maintenance.

Considering Competency Broadly

Beyond knowledge of AI, other competency domains necessary for the health professions will be affected as well. If AI is to supplement, not supplant, the work of providers, then considering the qualitative attributes that providers need to partner effectively with AI is both warranted and necessary. For example, as AI is incorporated into clinical encounters, health professions students need training in new communication skills and professionalism to retain the critical humanistic elements of care delivery. Skills such as clear communication, empathy cultivation, health advocacy, and collaboration will be increasingly needed to prepare professionals who can connect effectively with patients while being facile with technology [22, 23]. Fostering communication, empathy, and caring will require more attention and expertise [24]. In the words of a quotation attributed to many, but most often to Gino Wickman, “Systemize the predictable, so you can humanize the exceptional.” Such a “high tech, high touch” approach seems to be gaining popularity. According to 2016 Association of American Medical Colleges data, training in medical humanities has consistently increased in recent years, with 94 percent of medical schools offering either required or elective courses that position students to better understand the needs of diverse patients [25].

Rethinking Traditional Content

The emergence of AI also suggests that traditional areas of content may need to be addressed in new ways. The proliferation of information available to clinicians at the time of decision making has exceeded the processing capacity of an individual provider; AI can assist by augmenting one’s individual capabilities with collective knowledge. This reflects increasing acknowledgment over recent decades of the realities of human diagnostic error [26]. Similarly, society is coming to terms with the structural biases that permeate every system, including health care. As noted previously, many are quick to point to the risk that AI could amplify bias that already exists, which argues for stronger training around human bias and existing structural determinants of health. Health professions students thus need deeper training in metacognition, an understanding of how humans think and make decisions. Systematic training programs are required to help learners recognize and overcome historical biases [27]. Because AI draws on existing datasets, additional training is needed to emphasize systematic and historical biases, errors, and omissions in health data. Health professionals must be trained to embrace responsibility for ensuring data quality. All providers contribute to the collection, and importantly the documentation, of information about patients and communities. Education in responsible documentation processes and appropriate contribution to EHRs will strengthen the datasets on which AI relies. Finally, clinicians must be taught to apply an understanding of the contexts of health and health care when determining the relevance of AI applications in a particular milieu and for particular populations.

Shifting Interprofessional Roles

HPE must anticipate shifts in interprofessional practice patterns. The vision of what it means to be a health care provider will move away from individual stewards of information toward systems-thinking experts in accessing, assessing, and applying information to the needs of an individual or a given community. It is critical that each provider be taught that data inputs shape the AI outputs that guide care and treatment. Wearable devices connected by AI will increasingly integrate prevention and care delivery. Training in digital health literacy is needed to promote understanding of such data not only through the lens of a specific health profession but also in terms of how such information fits within the expertise of other health professions and the broader health system. Data collected by patients themselves through smart monitoring technology will supplement data submitted by each care provider. Health professions training needs to prepare students for the paradigm shift from traditional “disease-oriented medicine” to the promotion of wellness through a partnership between patients and providers. The composition of the interprofessional team will be altered, as health care providers will collaborate with data scientists and other digital experts [28]. The role of the team will shift toward a more interprofessional, person-centered approach, guiding individuals toward healthier options and decision making based on deep learning algorithms. Educators must thus develop interprofessional learning opportunities to recognize data biases, bioethical challenges, and implications for liability as teams increasingly rely on data-driven algorithms to drive decision making [28].

It may be that a different type of person will succeed as a health professional. An increasing recognition of the value of personal humility that positions a provider to accept external input, whether from fellow members of the care team or from AI tools, must balance a traditional value for strong personal knowledge. Educating health professions learners together on the risks and benefits of AI and machine learning will set up a system of shared knowledge as students become members of the health professional team.

Ethical and Professional Implications

The duty of health professionals to engage in ethical and regulatory oversight of AI also argues for targeted education. In the United States, when decision makers at health care organizations were asked how confident they were that AI would improve medicine, roughly 50 percent feared that it would produce fatal errors, have operational flaws, and fail to meet expectations. In the United Kingdom, 63 percent of the adult population is not comfortable allowing personal data to be used to improve health care through AI. Training must be developed to ensure that providers understand and monitor data protection requirements, patient privacy, and confidentiality; minimize sampling bias; and demand that AI developers communicate transparently and act on these concerns [29]. Further collaboration among interprofessional educators will be necessary to clarify what additional competencies are needed and how historical competencies must be refined in light of the increasing penetration of AI in health care.

AI in Training

In addition to preparing health professions learners to utilize AI tools in their future work, educators should consider the tremendous potential of AI to improve the process of education. Multiple examples, which are laid out below, have already emerged that show how leveraging AI can propel innovative approaches to teaching and learning across the continuum of training.

Easing Administrative Burdens

Information is critical to educational systems and their ability to recognize and address the learning needs of individual health professionals. However, there are significant administrative aspects to educational systems. Akin to the clinical application of AI to reduce a provider’s burden of documentation, leveraging AI could free bandwidth for direct interactions with students and for more creative activities to advance innovation in HPE. As an example, the Georgia Institute of Technology [30] sought to expand its capacity for online education, but leaders recognized that the demand for teaching assistants (TAs) would exceed supply. Identifying that many questions for TAs are administrative in nature rather than focused on deeper learning issues, they developed an AI application, Jill Watson, to function as a TA. Jill was successful in quickly addressing simple questions and was able to recognize more advanced issues that needed to be referred to human educators. Ongoing refinement is under way to optimize functionality.

Another example comes from the New York University Grossman School of Medicine. Upon the announcement that the school would award full-tuition scholarships to all enrolled students, leaders anticipated a surge in applications to the already prestigious program. They developed a dataset of 53 variables extracted from the admissions application records of more than 1,000 previous matriculants and performed an analysis to identify factors correlated with subsequent success in medical school. Their big data approach enabled enhanced processing of subsequent applications, incorporating more holistic measures at the point of initial screening in a manner intentionally designed to mitigate prior biases common to admissions processes nationally [31]. Other uses have similarly eased the workload of teacher-scholars by triaging relevant research articles indexed by PubMed and by automating activities such as narrowing the pool of applicants for specialized programs and dividing classes based on competencies and motivation to learn [32].
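The source does not describe NYU’s statistical method beyond identifying factors correlated with later success. As a generic illustration only, the Python sketch below ranks candidate variables by the strength of their Pearson correlation with an outcome. The function names, variable names, and values are hypothetical toy data, and correlation alone says nothing about causation or fairness, which is why screening processes such as the one described need deliberate safeguards against prior biases.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric sequences.

    Raises ZeroDivisionError if either sequence is constant.
    """
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(ss_x * ss_y)


def rank_by_correlation(variables, outcome):
    """Rank candidate variables by |r| with the outcome, strongest first."""
    scores = {name: pearson_r(values, outcome) for name, values in variables.items()}
    # sorted() is stable, so ties keep their original order.
    return sorted(scores.items(), key=lambda item: abs(item[1]), reverse=True)


if __name__ == "__main__":
    # Hypothetical toy data for five past matriculants.
    outcome = [1, 2, 3, 4, 5]  # e.g., a later performance score
    variables = {
        "variable_a": [2, 4, 6, 8, 10],
        "variable_b": [5, 4, 3, 2, 1],
        "variable_c": [1, 3, 2, 3, 1],
    }
    for name, r in rank_by_correlation(variables, outcome):
        print(f"{name}: r = {r:+.2f}")
```

Ranking by absolute correlation treats a strong negative association (variable_b) as just as informative as a strong positive one; a real analysis would also need to account for confounding and for how each variable was originally recorded.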

Delivery of Content and Enabling Adaptive Learning

As applied to content delivery, AI enables adaptive learning and assessment with the goal of “serv[ing] the benefits of a well-trained human tutor providing students support, guidance, interpreting student answers and encouraging more dialogue on the discussion” [33]. For example, an AI application known as BRCA Gist has been found to support learning about genetic risk in breast cancer [34]. Developed to help patients navigate the personal decision of whether to pursue genetic testing, this tool uses complex AI applications to create avatars that converse with the user, adjusting their responses to the patient’s text replies. Students selected to interact with the system as if they were patients demonstrated increased declarative knowledge of genetic risk. Use of such technology in educational programs would enable educators to expand the mix of cases to which students are exposed and give students the opportunity to discover new concepts interactively, with prompt feedback from the system. The learning process is no longer bound by time and place and instead offers an “apprenticeship,” tutor-type experience. AI-based performance trainers already exist in the health professions and have been found to provide the needed tutoring, resulting in higher performance scores than learning in the traditional classroom.
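A very simple form of adaptive sequencing is a one-up/one-down “staircase”: after a correct answer the next case is harder, and after an incorrect answer it is easier. The Python sketch below assumes a hypothetical 1 to 5 case-difficulty scale and illustrative function names; real adaptive learning systems typically maintain much richer models of the learner, so this shows only the basic feedback loop.

```python
def next_difficulty(current: int, correct: bool, lowest: int = 1, highest: int = 5) -> int:
    """One-up/one-down rule: harder after a correct answer, easier after an error."""
    step = 1 if correct else -1
    return max(lowest, min(highest, current + step))  # stay within the scale


def run_session(responses, start: int = 3):
    """Starting difficulty followed by the difficulty chosen after each response."""
    levels = [start]
    for correct in responses:
        levels.append(next_difficulty(levels[-1], correct))
    return levels


if __name__ == "__main__":
    # Hypothetical answers: three correct in a row, then an error.
    print(run_session([True, True, True, False]))  # [3, 4, 5, 5, 4]
```

Clamping at the ends of the scale keeps a strong learner at the hardest cases rather than running off the scale; richer systems would also vary case content, not just difficulty.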

Providing Information and Feedback

AI has also been used in higher education to provide individualized feedback, build learning pathways, and decrease costs [35]. It will not replace human teachers, although it will change the work that humans do. In one case, the master’s students of a computer science professor designed a teacherbot to serve as a TA, promptly answering online questions at all hours of the day for multiple students simultaneously [18]. Similar models have been used to predict which students will be low-engagement participants in online class activities and are likely to require additional assistance throughout the course [36]. This could be especially valuable in Massive Open Online Courses (MOOCs). Additionally, many health professions programs have instituted dashboards to compile and display feedback from the clinical learning environment. Layering AI tools onto these dashboards could ease the data collection burden, signaling which specific feedback is needed and which supervisors could be in a position to provide it.
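The cited work used predictive models; the Python sketch below is a much simpler, rule-based stand-in that shows the general idea of flagging students early for follow-up. It assumes hypothetical weekly counts of course interactions per student and flags anyone whose total falls below a chosen fraction of the class median. The function name and the 0.5 cutoff are illustrative choices, not a validated method or threshold.

```python
from statistics import median


def flag_low_engagement(activity, fraction=0.5):
    """Return IDs of students whose total activity is below fraction x class median.

    activity: {student_id: [weekly interaction counts]} (hypothetical data format).
    """
    totals = {student: sum(weeks) for student, weeks in activity.items()}
    cutoff = fraction * median(totals.values())
    return sorted(student for student, total in totals.items() if total < cutoff)


if __name__ == "__main__":
    activity = {
        "s1": [10, 12, 9],
        "s2": [8, 7, 9],
        "s3": [1, 0, 2],
        "s4": [11, 10, 12],
    }
    print(flag_low_engagement(activity))  # ['s3']
```

Comparing against the class median rather than a fixed number lets the rule adapt to courses with very different activity levels, although a trained model could weigh many more signals.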

Supporting Competency-Based Assessment and Individualized Learning Pathways

Learners in any health profession come to their training with a rich diversity of prior educational and life experiences; as such, their true educational needs vary. Recent interest in competency-based approaches to HPE emphasizes monitoring learning outcomes over the traditional reliance on time as a proxy for learning. Competency-based approaches rely on rich programmatic assessment data about each learner. Ideally, each health professions learner is assessed by a variety of supervisors within a complex clinical system. Some standardization of expectations is necessary, yet this diversity of supervisor perspectives on performance is also a source of richness. A historical barrier to implementing competency-based approaches has been the challenge of managing a wealth of performance evidence and of visualizing and interpreting it in a manner that informs future learning. Preliminary work at some medical schools [37-39] demonstrates the power of programmatic assessment and individualized performance dashboards that span courses and time, but these programs are still limited by the capacity of humans to process the data. AI could be applied to identify consistent signals in performance evidence that would in turn steer additional diagnostic assessments or learning experiences. Akin to precision medicine, AI enables precision education by identifying individual performance trends and making recommendations to support individualized learning pathways.
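
One simple way to look for “consistent signals” in performance evidence is to require that a pattern appear across several independent supervisors before acting on it. The sketch below assumes ratings on a hypothetical 1 to 5 scale with an assumed expected level of 3.0, and flags a competency only when its mean rating falls below expectation and at least two distinct supervisors gave low ratings. It is an explainable heuristic standing in for the statistical or machine learning models the text envisions; its output is meant to prompt further diagnostic assessment, not to serve as a judgment.

```python
from collections import defaultdict
from statistics import mean


def consistent_concerns(
    ratings: list[tuple[str, str, float]],  # (supervisor, competency, rating on a 1-5 scale)
    expected: float = 3.0,     # hypothetical expected level at this stage of training
    min_supervisors: int = 2,  # how many independent raters must agree
) -> list[str]:
    """Return competencies where low performance is corroborated across supervisors."""
    by_comp: dict[str, list[tuple[str, float]]] = defaultdict(list)
    for supervisor, competency, score in ratings:
        by_comp[competency].append((supervisor, score))

    flagged = []
    for competency, entries in sorted(by_comp.items()):
        avg = mean(score for _, score in entries)
        low_raters = {sup for sup, score in entries if score < expected}
        # A single harsh rater is not enough; require corroboration.
        if avg < expected and len(low_raters) >= min_supervisors:
            flagged.append(competency)
    return flagged


if __name__ == "__main__":
    ratings = [
        ("a", "communication", 2), ("b", "communication", 2.5), ("c", "communication", 4),
        ("a", "procedures", 1), ("b", "procedures", 4), ("c", "procedures", 4),
    ]
    print(consistent_concerns(ratings))  # prints ['communication']
```

Here the single low “procedures” rating is treated as one supervisor’s perspective rather than a signal, which reflects the value the text places on diverse supervisor views.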

Streamlining Continual Professional Development

Ongoing education is a fundamental aspect of every health profession, but continuing professional development has also suffered from this lack of customization, often aligning poorly with each provider’s actual practice. The American Board of Medical Specialties recently argued that “continuing certification must change to incorporate longitudinal and other innovative formative assessment strategies that support learning, identify knowledge and skills gaps, and help diplomates stay current” [40]. Certification must be based on the competency demands of one’s practice. AI offers the potential to monitor a provider’s patient panel and outcomes and to recommend appropriate educational resources just in time. It can also be used to characterize one’s true scope of practice and recommend appropriate, targeted assessments for continuing certification, thus strengthening the link between practice and licensure.
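
The idea of deriving a provider’s actual scope of practice from their patient panel can be sketched as follows. Assuming a hypothetical list of encounter categories for one provider and a hypothetical catalog mapping categories to assessment modules, the code ranks categories by their share of the panel and recommends modules for the common ones the provider has not yet completed. The category names, module names, and 10% share threshold are illustrative assumptions; a real system would need validated clinical groupings and outcome data.

```python
from collections import Counter


def recommend_assessments(
    encounters: list[str],    # one clinical category per encounter (hypothetical coding)
    modules: dict[str, str],  # category -> assessment module (hypothetical catalog)
    completed: set[str],      # modules already completed in the current cycle
    min_share: float = 0.10,  # categories below this share of the panel are ignored
) -> list[str]:
    """Recommend modules matching the provider's most frequent practice areas."""
    counts = Counter(encounters)
    total = sum(counts.values())
    recs = []
    for category, n in counts.most_common():  # most frequent categories first
        module = modules.get(category)
        if n / total >= min_share and module and module not in completed:
            recs.append(module)
    return recs


if __name__ == "__main__":
    panel = ["diabetes"] * 6 + ["hypertension"] * 3 + ["asthma"]
    catalog = {"diabetes": "Diabetes update", "hypertension": "BP management"}
    print(recommend_assessments(panel, catalog, {"BP management"}))
    # prints ['Diabetes update']
```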

Optimizing the Deployment of Educational Resources for Learning

Educational resources are limited across all health professions. More efficient deployment of advising, educational experiences, and assessment practices would stretch scarce material resources as well as the time and effort of educators and staff. In the field of social work, AI is being explored to better anticipate which youth in foster care will require special support, while examining how human bias embedded in training data can be minimized, both computationally and by keeping humans involved in final decision making [41]. Similarly, health professions educators could leverage AI to predict which learners and practitioners need extra learning support.
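
A minimal sketch of predicting which learners may need extra support follows. It fits a plain logistic regression by batch gradient descent on hypothetical features (for example, a normalized early quiz score and a normalized engagement measure) and, echoing the social work example, does not make decisions itself: it only produces a list for a human advisor to review. The features, learning rate, epoch count, and 0.5 review threshold are assumptions; a real deployment would need representative data, validation, and an audit for bias against particular learner groups.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(X: list[list[float]], y: list[int], lr: float = 0.5, epochs: int = 2000) -> list[float]:
    """Fit weights (bias first) by batch gradient descent on the log-loss."""
    w = [0.0] * (len(X[0]) + 1)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for xi, yi in zip(X, y):
            p = sigmoid(w[0] + sum(wj * xj for wj, xj in zip(w[1:], xi)))
            err = p - yi  # derivative of log-loss with respect to the linear score
            grad[0] += err
            for j, xj in enumerate(xi, start=1):
                grad[j] += err * xj
        w = [wj - lr * g / len(X) for wj, g in zip(w, grad)]
    return w


def predict(w: list[float], x: list[float]) -> float:
    """Estimated probability that a learner needs extra support."""
    return sigmoid(w[0] + sum(wj * xj for wj, xj in zip(w[1:], x)))


def review_queue(w: list[float], learners: dict[str, list[float]], threshold: float = 0.5) -> list[str]:
    """Learners at or above the threshold, highest score first, for human review."""
    scored = [(predict(w, x), name) for name, x in learners.items()]
    return [name for p, name in sorted(scored, reverse=True) if p >= threshold]


if __name__ == "__main__":
    # Made-up training data: [quiz score, engagement], label 1 = later needed support.
    X = [[0.9, 0.8], [0.8, 0.9], [0.7, 0.7], [0.3, 0.2], [0.2, 0.4], [0.1, 0.1]]
    y = [0, 0, 0, 1, 1, 1]
    w = fit_logistic(X, y)
    print(review_queue(w, {"a": [0.85, 0.9], "b": [0.15, 0.2], "c": [0.2, 0.25]}))
```

Keeping the model output as a ranked queue, rather than an automatic assignment, preserves the human role in final decisions that the text emphasizes.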

Enabling the Learning Health Care System

The ideal of a learning health care system [5] can be realized with support from AI. The Amplifire learning platform illustrates these concepts well [42]. Within Amplifire, health system metrics and EHR data are mined to identify opportunities for improvement and shared learning needs, which are then converted into system-wide training across professions. During the assessment phase, each participant is asked not only to answer questions but also to rate their confidence in each answer. Participants who answer incorrectly but with little confidence may simply need more training. AI helps to uncover individuals harboring confidently held misinformation; addressing this group is critical to the success of the entire learning community. Thus, AI enables the health system to tailor both the depth of and approach to education in order to meet each individual’s learning needs, and thus the system’s.
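
The confidence-based assessment idea described for Amplifire can be illustrated by classifying each response by correctness and self-rated confidence. This sketch is not Amplifire’s implementation or API; the four category labels, the 0.7 confidence cutoff, and the rule of two or more confidently wrong answers are assumptions made for illustration. Its point is that confidently wrong responses, which signal misinformation, are kept separate from unsure wrong responses, which signal a simple knowledge gap.

```python
from collections import Counter
from enum import Enum


class Category(Enum):
    MASTERED = "correct and confident"
    UNSURE = "correct but unsure"
    UNINFORMED = "incorrect and unsure"      # likely just needs more training
    MISINFORMED = "incorrect but confident"  # priority: confidently held misinformation


def categorize(correct: bool, confidence: float, cutoff: float = 0.7) -> Category:
    """Classify one response; confidence is a self-rating in [0, 1]."""
    confident = confidence >= cutoff
    if correct:
        return Category.MASTERED if confident else Category.UNSURE
    return Category.MISINFORMED if confident else Category.UNINFORMED


def misinformed_learners(responses: list[tuple[str, bool, float]], min_count: int = 2) -> list[str]:
    """Learners with repeated confidently wrong answers, for targeted remediation."""
    counts = Counter(
        learner
        for learner, correct, conf in responses
        if categorize(correct, conf) is Category.MISINFORMED
    )
    return sorted(learner for learner, n in counts.items() if n >= min_count)


if __name__ == "__main__":
    responses = [("a", False, 0.9), ("a", False, 0.8), ("b", False, 0.2), ("b", False, 0.9)]
    print(misinformed_learners(responses))  # prints ['a']
```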

Mitigating Burnout

AI has been applied effectively to support mental health and well-being. Automating parts of instructors’ workload could help mitigate some of the stress and burnout experienced by health professions educators. Mental health practitioners have applied AI to the early detection of health care workers at high risk for mental health disorders [43]; similar algorithms could be developed to monitor health professions students for stress and anxiety. Likewise, natural language processing models developed through a smartphone app and pilot tested among adolescents might help educators and preceptors identify health professions students at risk of suicide, a recognized concern among medical students and veterinary professionals [44, 45].

Transformation of training programs and educational practice is needed, not only to improve learning effectiveness and reduce the costs of HPE, but also to provide a better learner experience that ultimately leads to higher-quality care for patients.

Next Steps: Answering the Call to Action

Educators must collaborate to better define how AI training should be incorporated into HPE. Inaction among educators poses a risk, because adequate training of health professionals in AI is the strongest tool for realizing its promise rather than its perils. The broad categories of understanding outlined in prior sections will need to be formally articulated as learning objectives and competencies. Appropriate “dosing” of training must be considered, along with defining the core content needed across health professions and the advanced training needed in particular professions and in the various specialties within each profession. Additionally, educational administrative challenges common across health professions offer shared opportunities to leverage AI to improve the process of education.

This manuscript is intended to serve as a call to action; in it, the authors offer concrete steps that health professions educators should take now (see Box 1). Collective action by educators across health professions will foster a future in which the power of AI is optimized and its unintended consequences are mitigated.

Limitations

This manuscript is informed by the experience of authors across many health professions, who are themselves in the process of learning about AI and its implications for health, health care, and education in the health professions. AI is a rapidly advancing field, so the ideas offered here may soon become dated. Some health professions and disciplines are currently more affected than others; however, as the advancing capabilities of AI are realized over time, educational objectives at all levels must evolve. An informal review of current training in AI informed this overview, but a collaborative research agenda is needed across health professions educational programs to clarify best practices.

Join the conversation!

Tweet this! “Educators must act now to incorporate training in AI across health professions or risk creating a health workforce unprepared to leverage the promise of AI or navigate its potential perils.” Read more in a new #NAMPerspectives: https://doi.org/10.31478/202109a

Tweet this! A new #NAMPerspectives examines how training in appropriate use of AI will become increasingly important for health professions educators, and highlights AI’s ability to supplement, not replace, health care workers: https://doi.org/10.31478/202109a

Tweet this! When properly used, AI will increase access, affordability, and quality of health care and free health care professionals to operate at the top of their license by offloading repetitive and administrative tasks. Read more: https://doi.org/10.31478/202109a #NAMPerspectives

References

- Stead, W. W., J. R. Searle, H. E. Fessler, J. W. Smith, and E. H. Shortliffe. 2011. Biomedical informatics: Changing what physicians need to know and how they learn. Academic Medicine 86(4):429-434. https://doi.org/10.1097/ACM.0b013e3181f41e8c.

- Matheny, M., S. Thadaney Israni, M. Ahmed, and D. Whicher, Editors. 2019. Artificial Intelligence in Health Care: The Hope, the Hype, the Promise, the Peril. NAM Special Publication. Washington, DC: National Academy of Medicine.

- National Academy of Medicine. n.d. The Learning Health System Series: Continuous Improvement and Innovation in Health and Health Care. National Academy of Medicine Leadership Consortium for Value & Science-Driven Health Care. Available at: https://nam.edu/wp-content/uploads/2015/07/LearningHealthSystem_28jul15.pdf. (accessed September 2, 2021).

- Randhawa, G. K., and M. Jackson. 2020. The role of artificial intelligence in learning and professional development for healthcare professionals. Healthcare Management Forum 33(1):19–24. https://doi.org/10.1177/0840470419869032.

- Myers West, S. 2019. Discriminating Systems: Gender, Race and Power in AI. Video. AI Now Institute. Available at: http://hdl.handle.net/1853/62480 (accessed September 2, 2021).

- deBurca, S. 2000. The learning health care organization. International Journal for Quality in Health Care 12(6):457–458. https://doi.org/10.1093/intqhc/12.6.457.

- IOM (Institute of Medicine). 2015. Measuring the Impact of Interprofessional Education on Collaborative Practice and Patient Outcomes. Washington, DC: The National Academies Press. P. 182.

- Borenstein, J., and A. Howard. 2021. Emerging challenges in AI and the need for AI ethics education. AI and Ethics 1(1):61–65. https://doi.org/10.1007/s43681-020-00002-7.

- Owens, K., and A. Walker. 2020. Those designing healthcare algorithms must become actively antiracist. Nature Medicine 26(9):1327–1328. https://doi.org/10.1038/s41591-020-1020-3.

- Leslie, D. 2019. Raging robots, hapless humans: The AI dystopia. Nature 574:32–33. Available at: https://media.nature.com/original/magazine-assets/d41586-019-02939-0/d41586-019-02939-0.pdf (accessed September 2, 2021).

- Chen, M., and M. Decary. 2020. Artificial intelligence in healthcare: An essential guide for health leaders. Healthcare Management Forum 33(1):10–18. https://doi.org/10.1177/0840470419873123.

- Davenport, T., and R. Kalakota. 2019. The potential for artificial intelligence in healthcare. Future Healthcare Journal 6(2):94–98. https://doi.org/10.7861/futurehosp.6-2-94.

- Fitzpatrick, K. K., A. Darcy, and M. Vierhile. 2017. Delivering cognitive behavior therapy to young adults with symptoms of depression and anxiety using a fully automated conversational agent (Woebot): A randomized controlled trial. JMIR Mental Health 4(2):e19. https://doi.org/10.2196/mental.7785.

- Fulmer, R., A. Joerin, B. Gentile, L. Lakerink, and M. Rauws. 2018. Using psychological artificial intelligence (Tess) to relieve symptoms of depression and anxiety: Randomized controlled trial. JMIR Mental Health 5(4):e64. https://doi.org/10.2196/mental.9782.

- Masters, K. 2019. Artificial intelligence in medical education. Medical Teacher 41(9):976–980. https://doi.org/10.1080/0142159X.2019.1595557.

- Wartman, S. A., and C. D. Combs. 2018. Medical education must move from the information age to the age of artificial intelligence. Academic Medicine 93(8):1107–1109. https://doi.org/10.1097/ACM.0000000000002044.

- Wartman, S. A., and C. D. Combs. 2019. Reimagining medical education in the age of AI. AMA Journal of Ethics 21(2). Available at: https://journalofethics.ama-assn.org/article/reimagining-medical-education-age-ai/2019-02 (accessed September 2, 2021).

- Popenici, S. A. D., and S. Kerr. 2017. Exploring the impact of artificial intelligence on teaching and learning in higher education. Research and Practice in Technology Enhanced Learning 12(1):22. https://doi.org/10.1186/s41039-017-0062-8.

- Daugherty, P. R., H. J. Wilson, and R. Chowdhury. 2018. Using artificial intelligence to promote diversity. MIT Sloan Management Review. Available at: https://sloanreview.mit.edu/article/using-artificialintelligence-to-promote-diversity (accessed September 2, 2021).

- dos Santos, P., D. Giese, S. Brodehl, S. H. Chon, W. Staab, R. Kleinert, D. Maintz, and B. Baeßler. 2019. Medical students’ attitude towards artificial intelligence: A multicentre survey. European Radiology 29(4):1640–1646. https://doi.org/10.1007/s00330-018-5601-1.

- McCoy, L. G., S. Nagaraj, F. Morgado, V. Harish, S. Das, and L. A. Celi. 2020. What do medical students actually need to know about artificial intelligence? NPJ Digital Medicine 3:86. https://doi.org/10.1038/s41746-020-0294-7.

- CanMEDS. 2015. CanMEDS Framework: Better Standards, Better Physicians, Better Care. Royal College of Physicians and Surgeons of Canada. Available at: https://www.royalcollege.ca/rcsite/canmeds/canmeds-framework-e (accessed September 2, 2021).

- Verghese, A., N. H. Shah, and R. A. Harrington. 2018. What this computer needs is a physician: humanism and artificial intelligence. JAMA 319(1):19–20. https://doi.org/10.1001/jama.2017.19198.

- Johnston, S. C. 2018. Anticipating and training the physician of the future: The importance of caring in an age of artificial intelligence. Academic Medicine 93(8):1105–1106. https://doi.org/10.1097/ACM.0000000000002175.

- Association of American Medical Colleges. n.d. Content Documentation in Required Courses and Elective Courses. Available at: https://www.aamc.org/data-reports/curriculum-reports/interactive-data/content-documentation-required-courses-and-elective-courses (accessed September 2, 2021).

- IOM (Institute of Medicine). 2000. To Err Is Human: Building a Safer Health System. Washington, DC: The National Academies Press. https://doi.org/10.17226/9728.

- Mezzio, D. J., V. B. Nguyen, A. Kiselica, and K. O’Day. 2018. Evaluating the presence of cognitive biases in health care decision making: A survey of U.S. formulary decision makers. Journal of Managed Care & Specialty Pharmacy 24(11):1173–1183. https://doi.org/10.18553/jmcp.2018.24.11.1173.

- Paranjape, K., M. Schinkel, R. N. Panday, J. Car, and P. Nanayakkara. 2019. Introducing artificial intelligence training in medical education. JMIR Medical Education 5(2):e16048. https://doi.org/10.2196/16048.

- Vayena, E., A. Blasimme, and I. G. Cohen. 2018. Machine learning in medicine: Addressing ethical challenges. PLOS Medicine 15(11):e1002689. https://doi.org/10.1371/journal.pmed.1002689.

- Eicher, B., L. Polepeddi, and A. Goel. 2018. Jill Watson Doesn’t Care If You’re Pregnant: Grounding AI Ethics in Empirical Studies. In Proceedings of the 2018 AAAI/ACM Conference on AI, Ethics, and Society. New Orleans, LA: Association for Computing Machinery. pp. 88–94.

- Baron, T., R. I. Grossman, S. B. Abramson, M. V. Pusic, R. Rivera, M. M. Triola, and I. Yanai. 2020. Signatures of medical student applicants and academic success. PLoS One 15(1):e0227108. https://doi.org/10.1371/journal.pone.0227108.

- Ishizue, R., K. Sakamoto, H. Washizaki, and Y. Fukazawa. 2018. Student placement and skill ranking predictors for programming classes using class attitude, psychological scales, and code metrics. Research and Practice in Technology Enhanced Learning 13(1):7. https://doi.org/10.1186/s41039-018-0075-y.

- Payne, V. L., O. Medvedeva, E. Legowski, M. Castine, E. Tseytlin, D. Jukic, and R. S. Crowley. 2009. Effect of a limited-enforcement intelligent tutoring system in dermatopathology on student errors, goals and solution paths. Artificial Intelligence in Medicine 47(3):175–197. https://doi.org/10.1016/j.artmed.2009.07.002.

- Wolfe, C. R., V. F. Reyna, C. L. Widmer, E. M. Cedillos-Whynott, P. G. Brust-Renck, A. M. Weil, and X. Hu. 2016. Understanding genetic breast cancer risk: Processing loci of the BRCA gist intelligent tutoring system. Learning and Individual Differences 49:178–189. https://doi.org/10.1016/j.lindif.2016.06.009.

- Chan, K. S., and N. Zary. 2019. Applications and challenges of implementing artificial intelligence in medical education: Integrative review. JMIR Medical Education 5(1):e13930. https://doi.org/10.2196/13930.

- Hussain, M., W. Zhu, W. Zhang, and S. R. Raza Abidi. 2018. Student engagement predictions in an e-learning system and their impact on student course assessment scores. Computational Intelligence and Neuroscience 2018:6347186. https://doi.org/10.1155/2018/6347186.

- Spickard, A., T. Ahmed, K. Lomis, K. Johnson, and B. Miller. 2016. Changing medical school IT to support medical education transformation. Teaching and Learning in Medicine 28(1):80–87. https://doi.org/10.1080/10401334.2015.1107488.

- Mejicano, G. C., and T. N. Bumsted. 2018. Describing the journey and lessons learned implementing a competency-based, time-variable undergraduate medical education curriculum. Academic Medicine S42–S48. https://doi.org/10.1097/ACM.0000000000002068.

- Lomis, K. D., R. G. Russell, M. A. Davidson, A. E. Fleming, C. C. Pettepher, W. B. Cutrer, G. M. Fleming, and B. M. Miller. 2017. Competency milestones for medical students: Design, implementation, and analysis at one medical school. Medical Teacher 39(5):494–504. https://doi.org/10.1080/0142159x.2017.1299924.

- American Medical Association House of Delegates. 2019. Lack of Support for Maintenance of Certification. Available at: https://www.ama-assn.org/system/files/2019-05/a19-305.pdf (accessed September 2, 2021).

- Gambini, B. 2020. UB receives $800,000 NSF/Amazon grant to improve AI fairness in foster care. Available at: http://www.buffalo.edu/news/releases/2020/02/001.html (accessed September 2, 2021).

- amplifire. 2020. Home Page. Available at: https://amplifire.com (accessed September 2, 2021).

- Ćosić, K., S. Popović, M. Šarlija, I. Kesedžić, and T. Jovanovic. 2020. Artificial intelligence in prediction of mental health disorders induced by the COVID-19 pandemic among health care workers. Croatian Medical Journal 61(3):279–288. https://doi.org/10.3325/cmj.2020.61.279.

- Coentre, R., and C. Góis. 2018. Suicidal ideation in medical students: Recent insights. Advances in Medical Education and Practice 9:873–880. https://doi.org/10.2147/AMEP.S162626.

- Witte, T. K., E. G. Spitzer, N. Edwards, K. A. Fowler, and R. J. Nett. 2019. Suicides and deaths of undetermined intent among veterinary professionals from 2003 through 2014. Journal of the American Veterinary Medical Association 255(5):595–608. https://doi.org/10.2460/javma.255.5.595.