Advancing Artificial Intelligence in Health Settings Outside the Hospital and Clinic

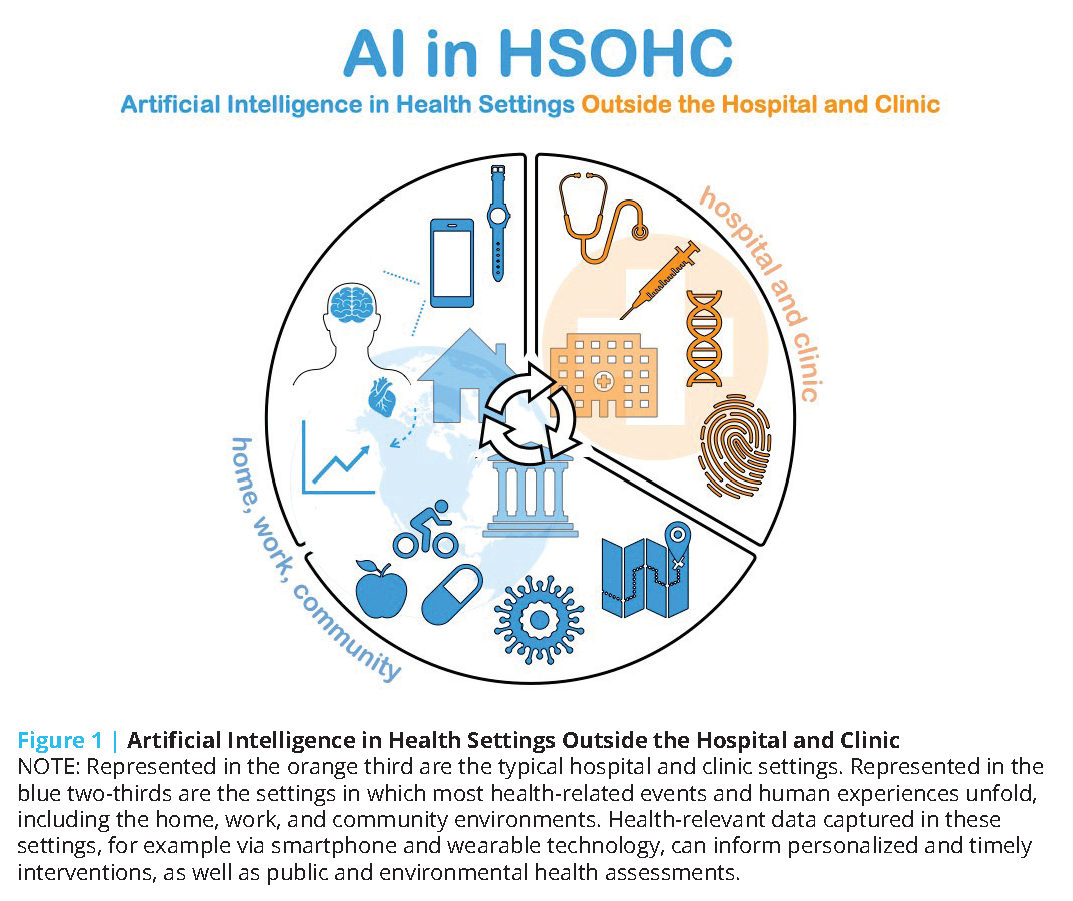

The health care ecosystem is witnessing a surge of artificial intelligence (AI)-driven technologies and products that can potentially augment care delivery outside of hospital and clinic settings. These tools can be used to conduct remote monitoring, support telehealth visits, or target high-risk populations for more intensive health care interventions. Because patients spend most of their time outside of a hospital or a provider’s office, these tools can offer invaluable benefits: giving patients convenient access to their provider teams, helping providers understand their patients’ daily habits, extending care access to underserved communities, and delivering personalized, real-time care in the patient’s home environment. More importantly, by expanding care to novel settings (e.g., home, office), these technologies could empower patients and caregivers, as most of these tools aim to help patients adapt their own behaviors or to facilitate bidirectional communication between patients and clinicians for more personalized care. The authors of this manuscript refer to such environments as “health settings outside the hospital and clinic,” abbreviated hereafter as HSOHC (pronounced “h-sock”) (see Figure 1). In some instances, the capabilities of these tools are proving extremely timely for continuing care delivery amid the disruptions posed by the COVID-19 pandemic.

While a number of AI applications for care delivery outside of the hospital and clinic setting, in medical specialties ranging from cardiology to psychiatry, are either currently available or in development, their reliability and true utility in improving patient outcomes are highly variable. In addition, fundamental logistical issues must be overcome before AI technologies can be implemented effectively, including product scalability, inter-system data standardization and integration, patient and provider usability and adoption, and insurance reform. Broader adoption of AI in health care and long-term data collection must also contend with urgent ethical and equity challenges, including patient privacy, exacerbation of existing inequities and bias, and fair access, particularly in the context of the U.S.’s fragmented mix of private and public health insurance programs.

Introduction and Scope

To address the U.S. health care system’s deep-seated financial and quality issues [1], several key stakeholders, including health systems, retail businesses, and technology firms, are taking steps to transform the current landscape of health care delivery. Notable among these efforts is the expansion of health care services outside the hospital and clinic settings [2,3]. These novel settings, or HSOHC, and modes of care delivery include telehealth, retail clinics, and home and office environments. Care delivered in these environments often incorporates advanced technological applications such as wearable technology (e.g., smartwatches), remote monitoring tools, and virtual assistants.

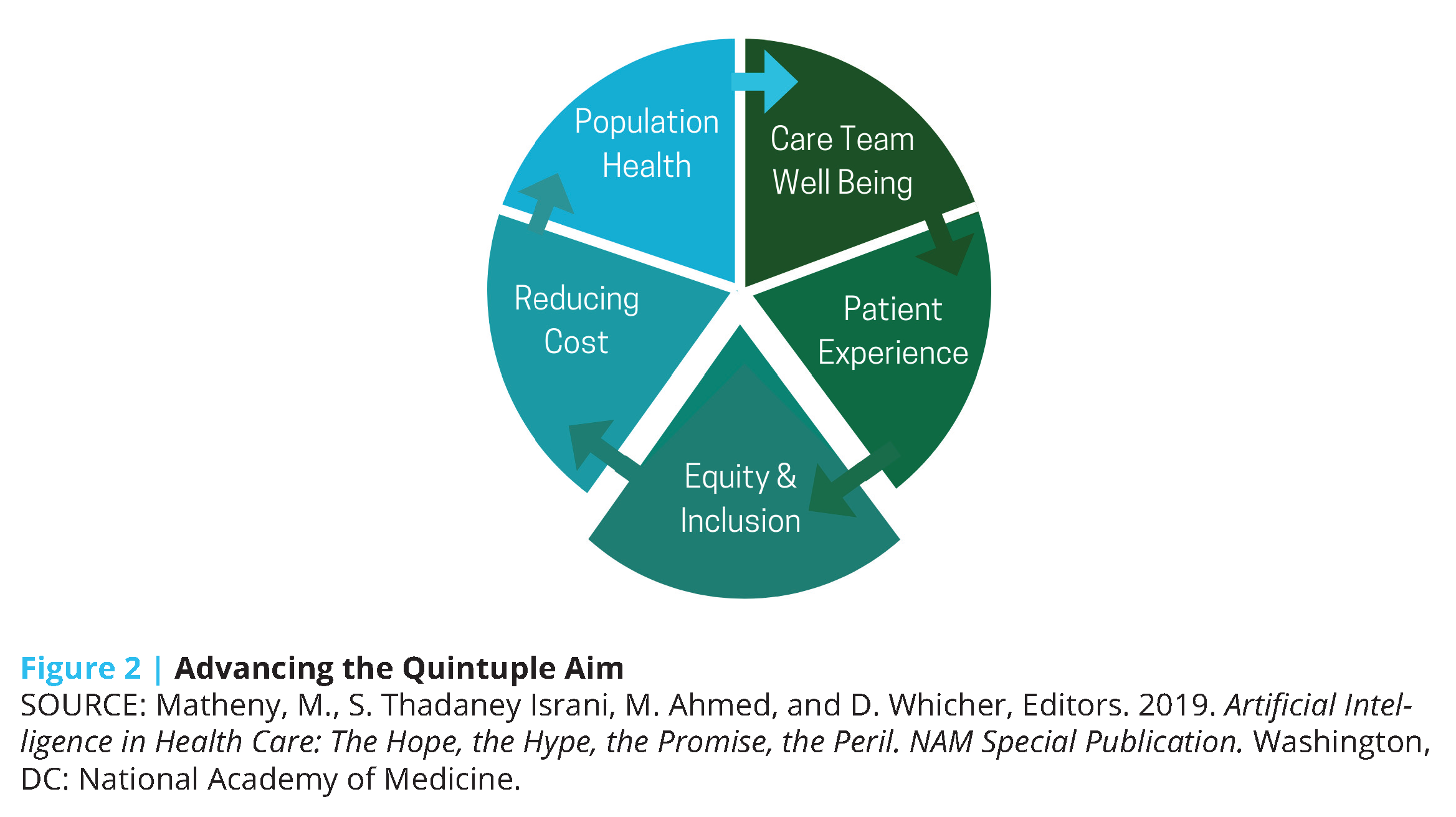

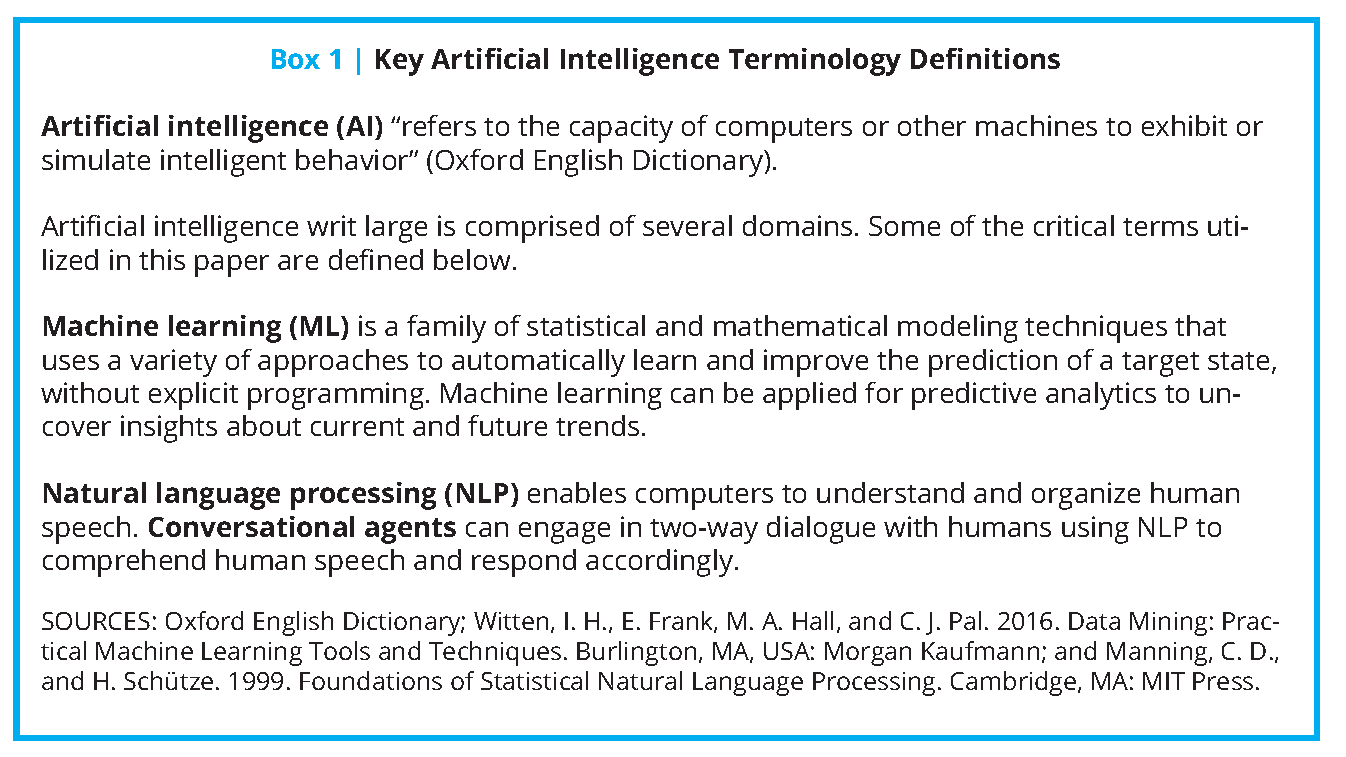

The growing adoption of these technologies in the past decade [4] presents an opportunity for a paradigm shift in U.S. health care toward more precise, economical, integrated, and equitable care delivery. Coupled with advances in AI, the potential impact of such technologies expands considerably (see Box 1 for key definitions). Machine learning (ML), a subdomain of AI, can take advantage of continuous data on activity patterns, peripheral physiology, and ecological momentary assessments of mood and emotion (all gathered in home, school, community, and office settings) to predict risk for future health events and behavioral tendencies, and ultimately to suggest personalized lifestyle modifications and treatment options. The increasing affordability of remote monitoring devices, decreased dependence on brick-and-mortar health care infrastructure, and real-time feedback mechanisms of these tools position AI as an indispensable factor in achieving the Quintuple Aim of health care: better patient outcomes, better population health, lower costs, increased clinician well-being, and prioritized health equity and inclusiveness [5] (see Figure 2).

These tools, which use ML and conversational agents (another application of AI), are also particularly well suited to continuing care during the COVID-19 pandemic. In fact, the spread of COVID-19 has catalyzed the development of many digital health and AI-related tools to augment personal and population health in the U.S. and in many other parts of the world.


In this discussion paper, the authors outline and examine the opportunities AI presents to transform health care in new and evolving arenas of care, as well as the significant challenges surrounding the sustainable application and equitable development and deployment that must be overcome to successfully incorporate these novel tools into current infrastructures. The discussion paper concludes by proposing steps for institutional, governmental, and policy changes that may facilitate broader adoption and equitable distribution of AI-driven health care technologies and an integrated vision for a home health model.

Surveying Key Examples of AI Outside the Hospital and Clinic Setting: Evaluating Current and Emerging Technologies

Implementing AI on the Individual Level for Better Personal Health

Telehealth and AI

Telehealth has been a long-standing element of health care delivery in the U.S. [6], but only with COVID-19 has it come to be considered vital to sustaining the connection between patients and providers. AI can materially enhance these electronic interactions by reducing the response time for medical attention and by alleviating provider caseload and administrative burden. For example, AI triaging for telehealth uses conversational agents embedded in a virtual or phone visit to stratify patients by acuity level and direct them to the most appropriate care setting [7]. Because they reduce the risk of patient exposure, AI triaging platforms have been especially advantageous during COVID-19, and a number of health systems, retail clinics, and payers have implemented them to continue delivering care services [8] and to identify possible COVID-19 cases. At the federal level, the Centers for Disease Control and Prevention (CDC) has launched a “Coronavirus Self-Checker” based on Microsoft’s Bot technology to guide patients to care using CDC guidelines [9,10]. Outside of the urgency of the COVID-19 pandemic, natural language processing has also been used to transcribe provider-patient conversations during phone visits; the transcripts can help providers write care plans after the call concludes and can serve patients as a reference for what was discussed [11].

These integrations are the “tip of the iceberg” of the possibilities of AI in the telehealth domain. Given the escalating pressure amid the COVID-19 pandemic to continue regulatory and financial support for telehealth [12], one could envision a burgeoning variety of AI couplings with telehealth. The future capacity of AI might include using video- and audio-capture tools with facial or tonal interpretation for stress detection in the home or office, or the incorporation of skin lesion detection apps into real-time video for dermatological visits.

Using AI to Augment Primary Care Outside of the Clinical Encounter

In the last six years, there has been a significant increase in the use of consumer applications for patient self-management of chronic diseases, and to a lesser degree for patient-provider shared management through home health care delivery and remote monitoring [13].

In 2018, diabetes care saw a landmark approval by the U.S. Food and Drug Administration (FDA): IDx-DR, an ML-based algorithm that detects diabetic retinopathy, became the first AI-driven medical device that does not require physician interpretation of its results [14,15]. Outside the hospital, several AI applications have been developed for diabetes self-management, including one that improved HgbA1c by using AI to analyze photos of patients’ meals and assess calories and nutrients [16], and a fully automated coaching program for people with prediabetes that decreased weight and HgbA1c in a pilot trial [17]. For insulin management in type 1 diabetes, multiple studies have found that using self-adaptive learning algorithms in conjunction with continuous glucose monitors and insulin pumps decreases rates of hypoglycemia and increases the share of patients reaching their target glucose range [18]. In March 2020, the first such tool was officially licensed and launched publicly in the United Kingdom [19]. For type 2 diabetes, a promising example is WellDoc, an FDA-approved diabetes management system that gives individualized feedback and recommendations on blood sugar management and has been shown to reduce HgbA1c levels significantly [20,21].

Other consumer tools, some with approvals by regulatory agencies, help monitor and support blood pressure control and vital sign checks. One app, Binah.ai, features a validated tool that can scan a person’s face in good lighting conditions and report heart rate (HR), oxygen saturation, and respiration with high levels of accuracy [22,23]. In addition, an increasing number of AI virtual health and lifestyle coaches have been developed for weight management and smoking cessation.

Remote Technology Monitoring for Promoting Cardiac Health

Wearable and remote monitoring technology can help usher in the next era of health care data innovation by capturing physiologic data in HSOHC [24]. In the current clinic-based paradigm, data are captured in isolated snapshots, often at infrequent intervals. For example, blood pressure may be measured and recorded only during clinic visits once or twice a year, which does not provide an accurate or longitudinal picture of an individual’s blood pressure fluctuations.

The current “Internet of Things” era has changed the landscape for wearable technology. Wearables can capture data from any location and transmit it back to a hospital or clinic, moving a significant piece of the health care enterprise to the places where patients spend the bulk of their time. These measurements can then be coupled with ML algorithms and a user interface to turn the data into relevant information about an individual’s health-related behaviors and physiological conditions.

Wearable technology has been applied to many health care domains, ranging from cardiology to mental health. Prominent examples of technologies are those that incorporate cardiac monitoring, such as HR and rhythm sensors, including the Apple Watch, iRhythm, and Huawei devices. These devices are quite popular and, in the case of the Apple Watch, have received FDA approval as a medical device to detect and alert individuals of an irregular heart rhythm, a condition called atrial fibrillation [25]. Atrial fibrillation is associated with reduced quality of life and can result in the formation of blood clots in the upper heart chambers, ultimately leading to increased risk of stroke. Theoretically, enabling diagnosis of this condition outside of the clinic could bring patients to medical attention sooner and, in turn, considerably reduce the risk of stroke.

However, whether some of these devices improve patient outcomes (quality of life and longevity) by detecting abnormal rhythms remains unproven, and there have been concerns about the accuracy of the ML algorithms. For example, some Huawei and Apple Watch studies suggest that the devices work well in sinus rhythm (the heart beating normally at rest) but underestimate HR at higher rates, whether in atrial fibrillation or in elevated sinus rhythm (e.g., during exercise) [26,27].
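Accuracy concerns like these are commonly quantified with a Bland-Altman comparison of paired device and reference readings, reported separately for low and high heart rates. The Python sketch below is a minimal illustration under stated assumptions: the function names, the 100 bpm split point, and any sample readings are hypothetical, and this is not the analysis method of the cited studies.

```python
from statistics import mean, stdev


def bland_altman(device_hr, reference_hr):
    """Return (bias, lower_loa, upper_loa) for paired heart-rate readings.

    bias is the mean of (device - reference); the 95% limits of agreement
    are bias +/- 1.96 standard deviations of those differences.
    """
    if len(device_hr) != len(reference_hr) or len(device_hr) < 2:
        raise ValueError("need at least two paired readings")
    diffs = [d - r for d, r in zip(device_hr, reference_hr)]
    bias = mean(diffs)
    spread = 1.96 * stdev(diffs)
    return bias, bias - spread, bias + spread


def bias_by_band(pairs, threshold=100):
    """Split (device, reference) pairs at a hypothetical reference-HR
    threshold and return the mean bias below and at/above it."""
    bands = {
        "below": [(d, r) for d, r in pairs if r < threshold],
        "at_or_above": [(d, r) for d, r in pairs if r >= threshold],
    }
    # Only report bands that actually contain readings.
    return {name: mean(d - r for d, r in group) for name, group in bands.items() if group}
```

A negative bias that grows in the higher band would correspond to the underestimation pattern described above.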

Hypertension, or high blood pressure, is another example of a highly prevalent, actionable condition that merits surveillance. Hypertension affects approximately 45 percent of Americans and is associated with heart failure, stroke, acute myocardial infarction, and death [28]. Hypertension control rates are worsening in the U.S., which will most likely increase the prevalence of cardiovascular disease downstream [29]. Unlike smartwatch devices, blood pressure cuffs have been commercially available for decades. Today, several blood pressure cuff manufacturers, including Omron, Withings, and others, offer cuffs that collect blood pressure measurements, along with data such as HR, and transmit them to health care providers [30,31]. Collecting longitudinal, densely sampled HR and blood pressure data in these ways allows ML to detect nuanced patterns that predict increased risk of cardiovascular events such as stroke or heart failure and, in turn, to triage patients for medication management or more intensive treatment. Ultimately, such prognostic capabilities could be embedded into the device itself. However, establishing the accuracy of these measurements relative to traditional sphygmomanometry is challenging because scientific assessment standards are lacking [32].
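As a rough sketch of the first step in such a pipeline, the Python below condenses a window of home cuff readings into features (means, variability, share of elevated readings) that a trained risk model could consume. The 130/80 mmHg limits, the 50 percent cutoff, and every name are illustrative assumptions, and the simple threshold rule stands in for, rather than implements, an ML prediction model.

```python
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class Reading:
    day: int           # days since monitoring started
    systolic: float    # mmHg
    diastolic: float   # mmHg
    heart_rate: float  # beats per minute


def summarize(readings, sys_limit=130.0, dia_limit=80.0):
    """Collapse a window of home readings into simple features."""
    if not readings:
        raise ValueError("no readings")
    sys_vals = [r.systolic for r in readings]
    dia_vals = [r.diastolic for r in readings]
    # A reading counts as elevated if either pressure meets its (assumed) limit.
    elevated = sum(1 for r in readings if r.systolic >= sys_limit or r.diastolic >= dia_limit)
    return {
        "mean_systolic": mean(sys_vals),
        "mean_diastolic": mean(dia_vals),
        "systolic_sd": pstdev(sys_vals),  # crude variability measure
        "mean_heart_rate": mean(r.heart_rate for r in readings),
        "fraction_elevated": elevated / len(readings),
    }


def needs_review(features, fraction_cutoff=0.5):
    """Hypothetical triage rule: flag when at least half of readings are elevated."""
    return features["fraction_elevated"] >= fraction_cutoff
```

In a real deployment, a feature dictionary like this would be one input to a validated model rather than the basis for a fixed rule.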

In addition to established measurement standards, remote blood pressure monitoring devices should be coupled with a system that delivers interventions based on the data. One such option is ML-powered smartphone apps paired with remote monitoring devices. Such apps should effectively provide behavioral therapy for hypertensive patients [33], assess adherence to interventions, and promote patient self-awareness [34]. In terms of patient outcomes, some studies suggest that home monitoring, when coupled with pharmacist-led medication management and lifestyle coaching, is associated with improved blood pressure control, while other studies are neutral [35]. Historically, the traditional health care delivery system has been unsuccessful at controlling blood pressure, and moving management into the home setting shows promise [36].

Remote Sensing and Mobile Health (mHealth) for Behavioral and Psychiatric Care

The pursuit of precision medicine, “delivering the right treatments, at the right time, every time to the right person” [37,38], has been a long-standing goal in medicine. For psychiatry, clinical psychology, and related disciplines in particular, more precise timing of interventions presents an important opportunity for mental health care. In major depressive disorder, episodes of depression alternate with periods of relatively improved mood. In bipolar disorder, patients cycle between manic and depressive episodes. In substance use disorders, patients may alternate between periods of use and abstinence. At an even more granular level, a return to substance use may be triggered at some times by discrete stressors and at other times by the presence of substances or of peers using them. Poor sleep and other issues that affect self-regulation may exacerbate this risk on some days but not others. In each of these examples, different interventions are better suited to different moments in time.

The synthesis of AI with “personal sensing” provides a powerful framework to develop, evaluate, and eventually implement more precise mental health interventions that can be matched to characteristics of the patient, their context, and the specific moment in time [39]. Today, sensors relevant to medical care are ubiquitous. Smartphones log personal communications by voice calls and text messages. Facebook posts, Instagram photos, tweets, and other social media activities are also recorded. Smartphone-embedded sensors know our location (via GPS) and activity level (via accelerometer), and can detect other people in our immediate environment (via Bluetooth). Smartwatches that can monitor our physiology and many other raw signals are increasing in popularity.

Personal sensing involves collecting these many raw data signals and combining them with ML algorithms to predict thoughts, behaviors, and emotions, as well as clinical states and disorders. This synthesis of ML and personal sensing can revolutionize the delivery of mental health care beyond the one-size-fits-all diagnoses and treatments to personalized interventions based on vast amounts of data collected not only in health care settings but in situ.

To be clear, the field of personal sensing (or digital phenotyping) is nascent and rapidly evolving [39]. However, emerging evidence already demonstrates the potential of its signals to characterize relevant mental health states at any moment in time. For example, GPS, cellular communication logs, and patterns of social media activity have all been used to classify psychiatric disorders and prognosis over time [40,41]. Natural language processing of what people write on social media can also be used to sense cognitive or motivational states (depressed mood, hopelessness, suicidal ideation) that may be more difficult to monitor with nonverbal sensors [42]. Moreover, many of these promising signals are collected passively by people’s smartphones, so they can be measured with little burden on patients. This allows the long-term, densely sampled, longitudinal monitoring that will be necessary to provide precisely timed interventions (e.g., just-in-time adaptive interventions [43]) for psychiatric disorders, which are often chronic and/or cyclical.
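To make personal sensing concrete, the sketch below derives two GPS features of the kind used in digital phenotyping research: location variance (here, the log of the summed variances of latitude and longitude) and the fraction of samples taken near home. The feature definitions, the 100-meter home radius, and the function names are assumptions for illustration, not the methods of the cited studies.

```python
import math
from statistics import pvariance


def haversine_km(a, b):
    """Great-circle distance in km between two (lat, lon) points in degrees."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    dlat, dlon = lat2 - lat1, lon2 - lon1
    h = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * 6371.0 * math.asin(math.sqrt(h))  # 6371 km: mean Earth radius


def gps_features(samples, home, home_radius_km=0.1):
    """samples: (lat, lon) fixes taken at regular intervals; home: (lat, lon)."""
    lats = [s[0] for s in samples]
    lons = [s[1] for s in samples]
    total_var = pvariance(lats) + pvariance(lons)
    # Log transform spreads out small variances; a stationary person gives -inf.
    location_variance = math.log(total_var) if total_var > 0 else float("-inf")
    at_home = sum(1 for s in samples if haversine_km(s, home) <= home_radius_km)
    return {"location_variance": location_variance, "home_fraction": at_home / len(samples)}
```

Features like these would then be inputs to an ML model, which is where the clinical interpretation, and the need for validation, actually lies.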

mHealth apps are also well positioned to deliver AI-assisted precision mental health care. Mobile apps without AI have already been developed and deployed for post-traumatic stress disorder, depression, substance use disorders, and suicidal ideation, among others [44,45], many of them pioneered by the U.S. Department of Veterans Affairs. These applications can screen for psychiatric disorders, track changes over time, and deliver evidence-based treatment or post-treatment support. They often include a variety of tools and services for patients, including bibliotherapy, cognitive-behavioral interventions, peer-to-peer or other social support, guided relaxation and mindfulness meditation, and appointment and medication reminders. Studies have also shown that patients are more expressive and more willing to report mental health symptoms to virtual human interviewers [46,47]. Moreover, because smartphones are nearly always on and available, mobile mental health apps can provide immediate intervention while a patient is waiting for a higher level of care. Active efforts are underway to augment these systems with personal sensing AI, improving their ability to detect psychiatric risk in the moment and to recommend specific interventions from among their available tools and services based on the characteristics of the patient and the moment in time [43,48].
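A just-in-time adaptive intervention is typically specified as decision points, tailoring variables, and decision rules. The Python sketch below shows one hypothetical decision rule that turns a sensed risk estimate and the patient's availability into a choice among app tools. The thresholds, the 60-minute minimum gap between prompts, and the intervention labels are invented for illustration and carry no clinical validation.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Moment:
    predicted_risk: float           # 0..1, assumed output of an upstream sensing model
    available: bool                 # e.g., phone in use and patient not driving
    minutes_since_last_prompt: int  # time since the app last intervened


def choose_intervention(m: Moment, risk_high=0.7, risk_moderate=0.4, min_gap=60) -> Optional[str]:
    """Hypothetical decision rule evaluated at each decision point."""
    # Limit burden: never prompt when unavailable or too soon after the last prompt.
    if not m.available or m.minutes_since_last_prompt < min_gap:
        return None
    if m.predicted_risk >= risk_high:
        return "notify_care_team"
    if m.predicted_risk >= risk_moderate:
        return "guided_relaxation"
    return None  # low risk: no intervention at this decision point
```

Keeping the rule separate from the risk model makes it easier to test alternative rules, for example in a micro-randomized trial, without retraining the sensing model.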

Leveraging AI and Patient-Level Data from Remote Monitoring Tools to Gather Population-Level Insight

Integrating AI into Population Health Strategies

Because population health takes a holistic approach to caring for the health of a large group of patients throughout their lives and across all their activities, health management at this level necessarily extends far beyond the bounds of a traditional medical encounter and into patients’ daily lives. A variety of integrated care delivery mechanisms have been used to improve population-level wellness and health, in many cases through novel partnerships and collaborations [49]. With the ongoing development of increasingly refined AI applications for individual use, next-generation population health strategies include analysis of aggregate patient-level data to identify broader population health trends and habits. Furthermore, these large-scale datasets set the stage for population-level AI algorithms for epidemiological prediction, fueling a synergistic and powerful feedback loop of personal and population health innovation.

In the U.S., much of population health is managed and prioritized by insurance companies, employers, and disease management companies, and increasingly by accountable care organizations and risk-bearing health care delivery organizations, whose primary aim is to decrease wasteful spending and improve health care quality by proactively engaging with and intervening for patients. The question becomes how to identify these patients precisely, at the right time in their care journey: not too late (after the health care decision has been made and costs are no longer avoidable) and not too early (when outreach wastes administrative resources). This need has given rise to the field of predictive analytics, which increasingly leverages AI to improve the effectiveness and efficiency of these programs. In the health care industry, these analytics typically rely on medical and pharmacy claims data but are increasingly integrating a more diverse set of data, including health risk assessment data, electronic health record data, social determinants of health data, and, more recently, data from connected health devices and transcribed call and messaging data between patients and these managed care organizations.
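The timing problem can be sketched as a constrained selection: keep only members whose estimated decision point falls inside an outreach window, then fill limited outreach capacity in order of predicted risk. In this Python sketch, the risk scores are assumed to come from an upstream predictive model, and the field names and the 7-to-60-day window are hypothetical parameters.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Member:
    member_id: str
    risk: float               # assumed model output: probability of a costly event
    days_until_decision: int  # assumed estimate of days until the care decision point


def select_for_outreach(members: List[Member], capacity: int, earliest=7, latest=60) -> List[str]:
    """Return up to `capacity` member IDs, highest risk first, whose decision
    point is neither too close (too late to act) nor too far (too early)."""
    eligible = [m for m in members if earliest <= m.days_until_decision <= latest]
    eligible.sort(key=lambda m: m.risk, reverse=True)
    return [m.member_id for m in eligible[:capacity]]
```

Note that the highest-risk member can be skipped entirely if the estimated decision point falls outside the window; that trade-off is the one the paragraph above describes.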

There has been tremendous interest and investment in deploying sensors, monitors, and automated tracking tools that, when combined with AI, can be used for population health management [50]. These tools and systems have been applied with varying degrees of sophistication to a wide variety of acute and chronic diseases, such as for diabetes and hypertension (described in previous sections), monitoring patients in rehabilitation [51], ongoing cardiovascular care [52], mental health care, falls [53], or dementia and elder care [54]. This category of potential applications distinguishes itself from self-management-related AI through the primary users of the systems. In this domain, the users are health care professionals seeking to manage population health through information synthesis and recommendations.

As at the individual level, these algorithms remotely and passively detect physical and physiologic indicators of health and pathology, integrate them with patient-level environmental or health care system data, and generate insights, recommendations, and risk estimates for many conditions. The challenge in this domain is melding disparate data (some from sensors, some from image tracking, some from voice and audio analysis, and some from inertial or positional data) with more traditional medical data to improve outcomes and care.

Improving Medication Adherence with AI Tools

Another key challenge that population health faces is poor medication adherence. In the treatment of some diseases, up to 40 percent of patients misunderstand, forget, or ignore health care advice [55]. Adherence to medical therapy depends on a complex interaction among patient preferences and autonomy; health communication and literacy; trust between patients/caregivers and the clinical enterprise; social determinants of health; cultural alignment between patients, caregivers, and health care professionals; home environment; management of polypharmacy; and misunderstandings about the disease being treated [56]. Adherence challenges have been documented in treatments for chronic obstructive pulmonary disease [57], asthma [58], diabetes [59], and heart failure [60].

There is tremendous opportunity for AI to identify and mitigate patient adherence challenges. One example of how AI might improve adherence comes from direct oral anticoagulants, for which an AI system embedded in smartphones was used to directly observe patients taking their medication. The system combined imaging capabilities, such as facial recognition and medication identification, with analytics to identify patients at high risk or to confirm delays in administration. Patients identified as high risk were routed to a study team for in-person outreach as needed [61]. In a 12-week randomized controlled trial, the AI arm had 100 percent adherence and the control arm had 50 percent adherence, as assessed by plasma drug concentration levels. There are other notable examples in medication adherence, such as in tuberculosis [62] and schizophrenia treatment [63].
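The matching logic behind confirming delays in administration can be illustrated with a small Python sketch: each scheduled dose is paired with at most one visually confirmed dose time within a tolerance, and patients whose on-time rate falls below a cutoff are flagged for outreach. The two-hour tolerance, the 80 percent cutoff, and the function names are assumptions; the cited trial assessed adherence by plasma drug concentration, which this sketch does not model.

```python
from datetime import datetime, timedelta


def adherence_summary(scheduled, confirmed, tolerance=timedelta(hours=2)):
    """Return (on_time, missed_or_late) counts for lists of datetimes."""
    remaining = sorted(confirmed)
    on_time = 0
    for due in sorted(scheduled):
        match = next((t for t in remaining if abs(t - due) <= tolerance), None)
        if match is not None:
            remaining.remove(match)  # each confirmation can satisfy only one dose
            on_time += 1
    return on_time, len(scheduled) - on_time


def is_high_risk(scheduled, confirmed, min_rate=0.8, tolerance=timedelta(hours=2)):
    """Flag for outreach when the on-time rate falls below `min_rate`."""
    if not scheduled:
        return False
    on_time, _ = adherence_summary(scheduled, confirmed, tolerance)
    return on_time / len(scheduled) < min_rate


if __name__ == "__main__":
    due = [datetime(2024, 1, d, 8, 0) for d in range(1, 6)]
    seen = [datetime(2024, 1, 1, 8, 10), datetime(2024, 1, 2, 9, 30), datetime(2024, 1, 5, 7, 50)]
    print(adherence_summary(due, seen), is_high_risk(due, seen))
```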

AI Efforts in Public and Environmental Health

There is a strong need and opportunity for the use of AI technologies in public health, including information synthesis, outbreak detection, and responsible, appropriately governed, ethical, secure, and judicious syndromic surveillance. Public health has been incorporating and leveraging AI technologies for a number of years, and many countries have syndromic surveillance systems in place, such as RAMMIE in the U.K. [64]. Environmental health, a subdomain of public health, has benefited tremendously from applying ML techniques across a wide range of publicly available data sources. One example is the need to assess toxicity in silico for chemicals used in commercial products: over 140,000 mono-constituent chemicals are in use, and fewer than 10 percent of them have undergone safety studies, not counting the vast number of chemical admixtures and metabolites [65,66,67]. Environmental exposures are important determinants of overall health alongside genetic and chronic disease factors, and AI will be critical to analyzing these types of data effectively.

Another key area is estimating the history and magnitude of patients’ exposures over time, which requires diverse data, such as location history and environmental conditions in areas of exposure, followed by evaluation and integration of those data into overall disease risk and clinical management strategies [68]. This also requires complex capabilities in geospatial analysis and transformation [69]. In addition, geography and location mapping to assess potential outbreaks and environmental exposures are important for air pollution modeling [70]. AI-driven air pollution modeling combines satellite data, fixed monitoring, and professional and personal mobile monitoring devices to conduct complex assessments [71]. However, sensors such as PurpleAir require individuals to pay for them and install them in their homes and communities [72], so access is limited to those with disposable income. There have also been novel applications in assessing and informing public health policy on neighborhood physical activity, greenspace access, and access to healthy food outlets and grocery stores [73].
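A common building block for exposure estimation is a time-weighted average: the concentration in each place a person visits, weighted by the time spent there. The Python sketch below assumes location history has already been reduced to (grid cell, hours) pairs and that a pollution model supplies a PM2.5 estimate for each cell; both inputs and the names used are hypothetical.

```python
def time_weighted_exposure(visits, concentration):
    """visits: list of (cell_id, hours spent in that cell).
    concentration: dict mapping cell_id to an average PM2.5 estimate (µg/m³).
    Returns the time-weighted mean concentration over all recorded hours."""
    total_hours = sum(h for _, h in visits)
    if total_hours == 0:
        raise ValueError("no time recorded")
    dose = sum(concentration[cell] * h for cell, h in visits)
    return dose / total_hours
```

For example, 14 hours in a cell at 8 µg/m³, 8 hours at 12 µg/m³, and 2 hours at 25 µg/m³ give (112 + 96 + 50) / 24 = 10.75 µg/m³.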

Combating COVID-19 with AI-Assisted Technologies

AI interpretation and human review of incoming data for syndromic surveillance provided early warning of the COVID-19 pandemic. The first early warning alert of a potential outbreak was issued on December 30, 2019, by the HealthMap system at Boston Children’s Hospital; four hours earlier, the Program for Monitoring Emerging Diseases (ProMED) had mobilized a team to start looking into the data, and it issued a more detailed report 30 minutes after the HealthMap alert [74]. BlueDot also issued an advisory to all its customers on December 31, 2019 [75]. These systems are interconnected and share data, using a complex combination of machine learning and natural language processing to analyze social media, news articles, government reports, airline travel patterns, and, in some cases, emergency room symptoms and reports [76,77,78]. Another set of ML algorithms consumes these processed data to make predictions about possible outbreaks [79].
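The alerting step in syndromic surveillance is often a statistical aberration detector applied to daily counts. The Python sketch below is modeled loosely on the CDC's EARS C1 method (comparing each day's count with the mean and standard deviation of the preceding seven days). It is not the algorithm used by HealthMap, ProMED, or BlueDot, and the threshold of 3 and the standard-deviation floor are assumptions.

```python
from statistics import mean, stdev


def c1_alerts(counts, baseline_days=7, threshold=3.0, min_sd=1.0):
    """Return indices of days whose count exceeds the mean of the preceding
    `baseline_days` days by more than `threshold` standard deviations."""
    alerts = []
    for day in range(baseline_days, len(counts)):
        window = counts[day - baseline_days:day]
        mu = mean(window)
        # Floor the SD so a perfectly flat baseline does not divide by zero.
        sd = max(stdev(window), min_sd)
        if (counts[day] - mu) / sd > threshold:
            alerts.append(day)
    return alerts
```

Detectors like this only flag anomalies; as the HealthMap and ProMED timeline illustrates, human review decides whether an alert reflects a real outbreak.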

In addition, wearable devices could serve an important role in the surveillance of high-prevalence conditions, for which COVID-19 provides an immediate and important application. Fever alone provides inadequate screening for COVID-19 infection [80], but combining temperature with HR, respiratory rate, and oxygen saturation (all of which can be captured via wearable devices) could aid in triage and diagnosis. Prior research on influenza, in which investigators found that Fitbit data from 47,249 users could reliably predict prevalence rates estimated by the CDC, supports the role of wearables in infectious disease surveillance [81]. Indeed, randomized trials to test this hypothesis in relation to COVID-19 are underway [82], while others are using wearables for COVID-19 tracking outside of the research enterprise [83]. Furthermore, many wearables can provide location data when linked to a smartphone, opening the door to geographic outbreak monitoring.
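One way wearable data can feed surveillance, in the spirit of the influenza work cited above, is to flag days when a user's resting heart rate rises well above their own recent baseline and then report the share of users flagged on a given day. In this Python sketch, the 28-day median baseline, the 5 bpm margin, and the function names are illustrative assumptions, not the published method.

```python
from statistics import median


def elevated_rhr_days(daily_rhr, baseline_days=28, delta_bpm=5.0):
    """Flag day indices where resting HR exceeds the median of the user's
    previous `baseline_days` days by more than `delta_bpm`."""
    flagged = []
    for day in range(baseline_days, len(daily_rhr)):
        baseline = median(daily_rhr[day - baseline_days:day])
        if daily_rhr[day] - baseline > delta_bpm:
            flagged.append(day)
    return flagged


def population_signal(users_rhr, day, **kwargs):
    """Fraction of users (each a list of daily resting HR) flagged on `day`."""
    flagged = sum(1 for series in users_rhr if day in elevated_rhr_days(series, **kwargs))
    return flagged / len(users_rhr)
```

Using each person's own baseline rather than a population norm reduces the effect of normal between-person differences in resting heart rate.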

Development and Integration of Health-Related AI Tools: Overarching Logistical Challenges and Considerations

AI development and integration, especially of those devices deployed in HSOHC, face several logistical challenges in the health care marketplace. The authors of this discussion paper focus on six major categories of challenges that have been carefully documented in the literature and in practice: data interoperability and standardization, data handling and privacy protection, systemic biases in AI algorithm development, insurance and health care payment reform, quality improvement and algorithm updates, and AI tool integration into provider workflows.

Data Interoperability and Standardization

Logistical challenges to technology development and integration with virtual care systems include those inherent to health care data collection, aggregation, analysis, and communication. In particular, AI-based programs must contend with data interoperability standards, which were created to ensure that data can be reliably transferred among scheduling, billing (including electronic health records), laboratory, registry, and insurer entities, as well as third-party health data administrators, and ultimately be actionable to end users. Common data interoperability standards for health care data (e.g., the Health Level Seven (HL7) standards [84] and HL7’s Fast Healthcare Interoperability Resources (FHIR) specification [85]) have helped to enhance communication among AI developer teams, data analysts, and engineers working on other health care platforms, such as electronic health records. Nevertheless, AI developers can spend considerable time extracting, transforming, and loading data into different formats to move data into and out of AI platforms and health care data systems.
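To show what a FHIR-formatted payload looks like, the Python sketch below builds a single home blood pressure reading as a FHIR R4 Observation using the LOINC codes for the blood pressure panel (85354-9), systolic pressure (8480-6), and diastolic pressure (8462-4), with UCUM units. The helper name and patient identifier are hypothetical, and the resource omits optional elements (device, performer, interpretation) that a production integration would likely need.

```python
import json

LOINC = "http://loinc.org"
UCUM = "http://unitsofmeasure.org"


def bp_observation(patient_id, systolic, diastolic, effective):
    """Build a FHIR R4-style Observation dict for one blood pressure reading."""
    def component(code, display, value):
        return {
            "code": {"coding": [{"system": LOINC, "code": code, "display": display}]},
            "valueQuantity": {"value": value, "unit": "mmHg", "system": UCUM, "code": "mm[Hg]"},
        }

    return {
        "resourceType": "Observation",
        "status": "final",
        "category": [{"coding": [{
            "system": "http://terminology.hl7.org/CodeSystem/observation-category",
            "code": "vital-signs",
        }]}],
        "code": {"coding": [{"system": LOINC, "code": "85354-9",
                             "display": "Blood pressure panel with all children optional"}]},
        "subject": {"reference": f"Patient/{patient_id}"},
        "effectiveDateTime": effective,
        "component": [
            component("8480-6", "Systolic blood pressure", systolic),
            component("8462-4", "Diastolic blood pressure", diastolic),
        ],
    }


if __name__ == "__main__":
    print(json.dumps(bp_observation("example", 128, 82, "2020-06-01T08:30:00Z"), indent=2))
```

Emitting a shared, standard structure like this is what lets a home cuff vendor, an AI platform, and an electronic health record exchange the same reading without custom translation for each pair.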

Often a major hurdle to AI development has been the personnel effort and time needed for data organization and cleaning, including the development of a strategy to address unclear data definitions and missing data [86]. The Observational Health Data Sciences and Informatics (or OHDSI, pronounced “Odyssey”) program involves an interdisciplinary collaboration to help address these issues for data analytics, and has introduced a common data model that many AI developers are now using to help translate and back-translate their health care data into a standard structure that aids communication with other health data management systems [87].
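The translation step into a common data model can be sketched as mapping a local source code to a standard concept and emitting a row shaped like the OMOP CDM MEASUREMENT table. In this Python sketch, the column names follow that table, but the concept IDs are placeholders rather than real OHDSI vocabulary entries. Unmapped codes fall back to concept 0, the OMOP convention for "no matching concept."

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical local-code -> standard-concept lookup. Real IDs must come from
# the OHDSI standardized vocabularies; these integers are placeholders.
CONCEPT_MAP = {"SBP": 1001, "DBP": 1002}
UNMAPPED = 0  # OMOP convention: concept_id 0 means "no matching concept"


@dataclass
class MeasurementRow:
    person_id: int
    measurement_concept_id: int
    measurement_date: date
    value_as_number: float
    measurement_source_value: str  # original local code, kept for traceability


def to_measurement(person_id, source_code, day, value):
    """Translate one local measurement into a MEASUREMENT-shaped row."""
    return MeasurementRow(
        person_id=person_id,
        measurement_concept_id=CONCEPT_MAP.get(source_code, UNMAPPED),
        measurement_date=day,
        value_as_number=value,
        measurement_source_value=source_code,
    )
```

Keeping the source value alongside the standard concept is what makes back-translation to the originating system possible.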

Data Handling and Privacy Protection

AI developer teams may also be subject to state and federal privacy regulations that affect sharing, use, and access to data for use in training and operating AI health care tools. As the major federal medical privacy statute, the Health Insurance Portability and Accountability Act (HIPAA) applies to “HIPAA-covered entities.” These include health care providers such as clinics and hospitals, health care payers, and health care clearinghouses that process billing information. HIPAA-covered entities are subject to the HIPAA Privacy Rule, the federal medical and genetic privacy regulation promulgated pursuant to HIPAA. However, many entities that handle health-related information are not HIPAA-covered. Such entities can include many medical device and wearable/home monitoring manufacturers and medical software developers, unless they enter into “Business Associate Agreements” with organizations that do qualify as HIPAA-covered entities. Overall, because HIPAA is targeted to traditional health care providers, it often does not cover health AI companies that do not intersect or work closely with more traditional organizations.

Because the HIPAA Privacy Rule is directed at private sector players in health care, Medicare data and other health data in governmental databases are governed by a different statute, the Federal Privacy Act. State privacy laws add a layer of privacy protections, because the HIPAA Privacy Rule does not preempt more stringent provisions of state law. Several states, such as California, have state privacy laws that may cover commercial entities that are not subject to HIPAA, and which may provide more stringent privacy provisions in some instances. This means that companies that operate across multiple states may face different privacy regulatory requirements depending on where patients/clients are located.

When AI software is developed by a HIPAA-covered entity, such as at an academic medical center or teaching hospital that provides health care services, data must be maintained on HIPAA-compliant servers (even during model training) and not used or distributed to others without first complying with the HIPAA Privacy Rule’s requirements. These requirements include that HIPAA-covered entities must obtain individual authorizations before disclosing or using people’s health information, but there are many exceptions allowing data to be used or shared for use in AI systems without individual authorization. An important exception allows sharing and use of data that have been de-identified, or had key elements removed, according to HIPAA’s standards [88]. Also, individual authorization is not required (even if data are identifiable) for use in treatment, payment, and health care operations (such as quality improvement studies) [88]. This treatment exception is particularly broad, and the Office for Civil Rights in the U.S. Department of Health and Human Services, which administers the HIPAA Privacy Rule, has construed it as allowing the sharing of one person’s data for treatment of other people [89]. This would allow sharing and use of data for AI tools that aim to improve treatment of patients.
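As an illustration of what having “key elements removed” can mean, the sketch below applies a few transformations associated with HIPAA’s Safe Harbor de-identification method:

- dropping direct identifiers;
- reducing dates to the year;
- truncating ZIP codes to their first three digits, or to “000” for sparsely populated three-digit areas;
- grouping ages over 89 into a single category.

The sketch is deliberately incomplete. It covers only a handful of the 18 Safe Harbor identifier categories. The field names and the set of restricted ZIP prefixes are hypothetical placeholders. It should not be read as a compliant de-identification procedure.

```python
# Hypothetical placeholder set of three-digit ZIP prefixes whose combined
# population is 20,000 or fewer; a real implementation would derive this
# from current census data.
RESTRICTED_ZIP3 = {"036", "059"}

# Partial, illustrative list of direct identifiers (Safe Harbor lists 18 categories).
DIRECT_IDENTIFIERS = {"name", "mrn", "phone", "email"}


def safe_harbor_sketch(record: dict) -> dict:
    """Apply a few Safe Harbor-style transformations to one record (illustrative only)."""
    out = {}
    for field, value in record.items():
        if field in DIRECT_IDENTIFIERS:
            continue  # drop direct identifiers entirely
        if field == "zip":
            zip3 = str(value)[:3]
            out["zip3"] = "000" if zip3 in RESTRICTED_ZIP3 else zip3
        elif field.endswith("_date"):
            # Keep only the year of an ISO-formatted date (YYYY-MM-DD).
            out[field.replace("_date", "_year")] = str(value)[:4]
        elif field == "age":
            out["age"] = "90+" if value > 89 else value
        else:
            out[field] = value
    return out


if __name__ == "__main__":
    print(safe_harbor_sketch({"name": "Jane Doe", "mrn": "12345", "zip": "94305",
                              "admission_date": "2021-03-15", "age": 93, "dx": "E11"}))
```

Each transformation removes information a model might have used, such as exact dates and fine-grained geography. This trade-off is taken up later in this paper.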

Data also can be shared and used with public health authorities and their contractors, which could support data flows for public health AI systems [90]. Data can be shared for use in AI research without consent (including in identifiable form) pursuant to a waiver of authorization approved by an institutional review board or privacy board [90]. Such bodies sometimes balk at approving research uses of identifiable data, but the HIPAA Privacy Rule legally allows it, subject to HIPAA’s “minimum necessary” standard, which requires a determination that the identifiers are genuinely necessary to accomplish the purpose of the research [91,92]. These and various other exceptions, in theory, allow HIPAA-covered care providers to use and share data for development of AI tools. However, all of HIPAA’s authorization exceptions are permissive, in that they allow HIPAA-covered entities to share data but do not require them to do so.

Another concern is that much of today’s health-relevant data, such as those from fitness trackers and wearable health devices, exist outside the HIPAA-regulated environment. This is because, as discussed above, HIPAA regulates the behavior of HIPAA-covered entities and their business associates only, leaving out many other organizations that develop AI. This has two implications: (1) the lack of privacy protection is of concern to consumers, and (2) it can be hard to access these data, and to know how to do it ethically, absent HIPAA’s framework of authorization exceptions. Ethical standards for accessing data for responsible use in AI research and AI health tools are essential. Otherwise, public trust will be undermined.

A growing number of publicly available, de-identified datasets allow for model comparisons. Many are catalogued in the PhysioNet repository for biomedical data science, including the Medical Information Mart for Intensive Care (MIMIC) dataset of intensive care unit data [93,94]. Because most of these data come from research or hospital contexts, they highlight the need for more public, de-identified data from outpatient settings, including telemedicine and patient-driven home monitoring devices.

Systemic Biases in AI Algorithm Development

Beyond data standards and regulations, a major challenge for AI developers in the U.S. health care environment is the risk that AI technologies will incorporate racial, social, or economic biases into prediction or classification models. Even if training datasets perfectly reflected the U.S. general population, an AI system could still be biased if it were applied in a setting where patients differ from the U.S. population at large. Many biases, however, originate in the data themselves, reflecting broader historical racism and societal injustices that further perpetuate health care inequalities. Once these biased data are incorporated into ML algorithms, the biases cannot easily be interrogated and addressed.

For example, de-identified health care data from payers are increasingly available to predict which patients will incur higher or lower costs. But Black patients in the U.S. are disproportionately at risk for lower health care access and thus incur lower costs relative to their illness level (because of underutilization). This artificially lower cost occurs despite this population’s higher burden of social risk factors that increase the risk of poor health outcomes, such as social stressors related to hypertension or food insecurity that often worsens diabetes outcomes. Researchers have observed that some AI prediction models were built from cost data, without a correction for differential access, to determine which people need more outreach for home-based or community-based care. As a result, these models systematically underestimated the care needs of Black individuals [95].

Outside of the hospital and clinic settings, historically marginalized communities may face similar barriers to accessing technologies, algorithms, and devices. For consumer wearables, recent surveys suggest these barriers track income more than ethnicity. Use of smartwatches and fitness trackers correlates with household income, whereas ethnicity-based differences are less pronounced, with Black and Latinx Americans reporting usage rates equivalent to or higher than those of white Americans [96].
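The cost-as-proxy mechanism described in [95] can be reproduced with a toy example. The sketch below uses a small, entirely synthetic population in which two groups have identical illness burden. One group, assumed to have lower access, incurs 45 percent lower costs at every illness level. Ranking patients by cost then under-selects that group for outreach compared with ranking by illness. All numbers are invented for illustration and are not taken from the cited study.

```python
# Synthetic patients: (group, illness_score, annual_cost).
# Groups A and B have the same illness distribution, but B's costs are
# assumed to be 45% lower at each illness level because of reduced access.
patients = []
for illness in range(1, 11):
    patients.append(("A", illness, illness * 1000))
    patients.append(("B", illness, illness * 550))


def share_of_group_in_top(patients, key, group, k):
    """Fraction of the top-k patients (ranked by `key`) who belong to `group`."""
    ranked = sorted(patients, key=key, reverse=True)[:k]
    return sum(1 for p in ranked if p[0] == group) / k


k = 6  # hypothetical number of outreach slots
by_cost = share_of_group_in_top(patients, key=lambda p: p[2], group="B", k=k)
by_illness = share_of_group_in_top(patients, key=lambda p: p[1], group="B", k=k)
print(f"Group B share when ranking by cost:    {by_cost:.2f}")
print(f"Group B share when ranking by illness: {by_illness:.2f}")
```

With these inputs, group B receives one of six outreach slots when patients are ranked by cost, but half of the slots when they are ranked by illness. The label chosen for training, not the learning algorithm, produces the disparity.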

Developing AI tools is a process of active discovery that is also subject to counterintuitive complexities, which make removing bias from health care data especially difficult. For example, observing equal treatment among groups may actually indicate a highly inequitable AI model [97]. Some groups may warrant more attention because of disproportionate risk for a health care event, so treating them equally would be an error [98]. Bias in the data itself is also paired with bias in outcomes: AI models can predict the risk of an event such as health care utilization, but they can also suggest appropriate health care treatments. If the treatment recommendations are also biased, disadvantaged groups may receive erroneous advice more often, or simply not receive AI-aided advice. Meanwhile, their counterparts who are better represented in the data receive the advantages of AI-aided decision making [99]. To reiterate, AI systems and tools that use biased data or biased processes will further entrench and exacerbate existing inequities. These biases must be addressed before a system or tool is deployed.
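The point that equal treatment can mask inequity can be made concrete with two common group-level metrics:

- the selection rate, meaning the share of each group a model flags;
- the true positive rate, meaning the share of each group’s truly high-need patients who are flagged.

The sketch below uses invented labels and predictions. It shows a model with identical selection rates across two groups that nonetheless misses far more high-need patients in the group with higher underlying risk. These two metrics are one illustrative choice; the text does not prescribe a particular fairness criterion.

```python
def group_metrics(y_true, y_pred, groups, g):
    """Return (selection rate, true positive rate) for group `g`."""
    idx = [i for i, grp in enumerate(groups) if grp == g]
    selected = sum(y_pred[i] for i in idx)
    positives = [i for i in idx if y_true[i] == 1]
    tpr = sum(y_pred[i] for i in positives) / len(positives)
    return selected / len(idx), tpr


# Invented data: 10 patients per group; group B has more truly high-need patients.
groups = ["A"] * 10 + ["B"] * 10
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0] + [1, 1, 1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0] + [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]

for g in ("A", "B"):
    sel, tpr = group_metrics(y_true, y_pred, groups, g)
    print(f"group {g}: selection rate {sel:.1f}, true positive rate {tpr:.2f}")
```

Both groups have a selection rate of 0.2, so the model appears to treat them “equally.” Yet it identifies every high-need patient in group A and only one in three in group B.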

Insurance and Health Care Payment Reform

The U.S. health care payment landscape poses another logistical challenge, both for AI use outside of the hospital and clinic setting and for AI development and integration more broadly. Many health care payments from commercial insurers or government payers (e.g., Medicare Part B) to health care delivery entities are fee-for-service payments for in-person visits or procedures, although their share is shrinking. Health care delivery entities generate revenue by billing payers with attached billing codes that reference negotiated payments for different services, from routine office visits with a primary care provider to surgeries. Programs outside of the hospital and clinic setting are therefore incentivized to fit into the fee-for-service model if they are to be paid for by traditional payment mechanisms.

Telemedicine visits (video and phone) are now covered by most payers and, in the initial months of the COVID-19 pandemic, were reimbursed at a rate equal to in-person visits [100]. The tools used to deliver such services, however, are traditionally not reimbursed. For example, a physician could use many AI tools, including remote sensing tools, to improve the quality or precision of a diagnosis or therapeutic recommendation. Such tool use could be costly in personnel and computational time, and, as discussed earlier, these tools can have questionable validity. Yet the use of these tools would not necessarily be paid for, because it would be considered implicit in conducting a medical visit. By contrast, various diagnostic procedures with their own personnel and equipment costs (e.g., radiology) have their own payment rates.

Changing such policies to help pay for AI tools is one step toward encouraging AI applications both inside and outside of the hospital or clinic. The first billing code for an AI tool covers a tool that helps to detect diabetic retinopathy. Still, it is unclear at this point whether this new code will lead payers to accept similar codes and pay for AI services within a fee-for-service payment structure [101].

Much billing in the U.S. health care landscape remains fee-for-service, in which services are rendered and reimbursed according to negotiated rates for services that a payer covers. However, alternative payment models exist that may alter the AI payment landscape. These include capitation payments (per-patient, per-month payments), which payers could increase for practices or providers that incorporate high-quality, externally validated AI tools into their practice. They also include value-based payments for providers who show that their use of AI tools has improved outcomes. Capitation payments have been increasingly adopted for routine health care delivery in many managed-care environments, but there are as yet no adjustments for the use of AI tools. Value-based payments have to date failed to capture a majority of health care market share [102,103]. Where they are used, however, they may incentivize use of AI tools outside of the hospital or clinic if the tools improve clinical outcomes, whether or not the tools require intervention within a medical visit. Both capitation and value-based payments could be adjusted to explicitly reward the use of AI tools that produce better outcomes.
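The capitation mechanism can be written as a simple worked formula. The formula and its symbols are assumptions for illustration only, since no such adjustment currently exists. Suppose a practice has a panel of $N$ patients paid at a base rate $r$ per member per month (PMPM). Suppose also that a payer applies an uplift $\alpha$ when the practice uses externally validated AI tools, with the indicator $\mathbb{1}_{\text{AI}}$ equal to 1 in that case and 0 otherwise.

$$
P_{\text{annual}} = 12\,N\,r\,\bigl(1 + \alpha\,\mathbb{1}_{\text{AI}}\bigr)
$$

Take hypothetical values of $N = 2{,}000$ patients, $r = \$50$ PMPM, and $\alpha = 0.02$. The annual payment would be $12 \times 2{,}000 \times \$50 = \$1{,}200{,}000$ without the uplift and $\$1{,}224{,}000$ with it. The $\$24{,}000$ difference is the amount available to offset the personnel and computational costs of the tools.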

Quality Improvement and Algorithm Updates

Adoption and implementation of AI tools in clinical practice, particularly in telemedicine and virtual care environments, depend on solid quality improvement practices. They also depend on responsibly navigating the challenges of ownership, responsibility, decision making, and liability. As telehealth and virtual care platforms continue to improve their user experiences, it becomes critical that the AI tools they rely on have a built-in feedback process. These tools range widely:

- symptom checkers that direct providers toward considering particular diagnoses;
- scheduling and billing tools that aid patients;
- personalized recommendation systems that remind patients of routine cancer screening and available health coaching.

Many complex chronic diseases require detailed self-management. Examples include blood glucose monitoring and adjustments in daily calorie intake or insulin administration for diabetes [104,105], and management of diet, salt, exercise, and medication dosing after heart failure [106]. The key challenges in this subdomain are twofold. The first is appropriate data collection through patient-facing technologies, whether linked glucose monitors, blood pressure monitors, records of calories and types of food eaten, step counts, or other features. The second is the safe integration of AI algorithms and tools into the cautions, recommendations, and synthesized information delivered to patients.

AI-driven personal sensing algorithms will likely have limited shelf lives for a variety of reasons [39]. Given the rapid pace of development, there is considerable churn in both the software and the hardware used to measure these signals. As sensor software and hardware are updated, raw data signals will change. Patients will also change how they interact with this software and hardware, and where these digital interactions happen, which will require changes in the devices and signals that are monitored. Additionally, as modes of data collection become more precise (e.g., more advanced heart rate and glucose monitors), algorithms can be regularly retrained on these more reliable data to achieve greater predictive accuracy.
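One practical way to notice that a software or hardware update has changed a raw signal is to compare the distribution of incoming values against a reference window. The sketch below uses the population stability index (PSI). PSI is a common drift statistic, chosen here for illustration rather than prescribed by the text. The bin cut points, the 0.2 alert threshold (a widely used rule of thumb, not a clinical standard), and the sample heart-rate values are all assumptions.

```python
import bisect
import math


def bin_shares(values, cut_points):
    """Share of values in each bin; bins are split at the sorted cut points,
    with extra bins for values below the first cut and at/above the last."""
    counts = [0] * (len(cut_points) + 1)
    for v in values:
        counts[bisect.bisect_right(cut_points, v)] += 1
    n = len(values)
    # Floor empty bins at a small share so the log term stays finite.
    return [max(c / n, 1e-4) for c in counts]


def population_stability_index(reference, current, cut_points):
    """PSI = sum over bins of (current - reference) * ln(current / reference)."""
    ref = bin_shares(reference, cut_points)
    cur = bin_shares(current, cut_points)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


# Hypothetical heart-rate readings before and after a sensor firmware update.
reference = [62, 65, 68, 72, 74, 75, 78, 82, 85, 88]
current = [72, 75, 78, 82, 84, 85, 88, 92, 95, 98]
cuts = [60, 70, 80, 90]

psi = population_stability_index(reference, current, cuts)
if psi > 0.2:  # assumed rule-of-thumb threshold for a significant shift
    print(f"PSI = {psi:.2f}: signal distribution has shifted; review before reuse")
```

A check like this flags that the input has changed. It does not say whether the algorithm’s clinical performance has degraded, so it complements outcome-based monitoring rather than replacing it.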

For example, smartphone use has changed dramatically in recent years. Communication has shifted from voice calls to text messaging, and text messaging itself has moved from native SMS apps to third-party applications such as Facebook Messenger, Snapchat, and WhatsApp. Video conferencing was also rapidly adopted during the COVID-19 pandemic.

More fundamentally, the meaning of the raw signals may change over time as well. Language usage and even specific words that indicate clinically relevant effects or stressors have a temporal context that may change rapidly based on sociopolitical or other current events (e.g., the COVID-19 pandemic, the Black Lives Matter movement and associated protests, political election cycles). Patterns of movement and their implications can change as well (e.g., time spent at home or in the office). This limited shelf life for personal sensing algorithms must be explicitly acknowledged and processes must be developed to monitor and update the performance of the algorithms over time to keep them current and accurate.
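The monitoring process called for above can begin simply. A team can track a model’s accuracy on recently labeled cases and flag the model for review or retraining when performance falls below an agreed floor. The sketch below is a minimal version of that idea. The class name, the window size, and the accuracy floor are hypothetical choices. In practice, the metric would be chosen to fit the clinical task (for example, sensitivity for a screening tool).

```python
from collections import deque


class RollingPerformanceMonitor:
    """Tracks accuracy over the most recent `window` labeled predictions."""

    def __init__(self, window: int = 100, accuracy_floor: float = 0.8):
        self.results = deque(maxlen=window)  # True if prediction matched outcome
        self.accuracy_floor = accuracy_floor

    def record(self, prediction: int, outcome: int) -> None:
        self.results.append(prediction == outcome)

    def accuracy(self) -> float:
        return sum(self.results) / len(self.results) if self.results else float("nan")

    def needs_review(self) -> bool:
        # Only raise a flag once the window is full, to avoid noisy early alarms.
        full = len(self.results) == self.results.maxlen
        return full and self.accuracy() < self.accuracy_floor


monitor = RollingPerformanceMonitor(window=5, accuracy_floor=0.8)
for pred, actual in [(1, 1), (0, 0), (1, 0), (0, 1), (1, 1)]:
    monitor.record(pred, actual)
print(monitor.accuracy(), monitor.needs_review())  # prints: 0.6 True
```

A fixed-size window means older cases age out automatically. This is the behavior wanted when the meaning of the signals themselves drifts over time.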

AI Tool Integration into Provider Workflows Outside of the Hospital or Clinic

Most health care systems today train staff in and run quality improvement programs, often using a Lean framework for improvement [107,108]. These programs identify important problems, such as medical errors, and then cycle through planning, piloting, studying, and modifying workflows to reduce them. AI tools used outside of the hospital or clinic can be integrated into these workflows to improve the tools’ effectiveness, efficiency, and utilization. Such tools may be vital for quality improvement of services outside of hospital or clinic settings. They may also help scale and diffuse these technologies among teams that are initially skeptical about their value. Usability has significant implications for provider adoption. The growing volume of data collected through wearable technology can overwhelm providers who already experience high rates of alert fatigue and burnout. Ensuring usability entails developing an accessible user interface and presenting information in a clear and actionable way.
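One design pattern for the alert-fatigue problem described above is to consolidate repeated alerts. The goal is for a clinician to see one actionable notification per patient and issue within a time window, rather than one for every threshold crossing. The sketch below illustrates that pattern under stated assumptions: the alert fields, the 60-minute cooldown, and the function name are hypothetical. Any real suppression rule would need clinical review so that genuinely new or worsening events are not hidden.

```python
from datetime import datetime, timedelta


def consolidate_alerts(alerts, cooldown=timedelta(minutes=60)):
    """Show the first alert per (patient, alert type); fold later repeats that
    arrive within `cooldown` of the last shown alert into a repeat count."""
    last_shown = {}
    shown = []
    for alert in sorted(alerts, key=lambda a: a["time"]):
        key = (alert["patient_id"], alert["type"])
        prev = last_shown.get(key)
        if prev is not None and alert["time"] - prev["time"] < cooldown:
            prev["suppressed_repeats"] += 1
            continue
        entry = dict(alert, suppressed_repeats=0)
        last_shown[key] = entry
        shown.append(entry)
    return shown


t0 = datetime(2021, 1, 1, 8, 0)
raw = [
    {"patient_id": 1, "type": "high_glucose", "time": t0},
    {"patient_id": 1, "type": "high_glucose", "time": t0 + timedelta(minutes=10)},
    {"patient_id": 1, "type": "high_glucose", "time": t0 + timedelta(minutes=20)},
    {"patient_id": 2, "type": "low_spo2", "time": t0 + timedelta(minutes=5)},
    {"patient_id": 1, "type": "high_glucose", "time": t0 + timedelta(minutes=90)},
]
for a in consolidate_alerts(raw):
    print(a["patient_id"], a["type"], a["time"].time(), "repeats folded:", a["suppressed_repeats"])
```

Reporting the folded repeat count, rather than discarding repeats silently, keeps the information available to the clinician while reducing the number of interruptions.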

Inherent to the implementation and improvement process is the dilemma of how to ensure that the business models underlying AI tool innovation are tailored to their users. It is often assumed that AI tools will have a single user: a provider or a patient. In practice, however, AI tools are often used by mixed groups. The tools may be available in shared environments, or patients may move from the home to clinical visits where providers use the tools and show patients their results or visualizations. It is therefore important to consider how to communicate with mixed groups of users [109].

Practical Steps for Integration of New AI Tools into the U.S. Health System

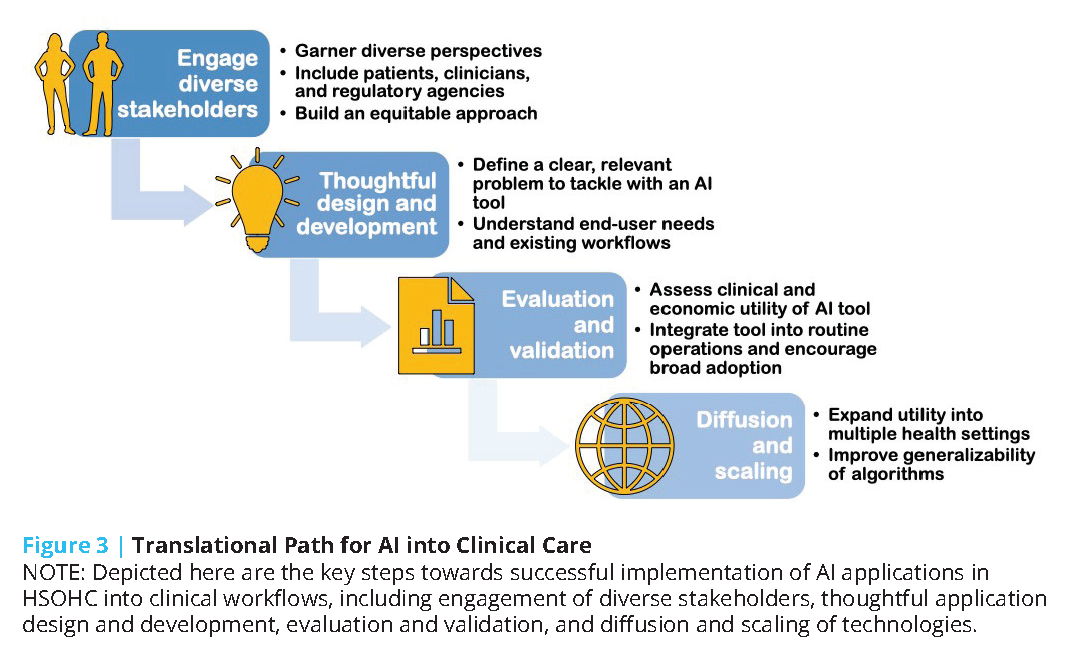

To help overcome the challenges of AI tool development and deployment, the authors of this discussion paper suggest considering a series of steps for taking a model from design to health system integration and highlight challenges specific to each step (see Figure 3).

The first step on the translational path for AI into clinical care is to engage a wide range of stakeholders to ensure that the tools developed account for a wide range of perspectives, including patients and clinicians across the care continuum, and that the approach to building the technology does not “automate inequality” [110] or build “weapons of math destruction” [111].

The second step should be careful and thoughtful model design and development. During this step, AI developers often curate a dataset, secure initial research funding to develop a model, and build an interdisciplinary team with technical and clinical experts. A critical challenge during this stage is to develop a product that solves a real, relevant problem for end users. AI developers looking to translate their technologies into practice need to approach the technical task of training a model as part of a product development process. As described by Clayton Christensen, “when people find themselves needing to get a job done, they essentially hire products to do that job for them” [112]. For an AI model to be used in practice, the model must successfully complete a job for an end user, be it a patient or an expert clinician. This goal usually involves more than a straightforward modeling task. Models need to be conceptualized as a single component within the more complex system required to deliver value to users. Deeply understanding the “job to be done” requires close collaboration with end users and across disciplines [113]. In contrast, many AI and ML technologies are built without clinical collaborators and leverage readily available datasets to model a small set of outcomes [114,115]. Teams that successfully navigate the design and development step deeply understand user needs and have developed an AI technology potentially able to solve a problem.

The third step on the translational path is to evaluate and validate the new AI tool. During this step, AI developers often evaluate the clinical and economic utility of a model using retrospective, population-representative data. Models may then undergo temporal and external validation, and then be integrated into a care delivery setting to assess clinical and economic impact. Unfortunately, many AI models undergo in silico experiments using retrospective data and do not progress further [116]. These experiments can provide preliminary data on the potential utility of a model, but they do not provide evidence of realized impact. Prospective implementation in clinical care requires both clinical and technical integration of the AI model into routine operations. Technical integration requires sophisticated infrastructure that automates and monitors the extract, transform, and load processes that ingest data from data sources and write model output into workflow systems [117]. Clinical integration requires the design and successful implementation of clinical workflows for end users. There is a rich literature on innovation adoption in health care, and adoption barriers and facilitators specific to AI are emerging [118,119]. Teams that successfully navigate the “evaluate and validate” step are able to demonstrate the clinical and economic impact of an AI model within at least one setting.
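Temporal validation, one of the steps named above, can be illustrated by evaluating a model on records from a later period than the ones used to develop it, rather than on a random split. The sketch below shows only the splitting and evaluation logic. It assumes a list of hypothetical records with a date, a score from a model developed on the earlier records, and an observed outcome. It computes the area under the ROC curve (AUROC) using the rank-based Mann-Whitney formulation. Model training is omitted.

```python
from datetime import date


def temporal_split(records, cutoff):
    """Records before `cutoff` are for development; records on/after it are held out."""
    dev = [r for r in records if r["date"] < cutoff]
    test = [r for r in records if r["date"] >= cutoff]
    return dev, test


def auroc(scores, labels):
    """Probability that a random positive outscores a random negative (ties count 0.5)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))


# Hypothetical scored records; the scores are assumed to come from a model
# developed only on records dated before the cutoff.
records = [
    {"date": date(2019, 3, 1), "score": 0.9, "outcome": 1},
    {"date": date(2019, 6, 1), "score": 0.2, "outcome": 0},
    {"date": date(2020, 2, 1), "score": 0.7, "outcome": 1},
    {"date": date(2020, 4, 1), "score": 0.6, "outcome": 0},
    {"date": date(2020, 5, 1), "score": 0.3, "outcome": 0},
    {"date": date(2020, 8, 1), "score": 0.4, "outcome": 1},
]
dev, test = temporal_split(records, cutoff=date(2020, 1, 1))
print(len(dev), len(test), auroc([r["score"] for r in test], [r["outcome"] for r in test]))
```

A temporal split mimics deployment, where a model trained on the past is applied to the future. It therefore tends to expose the kinds of drift that a random split hides.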

The fourth and fifth steps on the translational path are to diffuse and scale. To date, no AI model has efficiently scaled across all health care settings. Most models have been validated within silos or single settings, and only a small number of AI technologies have undergone peer-reviewed external validation [120]. Furthermore, while some AI developers externally validate the same model in multiple settings, other teams take a different approach. For example, there is ongoing research into a generalizable approach for training site-specific models of hospital-acquired Clostridium difficile infection, in which each hospital has its own local model [120,121]. Externally validating and scaling a model across settings also introduces data quality challenges. Institutional datasets are not interoperable, and significant effort is required to harmonize data across settings [122].
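The site-specific approach mentioned above is one option. A lighter-weight alternative sometimes used when moving a model between sites keeps the model’s ranking of patients but shifts its predicted probabilities to match local outcome prevalence. The sketch below illustrates that idea as a prior-shift correction on the log-odds scale, which assumes that only the outcome prevalence differs between sites. This is a swapped-in technique for illustration, not the method of the cited C. difficile work, and the prevalence figures are hypothetical.

```python
import math


def logit(p: float) -> float:
    return math.log(p / (1 - p))


def sigmoid(x: float) -> float:
    return 1 / (1 + math.exp(-x))


def adjust_for_local_prevalence(p_model: float, train_prev: float, local_prev: float) -> float:
    """Shift a predicted probability on the log-odds scale by the difference
    between local and training-site outcome prevalence (prior-shift correction)."""
    return sigmoid(logit(p_model) + logit(local_prev) - logit(train_prev))


# Hypothetical: model trained where 2% of admissions had the outcome,
# deployed at a hospital where 1% do.
for p in (0.05, 0.20, 0.50):
    print(p, round(adjust_for_local_prevalence(p, train_prev=0.02, local_prev=0.01), 3))
```

Because the shift is monotonic, the order of patients is unchanged and only the probabilities move. If case mix or data definitions also differ between sites, this correction is not enough, and local retraining or full external validation is needed.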

Equitable and Humanistic Deployment of Health-Related AI Tools: Legal and Ethical Considerations

Health-related AI tools designed for use outside hospitals and clinics present special legal, regulatory, and ethical challenges.

Chief among the legal challenges are:

- the safety of patients, consumers, and other populations whose well-being may depend on these systems; and

- concerns about accountability and liability for errors and injuries that will inevitably occur even if these tools deliver hoped-for benefits such as improving patient care and public health, reducing health disparities, and helping to control health care costs.

Major ethical challenges are:

- ensuring privacy and other rights of persons whose data will be used or stored in these systems;

- ensuring ethical access to high-quality and inclusive (population-representative) input data sets capable of producing accurate, generalizable, and unbiased results; and

- ensuring ethical implementation of these tools in home care and other diverse settings.

Safety Oversight

The sheer diversity of AI tools discussed in this paper implies a nonuniform and, at times, incomplete landscape of safety oversight. Policymakers and the public often look to the FDA to ensure the safety of health-related products, and the FDA is currently working to develop suitable frameworks for regulating software as a medical device [123], including AI/ML software [124]. For software intended for use outside traditional care settings, however, the FDA cannot by itself ensure safety. Involvement of state regulators, private sector institutional and professional bodies, as well as other state and federal consumer safety regulators such as the Federal Trade Commission and Consumer Product Safety Commission, will also be required. Coordination is crucial, however, and the FDA can use its informational powers to inform and engage the necessary dialogue and cooperation among concerned oversight bodies: state, federal, and nongovernmental.

The 21st Century Cures Act of 2016 delineated types of health-related software that the FDA can and cannot regulate [125]. In 2017, the agency announced its Digital Health Innovation Action Plan [126] followed by the Digital Health Software Precertification (Pre-Cert) Program [127] and published final or draft guidance documents covering various relevant software categories, including consumer grade general wellness devices such as wearable fitness trackers [128], mobile medical applications [129], and clinical decision support software [130].

The software discussed in this paper raises special concerns when it comes to regulatory oversight. First, AI software intended for population and public health applications is not subject to FDA oversight, because it does not fit within Congress’s definition of an FDA-regulable device intended for use in diagnosing, treating, or preventing disease of individuals in a clinical setting [131]. Second, there is a potential for software designed for one intended use to be repurposed for new uses where its risk profile is less understood. For example, consumer grade wearables and at-home monitoring devices, when marketed as general wellness devices, lie outside the FDA’s jurisdiction and do not receive the FDA’s safety oversight. These devices might be repurposed for medical uses by consumers or by developers of software applications. Repurposing raises difficult questions about the FDA’s capacity to detect and regulate potential misuses of these devices [132]. Consumers may not understand the limits of the FDA’s regulatory jurisdiction and assume that general wellness devices are regulated as medical devices because they touch on health concerns.

Also pertinent to the home care setting, the FDA tried in 2017 to address “patient decision support” (PDS) software, where the user is a patient, family member, or other layperson (paid or unpaid caregivers) in the home care setting (as opposed to a medical professional in a clinic or hospital), but subsequently eliminated this topic from its 2019 clinical decision support draft policy [130]. The regulatory framework for PDS software remains vague. Even when a trained medical professional uses clinical decision support software (whether in a clinic, hospital, or HSOHC), patient safety depends heavily on appropriate application of the software. This is primarily a medical practice issue, rather than a medical product safety issue that the FDA can regulate. State agencies that license physicians, nurses, and home care agencies have a crucial role to play, as do private-sector institutional and professional bodies that oversee care in HSOHC. A singular focus on the FDA’s role as a potential software regulator distracts from the need for other regulatory bodies to engage with the challenge of ensuring proper oversight for health care workers applying AI/ML software inside and outside traditional clinical settings.

Accountability and Liability Issues

AI tools for public health raise accountability concerns for the agencies that rely on them, but appear less likely to generate tort liability, because of the difficulty of tracing individual injuries to the use of the software and because public health agencies often would apply such software to perform discretionary functions that enjoy sovereign immunity from tort lawsuits.

Concerns about accountability and liability are greater for private-sector users, such as a health care institution applying AI population health tools or quality improvement software that recommends approaches that, while beneficial on the whole, may result in injuries to specific patients. It remains unclear what duties institutions have (either ethically or legally, as elaborated in future tort suits) to inform patients about the objectives of population health software (for example, is the software programmed to reduce health care costs or to ration access to scarce facilities such as ICU beds) and how these objectives may affect individual patients’ care.

More generally, AI tools are relatively new, so there is not yet a well-developed body of case law with which to predict the tort causes of action that courts will recognize or the doctrines courts may apply when hearing those claims. Possible claims may include malpractice claims against physicians, nurses, or home care providers who rely on AI decision-support tools; direct suits against home care agencies and institutions for lax policies and supervision in using such systems; and suits against software developers including possible product liability actions [133]. Scholars are actively engaged in exploring the liability landscape, but uncertainties will remain until courts resolve cases in this field.

Data Privacy and Individual Consent

Many types of data may fall outside the umbrella of HIPAA privacy protections. These include data that would be useful for training and ongoing operation of AI health systems, as well as data such software may generate and store about the individuals who use and rely on it. As discussed above, HIPAA privacy protections generally apply only to HIPAA-covered entities such as providers and payers for health care services. HIPAA’s coverage excludes many device and software developers, governmental public health agencies (which may be governed by the Federal Privacy Act), and research institutions that are not affiliated with HIPAA-covered clinics or teaching hospitals. Data generated and used in HSOHC have spotty privacy protections, subject largely to a patchwork of state privacy laws. Data bearing on social determinants of health, behavioral factors, and environmental exposures are crucial in developing AI tools tailored to diverse subpopulations. Yet such data often arise in non-HIPAA-covered environments with weak oversight of sharing and data uses, creating ethical challenges such as “surveillance capitalism” [134] and “automating inequality” [110]. The U.S. lacks a uniform floor of federal privacy protections for all types of health-relevant data in all settings (medical and nonmedical). This gap may hinder future development of promising AI technologies in the U.S. and undermine public trust in the AI tools that are developed.

The lack of uniform privacy protections in the U.S. also encourages heavy reliance on de-identified data by AI system developers, who are navigating the ethical challenges of data uses without clear regulatory protections and guidance. The reliance on de-identified data, however, is a “second-best” solution that can diminish the accuracy and generalizability of AI tools available in the U.S. As to the concerns, noted earlier, about the risks of re-identification of such data [135], there is scholarly debate about how real these risks actually are, with empirical studies indicating the risk is considerably lower than portrayed in the popular press [136]. On one hand, there is increased awareness that de-identified data sets can be combined to re-identify individuals, a process known as data aggregation, suggesting that de-identification may not be a complete solution to privacy concerns [137]. The public is concerned about wide dissemination of their de-identified data. On the other hand, a serious—and less understood—concern relates to the quality and generalizability of AI software developed using de-identified data. The process of de-identifying data diminishes its usefulness and can hinder the creation of high-quality longitudinal data sets to support accurate results from AI health tools. Moreover, de-identification strips away information that may be needed to audit data sets to ensure that they are inclusive and generalizable across all population subgroups. This can increase the danger of biased data sets that fail to produce accurate results for all racial, geographical, and gender-related subgroups [138]. Rather than rely on de-identification as a weak proxy for privacy protection, the U.S. needs a strong framework of meaningful privacy protections that would allow the best available data to be used.
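The aggregation risk described above is often assessed with k-anonymity. Quasi-identifiers are attributes, such as ZIP code prefix, birth year, and sex, that are not identifying on their own but can be linked to outside data. A data set is k-anonymous with respect to a set of quasi-identifiers if every combination of their values is shared by at least k records. The sketch below computes that k for a small hypothetical table. The choice of columns is an assumption, and k-anonymity is one of several re-identification risk measures rather than a guarantee of privacy.

```python
from collections import Counter


def equivalence_classes(rows, quasi_identifiers):
    """Count records sharing each combination of quasi-identifier values."""
    return Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)


def k_anonymity(rows, quasi_identifiers):
    """Smallest equivalence-class size over the given quasi-identifier columns."""
    return min(equivalence_classes(rows, quasi_identifiers).values())


def risky_groups(rows, quasi_identifiers, k):
    """Quasi-identifier combinations shared by fewer than k records."""
    classes = equivalence_classes(rows, quasi_identifiers)
    return [combo for combo, n in classes.items() if n < k]


rows = [
    {"zip3": "943", "birth_year": 1980, "sex": "F", "dx": "asthma"},
    {"zip3": "943", "birth_year": 1980, "sex": "F", "dx": "diabetes"},
    {"zip3": "943", "birth_year": 1975, "sex": "M", "dx": "hypertension"},
    {"zip3": "100", "birth_year": 1990, "sex": "F", "dx": "asthma"},
    {"zip3": "100", "birth_year": 1990, "sex": "F", "dx": "depression"},
]
qi = ["zip3", "birth_year", "sex"]
print(k_anonymity(rows, qi))  # prints 1: one record is unique on these columns
print(risky_groups(rows, qi, k=2))
```

The usual remedy for a low k is further generalization (e.g., wider age bands or coarser geography). This illustrates the trade-off noted above: each step that lowers re-identification risk also removes detail that AI tools and bias audits may need.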

As AI systems move into health care settings, patients may not be aware when AI systems are being used to inform their care [139]. Whether and when informed consent is appropriate has not received adequate discussion [140]. On one hand, it is not standard practice for physicians to inform patients about every medical device or every software tool used in their care. On the other hand, some functions performed by AI software tools (such as deciding which therapy is best for the patient) may rise to a level of materiality where consent becomes appropriate. The bioethics community, health care accreditation organizations, and state medical regulators need to engage with the challenge of defining when, and under what circumstances, informed consent may be needed. A related topic is how future consent standards, designed for traditional clinic and hospital care settings, could be applied and enforced in HSOHC.

Ensuring Equitable Use of AI in Health

mHealth apps can address many of the current disparities that result in unmet health care needs. Mental health offers an example: the geographic distribution of licensed clinical psychologists across the U.S. is highly uneven, with large swaths of rural America significantly underserved [141]. Office visits with psychiatrists and psychologists can be infrequent and difficult to schedule, and they are typically not available at moments of peak need. Mental health care is costly, and those with the greatest need are often uninsured or otherwise unable to afford necessary care [142]. In contrast, access to mental health care via mHealth apps is not limited by geographic or temporal constraints. Furthermore, 81 percent of Americans now own a smartphone, up from just 35 percent in 2011 [143]. Equally important, Black and Latinx adults own smartphones at rates similar to those of white adults and are more likely than white adults to use smartphones to access information about health conditions [144].

Nevertheless, equitable access to mHealth apps and technology will not happen without deliberate attention and planning. For example, the majority of telehealth visits during the initial months of the COVID-19 pandemic were based on pre-existing provider-patient relationships [145]. This emphasis on continuity of care, rather than on establishing new care relationships, suggests that individuals in medically underserved communities may not be benefiting from the shift to digital home health. Likewise, individuals who are already underserved because of racial and other disparities may struggle to access mHealth apps or other AI applications. mHealth apps powered by AI personal sensing can help address racial and other health disparities, but only if they are thoughtfully designed, developed, and distributed [146] with the intention of reducing biases and narrowing the digital divide. The development of AI algorithms that use personal sensing signals must include data from racial and ethnic minorities and other underserved groups to account for differences in how these signals function across groups [147]. Algorithms must also be carefully designed and scrutinized so that they do not instantiate, and thereby reinforce, contemporary racial and other biases [111]. Thoughtful infrastructure, measurement, regulation, and accountability are necessary for the distribution and oversight of these mHealth apps.
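The call to scrutinize algorithms for differences across groups can be illustrated with a minimal per-subgroup audit: compute a model's accuracy separately for each group and flag any group that trails the best-performing group by more than a tolerance. This is a sketch under stated assumptions: the group labels, outcomes, predictions, and the 0.05 tolerance are all hypothetical, and accuracy is only one of several metrics such an audit might use. The audit also depends on having group labels attached to each record, which is the kind of information de-identification can strip away.

```python
from collections import defaultdict


def accuracy_by_group(groups, y_true, y_pred):
    """Return {group: accuracy}, computed separately for each subgroup."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, pred in zip(groups, y_true, y_pred):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}


def flag_underperforming(acc, tolerance=0.05):
    """Return a sorted list of groups whose accuracy falls more than
    `tolerance` below the best-performing group. The default tolerance is
    a hypothetical threshold, not a recognized standard."""
    best = max(acc.values())
    return sorted(g for g, a in acc.items() if a < best - tolerance)


# Hypothetical toy data: subgroup label, true outcome, and model prediction
# for eight individuals.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 1, 0, 0]

acc = accuracy_by_group(groups, y_true, y_pred)
print(acc)                        # {'A': 1.0, 'B': 0.5}
print(flag_underperforming(acc))  # ['B']
```

An overall accuracy of 75 percent would hide the fact that the model is right for every member of group A but only half of group B, which is the kind of gap the text warns against.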

Setting the Stage for Impactful AI Tools in HSOHC: Calls to Action

AI-powered digital health technology is a rapidly developing sector that is poised to significantly alter the current landscape of health care delivery in the U.S., particularly as care extends beyond the walls of the hospital and clinic. As mHealth applications and personal health devices, including wearable technology, become increasingly ubiquitous, they enable large-scale collection of detailed, continuous health data that, when processed through AI, can support individual and population health. As the examples discussed here illustrate, these tools signal a paradigm shift from the traditional notion of a clinical point of care to one that meets people where they are. However, widespread adoption, secure implementation, and integration of these novel technologies into existing health care infrastructure pose major legal and ethical challenges. Concrete steps toward ensuring the success of AI health tools outside the hospital and clinic can include:

- Building broad regulatory oversight to promote patient safety by engaging organizations beyond the FDA, including other state, federal, and nongovernmental oversight bodies;

- Reconsidering the definition and implications of informed consent in the context of big data, AI algorithm development, and patient privacy in HSOHC;

- Developing policy initiatives that push for greater data interoperability and device integration standards with hospital clinical systems, so as to make these tools easier for providers and consumers/patients to use;

- Recognizing and mitigating biases (racial, socioeconomic) in both AI algorithms and access to personal health devices by including population-representative data in AI development and increasing affordability and access (or insurance coverage) of personal health technology, respectively;

- Advocating for insurance and health care payment reform that incentivizes adoption of AI tools into physicians’ workflow; and

- Establishing clarity in regard to liability for applications of health AI, with an eye to supporting rather than hindering innovation in this field.

It is important to acknowledge that many of these steps necessitate fundamental changes in governmental oversight of health care, industry-hospital communication, and the patient-provider relationship itself. However, approaching novel applications of AI in health with a critical but receptive mindset will enable the U.S. to lead in ushering in the next generation of health care innovation.

Join the conversation!

Tweet this! AI tools and applications have the potential to revolutionize health care and focus it on where patients live – outside the hospital and clinic. A new #NAMPerspectives outlines areas of opportunity and hurdles to overcome: https://doi.org/10.31478/202011f #NAMLeadershipConsortium

Tweet this! The adoption of AI tools and technologies can be the start of a paradigm shift in health care toward more precise, economical, integrated, and equitable care. A new #NAMPerspectives provides more detail: https://doi.org/10.31478/202011f #NAMLeadershipConsortium

Tweet this! Broader adoption of AI tech, which show promise to improve health care outcomes, must also deeply consider ethical and equity challenges like patient privacy & exacerbating existing inequities. Read a new #NAMPerspectives: https://doi.org/10.31478/202011f #NAMLeadershipConsortium

References

- Tikkanen, R. and M. K. Abrams. 2020. U.S. Health Care from a Global Perspective, 2019: Higher Spending, Worse Outcomes? The Commonwealth Fund. Available at: https://www.commonwealthfund.org/publications/issue-briefs/2020/jan/us-health-care-global-perspective-2019 (accessed September 29, 2020).

- American Medical Association. 2019. Medicare’s major new primary care pay model: Know the facts. Available at: https://www.ama-assn.org/practice-management/payment-delivery-models/medicare-s-major-new-primary-care-pay-model-know-facts (accessed October 26, 2020).

- American Academy of Family Physicians. 2020. Chronic Care Management. Available at: https://www.aafp.org/family-physician/practice-and-career/getting-paid/coding/chronic-care-management.html (accessed October 26, 2020).

- National Science and Technology Council, Committee on Technology, Executive Office of the President. 2016. Preparing for the Future of Artificial Intelligence. Available at: https://obamawhitehouse.archives.gov/sites/default/files/whitehouse_files/microsites/ostp/NSTC/preparing_for_the_future_of_ai.pdf (accessed October 26, 2020).

- Matheny, M., S. Thadaney Israni, M. Ahmed, and D. Whicher, Editors. 2019. Artificial Intelligence in Health Care: The Hope, the Hype, the Promise, the Peril. NAM Special Publication. Washington, DC: National Academy of Medicine.

- Institute of Medicine. 2012. The Role of Telehealth in an Evolving Health Care Environment: Workshop Summary. Washington, DC: The National Academies Press. https://doi.org/10.17226/13466.

- Kuziemsky, C., A. J. Maeder, O. John, S. B. Gogia, A. Basu, S. Meher, and M. Ito. 2019. Role of Artificial Intelligence within the Telehealth Domain. Yearbook of Medical Informatics 28(01): 035-040. https://doi.org/10.1055/s-0039-1677897

- Wosik, J., M. Fudim, B. Cameron, Z. F. Gellad, A. Cho, D. Phinney, S. Curtis, M. Roman, E. G. Poon, J. Ferranti, J. N. Katz, and J. Tcheng. 2020. Telehealth transformation: COVID-19 and the rise of virtual care. Journal of the American Medical Informatics Association 27(6): 957-962. https://doi.org/10.1093/jamia/ocaa067

- Bitran, H. and J. Gabarra. 2020. Delivering information and eliminating bottlenecks with CDC’s COVID-19 assessment bot. Available at: https://blogs.microsoft.com/blog/2020/03/20/delivering-information-and-eliminating-bottlenecks-with-cdcs-covid-19-assessment-bot/ (accessed September 29, 2020).

- Centers for Disease Control and Prevention. 2020. COVID-19 Testing Overview. Available at: https://www.cdc.gov/coronavirus/2019-ncov/symptoms-testing/testing.html (accessed October 26, 2020).

- Menon, R. 2020. Virtual Patient Care Using AI. Forbes, March 26, 2020. Available at: https://www.forbes.com/sites/forbestechcouncil/2020/03/26/virtual-patient-care-using-ai/#18befa3be880 (accessed September 29, 2020).

- Haque, S. N. 2020. Telehealth Beyond COVID-19. Technology in Mental Health. https://doi.org/10.1176/appi.ps.202000368

- Tran, V-T., C. Riveros, and P. Ravaud. 2019. Patients’ views of wearable devices and AI in healthcare: findings from the ComPaRe e-cohort. npj Digital Medicine 2(53). https://doi.org/10.1038/s41746-019-0132-y

- U.S. Food and Drug Administration (FDA). 2018. FDA permits marketing of artificial intelligence-based device to detect certain diabetes-related eye problems. Available at: https://www.fda.gov/news-events/press-announcements/fda-permits-marketing-artificial-intelligence-based-device-detect-certain-diabetes-related-eye (accessed October 30, 2020).

- Abràmoff, M. D., P. T. Lavin, M. Birch, N. Shah, and J. C. Folk. 2018. Pivotal trial of an autonomous AI-based diagnostic system for detection of diabetic retinopathy in primary care offices. npj Digital Medicine 1(39). https://doi.org/10.1038/s41746-018-0040-6

- Oka, R., A. Nomura, A. Yasugi, M. Kometani, Y. Gondoh, K. Yoshimura, and T. Yoneda. 2019. Study Protocol for the Effects of Artificial Intelligence (AI)-Supported Automated Nutritional Intervention on Glycemic Control in Patients with Type 2 Diabetes Mellitus. Diabetes Therapy 10: 1151-1161. https://doi.org/10.1007/s13300-019-0595-5

- Everett, E., B. Kane, A. Yoo, A. Dobs, and N. Mathioudakis. 2018. A Novel Approach for Fully Automated, Personalized Health Coaching for Adults with Prediabetes: Pilot Clinical Trial. Journal of Medical Internet Research 20(2): e72. https://doi.org/10.2196/jmir.9723

- Nimri, R., N. Bratina, O. Kordonouri, M. A. Stefanija, M. Fath, T. Biester, I. Muller, E. Atlas, S. Miller, A. Fogel, M. Phillip, T. Danne, and T. Battelino. 2016. MD-Logic overnight type 1 diabetes control in home settings: A multi-center, multi-national, single blind randomized trial. Diabetes, Obesity, and Metabolism. https://doi.org/10.1111/dom.12852

- Brierley, C. 2020. World’s first artificial pancreas app licensed for people with type 1 diabetes in UK. Medical Xpress, March 16, 2020. Available at: https://medicalxpress.com/news/2020-03-world-artificial-pancreas-app-people.html (accessed November 5, 2020).

- Quinn, C. C., S. S. Clough, J. M. Minor, D. Lender, M. C. Okafor, and A. Gruber-Baldini. 2008. WellDoc Mobile Diabetes Management Randomized Controlled Trial: Change in Clinical and Behavioral Outcomes and Patient and Physician Satisfaction. Diabetes Technology and Therapeutics. https://doi.org/10.1089/dia.2008.0283

- Quinn, C. C., M. D. Shardell, M. L. Terrin, E. A. Barr, S. H. Ballew, and A. L. Gruber-Baldini. 2011. Cluster-Randomized Trial of a Mobile Phone Personalized Behavioral Intervention for Blood Glucose Control. Diabetes Care 34(9): 1934-1942. https://doi.org/10.2337/dc11-0366

- Insurancenewsnet.com. 2020. Binah.ai Awarded Best Digital Health Innovation by Juniper Research. Available at: https://insurancenewsnet.com/oarticle/binah-ai-awarded-best-digital-health-innovation-by-juniperresearch#.X6SElmhKjIW (accessed November 5, 2020).

- Banerjee, R., A. D. Choudhury, A. Sinha, and A. Visvanathan. 2014. HeartSense: smart phones to estimate blood pressure from photoplethysmography. SenSys ’14: Proceedings of the 12th ACM Conference on Embedded Network Sensor Systems. https://doi.org/10.1145/2668332.2668378

- Binkley, P. F. 2003. Predicting the potential of wearable technology. IEEE Engineering in Medicine and Biology Magazine 22(3). https://doi.org/10.1109/MEMB.2003.1213623

- Su, J. 2018. Apple Watch 4 Is Now An FDA Class 2 Medical Device: Detects Falls, Irregular Heart Rhythm. Forbes, September 14, 2018. Available at: https://www.forbes.com/sites/jeanbaptiste/2018/09/14/apple-watch-4-is-now-an-fda-class-2-medical-device-detects-falls-irregular-heartythm/?sh=3ddcdf2b2071 (accessed October 30, 2020).

- Koshy, A. N., J. K. Sajeev, N. Nerlekar, A. J. Brown, K. Rajakariar, M. Zureik, M. C. Wong, L. Roberts, M. Street, J. Cooke, and A. W. Teh. 2018. Smart watches for heart rate assessment in atrial arrhythmias. International Journal of Cardiology 266(1): 124-127. https://doi.org/10.1016/j.ijcard.2018.02.073

- Wang, R., G. Blackburn, M. Desai, D. Phelan, L. Gillinov, P. Houghtaling, and M. Gillinov. 2017. Accuracy of Wrist-Worn Heart Rate Monitors. JAMA Cardiology 2(1): 104-106. https://doi.org/10.1001/jamacardio.2016.3340

- Centers for Disease Control and Prevention. n.d. Facts About Hypertension. Available at: https://www.cdc.gov/bloodpressure/facts.htm (accessed October 30, 2020).

- Muntner, P., S. T. Hardy, L. J. Fine, B. C. Jaeger, G. Wozniak, E. B. Levitan, and L. D. Colantonio. 2020. Trends in Blood Pressure Control Among US Adults With Hypertension, 1999-2000 to 2017-2018. JAMA 324(12): 1190-1200. https://doi.org/10.1001/jama.2020.14545

- Omron. n.d. Blood Pressure Monitors. Available at: https://omronhealthcare.com/bloodpressure/ (accessed November 5, 2020).